the Creative Commons Attribution 4.0 License.

Review article: Scaling, dynamical regimes, and stratification. How long does weather last? How big is a cloud?

Until the 1980s, scaling notions were restricted to self-similar homogeneous special cases. I review developments over the last decades, especially in multifractals and generalized scale invariance (GSI). The former is necessary for characterizing and modelling strongly intermittent scaling processes, while the GSI formalism extends scaling to strongly anisotropic (especially stratified) systems. Both of these generalizations are necessary for atmospheric applications. The theory and some of the now burgeoning empirical evidence in its favour are reviewed.

Scaling can now be understood as a very general symmetry principle. It is needed to clarify and quantify the notion of dynamical regimes. In addition to the weather and climate, there is an intermediate “macroweather regime”, and at timescales beyond the climate regime (up to Milankovitch scales), there are macroclimate and megaclimate regimes. By objectively distinguishing weather from macroweather, scaling answers the question “how long does weather last?”. Dealing with anisotropic scaling systems – notably atmospheric stratification – requires new (non-Euclidean) definitions of the notion of scale itself. These are needed to answer the question “how big is a cloud?”. In anisotropic scaling systems, morphologies of structures change systematically with scale even though there is no characteristic size. GSI shows that it is unwarranted to infer dynamical processes or mechanisms from morphology.

Two “sticking points” preventing more widespread acceptance of the scaling paradigm are also discussed. The first is an often implicit phenomenological “scalebound” thinking that postulates a priori the existence of new mechanisms or processes every factor of 2 or so in scale. The second obstacle is the reluctance to abandon isotropic theories of turbulence and accept that the atmosphere's scaling is anisotropic. Indeed, there currently appears to be no empirical evidence that the turbulence in any atmospheric field is isotropic.

Most atmospheric scientists rely on general circulation models, and these are scaling – they inherited the symmetry from the (scaling) primitive equations upon which they are built. Therefore, the real consequence of ignoring wide-range scaling is that it blinds us to alternative scaling approaches to macroweather and climate – especially to new models for long-range forecasts and to new scaling approaches to climate projections. Such stochastic alternatives are increasingly needed, notably to reduce uncertainties in climate projections to the year 2100.

1.1 Dynamical ranges, fluctuations, and scales

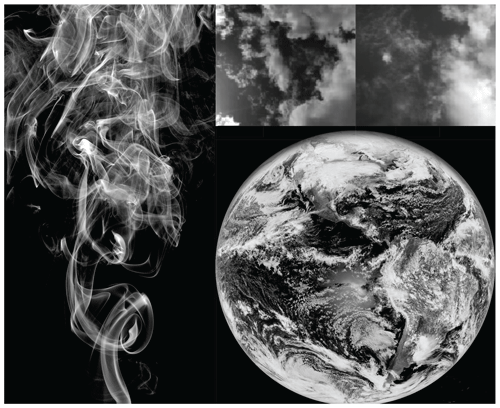

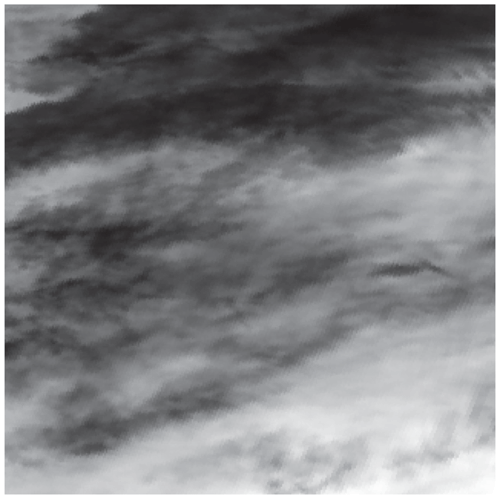

Perhaps the most obvious difficulty in understanding the atmosphere is in dealing with its enormous range of scales. The single picture in Fig. 1 shows clouds with horizontal spatial variability ranging from millimetres to the size of the planet, a factor of 10 billion in scale. In the vertical direction the range is more modest but still huge: about 10 million. The range of temporal variability is extreme, spanning a range of 100 billion billion: from milliseconds to the planet's age (Fig. 2).

The earliest approach to atmospheric variability was phenomenological: weather as a juxtaposition of various processes with characteristic morphologies, air masses, fronts, and the like. Circumscribed by the poor quality and quantity of the then available data, these were naturally associated with narrow-scale-range, mechanistic processes.

At first, ice ages, “medieval warming”, and other evidence of low-frequency processes were only vaguely discerned. Weather processes were thought to occur with respect to a relatively constant (and unimportant) background: climate was conceived as simply long-term “average” weather. It was not until the 1930s that the International Meteorological Organisation defined “climate normals” in an attempt to quantify the background “climate state”. The duration of the normals – 30 years – was imposed essentially by fiat: it conveniently corresponded to the length of high-quality data then available: 1900–1930. This 30-year duration is still with us today with the implicit consequence that – purely by convention – “climate change” occurs at scales longer than 30 years.

Interestingly, yet another official timescale for defining “anomalies” has been developed. Again, for reasons of convenience (and partly – for temperatures – due to the difficulty in making absolute measurements), anomalies are defined with respect to monthly averages. Ironically, a month wavers between 28 and 31 d: it is not even a well-defined unit of time.

The overall consequence of adopting, by convenience, monthly and 30-year timescales is a poorly theorized, inadequately justified division of atmospheric processes into three regimes: scales less than a month, a month up to 30 years, and a lumping together of all slower processes with timescales longer than 30 years. While the high-frequency regime is clearly “weather” and the slow processes – at least up to ice age scales – are “climate”, until Lovejoy (2013) the intermediate regime lacked even a name. Using scaling – and with somewhat different transition scales – the three regimes were finally put on an objective quantitative basis, with the middle regime baptized “macroweather”. By using scaling to quantitatively define weather, macroweather, and climate, we can finally objectively answer the question: how long does weather last? A bonus, detailed in Sect. 2, is that scaling analyses showed that what had hitherto been considered simply climate is itself composed of three distinct dynamical regimes. Rather than lumping all low frequencies together, we must also distinguish between macroclimate and megaclimate.

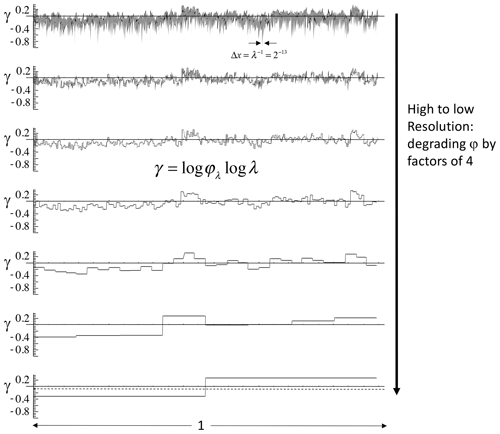

To review how scaling defines dynamical regimes, let us define scaling using fluctuations – for example of the temperature or of a component of the wind. For the moment, consider only one dimension, i.e. time series or spatial transects. Temporal scaling means that the amplitudes of fluctuations are proportional to their timescale raised to a scale-invariant exponent. For appropriately nondimensionalized quantities, writing Δf(Δt) for the fluctuation of a quantity f over a timescale Δt,

$$\Delta f(\Delta t) \propto \Delta t^{\zeta}. \qquad (1)$$

Every term in this equation needs an appropriate definition, but for now, consider the classical ones. First, the usual turbulence definition of a fluctuation is a difference (of temperature, wind components, etc.) taken over an interval in space or in time. This interval defines the timescale (Δt) or space scale (Δx) of the corresponding fluctuation. Also, classically, one considers the statistically averaged fluctuation (indicated by “〈〉”). If we decompose ζ into a random singularity γ and a non-random “fluctuation exponent” H, then the appropriately averaged fluctuation will also be scaling with

$$\langle \Delta f(\Delta t) \rangle \propto \Delta t^{H}, \qquad (2)$$

where the symbol 〈〉 indicates statistical (ensemble) averaging.
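As a minimal sketch of the classical definition (fluctuations as differences, averaged as in Eq. 2), the Python below estimates H by a least-squares fit of log 〈|Δf|〉 against log Δt. The test signal (a Gaussian random walk, for which H = 1/2) and the helper names are illustrative assumptions, not data or code from this review.

```python
import math
import random


def mean_abs_difference(series, lag):
    """Mean absolute difference fluctuation <|f(t + lag) - f(t)|> at one lag."""
    diffs = [abs(series[i + lag] - series[i]) for i in range(len(series) - lag)]
    return sum(diffs) / len(diffs)


def loglog_slope(xs, ys):
    """Least-squares slope of log(ys) against log(xs)."""
    lx = [math.log(x) for x in xs]
    ly = [math.log(y) for y in ys]
    mx, my = sum(lx) / len(lx), sum(ly) / len(ly)
    num = sum((a - mx) * (b - my) for a, b in zip(lx, ly))
    den = sum((a - mx) ** 2 for a in lx)
    return num / den


# Hypothetical test signal: a Gaussian random walk, whose fluctuation exponent is H = 1/2.
rng = random.Random(42)
walk = [0.0]
for _ in range(2 ** 16):
    walk.append(walk[-1] + rng.gauss(0.0, 1.0))

lags = [2 ** k for k in range(10)]  # 1, 2, 4, ..., 512 samples
fluctuations = [mean_abs_difference(walk, lag) for lag in lags]
H_estimate = loglog_slope(lags, fluctuations)
print(f"estimated H = {H_estimate:.2f}")
```

Averaging the absolute value is one common choice; averaging squared differences instead would estimate the second-order structure function, which is not the same exponent for a multifractal series.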

Later, in Sect. 2.5, fluctuations as differences (sometimes called “poor man's wavelets”) are replaced by (nearly as simple) Haar fluctuations based on Haar wavelets (see also Appendix B), and in Sect. 3, Eq. (1) is interpreted stochastically. Finally, in Sect. 4, the notion of scale itself is generalized by introducing a scale function that replaces the usual (Euclidean) distance function (metric). These anisotropic scale functions are needed to handle scale in 2D or higher spaces, especially with regard to stratification.
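A Haar fluctuation over an interval is, in its simplest form, the mean over the second half of the interval minus the mean over the first half. The Python below is a minimal sketch of that definition using non-overlapping windows; any calibration constant used in Appendix B is omitted, and the function name and white-noise test signal are hypothetical. The white noise (H = −1/2) shows the practical difference from plain differences: difference fluctuations of white noise do not decrease with scale, whereas Haar fluctuations fall off roughly as the inverse square root of the scale.

```python
import random


def mean_abs_haar(series, scale):
    """Mean absolute Haar fluctuation at an even `scale` (in samples).

    Over each non-overlapping window of length `scale`, the Haar fluctuation is
    the mean of the second half minus the mean of the first half.
    """
    if scale < 2 or scale % 2:
        raise ValueError("scale must be an even integer >= 2")
    half = scale // 2
    values = []
    for start in range(0, len(series) - scale + 1, scale):
        first = sum(series[start:start + half]) / half
        second = sum(series[start + half:start + scale]) / half
        values.append(abs(second - first))
    return sum(values) / len(values)


# Hypothetical test signal: Gaussian white noise (H = -1/2).
rng = random.Random(0)
noise = [rng.gauss(0.0, 1.0) for _ in range(2 ** 16)]
for scale in (2, 8, 32, 128, 512):
    print(scale, round(mean_abs_haar(noise, scale), 4))
```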

In atmospheric regimes where Eq. (1) holds, average fluctuations over durations λΔt are $\lambda^{H}$ times those at duration Δt; i.e. they differ only in their amplitudes, they are qualitatively of the same nature, and they are therefore part of the same dynamical regime. More generally (Eq. 1), appropriately rescaled probabilities of random fluctuations also have scale-invariant exponents (“codimensions”, Sect. 3), so that the entire statistical behaviour is scaling. Scaling therefore allows us to objectively identify the different atmospheric regimes.

Over the Phanerozoic eon (the last 540 Myr), the five scaling regimes are weather, macroweather, climate, macroclimate, and megaclimate (Lovejoy, 2015). Starting at around a millisecond (the dissipation time), this covers a total range of ≈ $10^{19}$ in scale (Sect. 2.5; Tuck (2022) argues that the true dissipation scale is much smaller: molecular scales). Scaling therefore gives an unequivocal answer to the question posed in the title: “How long does weather last?”. The answer is the lifetime of planetary structures, typically around 10 d (Sect. 2.6).
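The ≈ $10^{19}$ figure is simply the ratio of the outer to the inner timescale. A short Python check, assuming a Julian year of 365.25 d and taking the round values 540 Myr and 1 ms from the text:

```python
import math

SECONDS_PER_YEAR = 365.25 * 24 * 3600   # Julian year, in seconds
phanerozoic = 540e6 * SECONDS_PER_YEAR  # outer scale: ~540 Myr, in seconds
dissipation = 1e-3                      # inner scale: ~1 ms, in seconds

ratio = phanerozoic / dissipation
print(f"range of scales ≈ {ratio:.1e} (log10 ≈ {math.log10(ratio):.1f})")
# prints: range of scales ≈ 1.7e+19 (log10 ≈ 19.2)
```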

If the key statistical characteristics of the atmosphere at any given scale are determined by processes acting over wide ranges of scales – and not by a plethora of narrow-range ones – then we must conclude that the fundamental dynamical processes are in fact dynamical “regimes” – not uninteresting “backgrounds”. While there may also be narrow-range processes, they can only be properly understood in the context of the dynamical regime in which they operate, and in any event, spectral or other analysis shows that they generally contribute only marginally to the overall variability. The first task is therefore to define and understand the dynamical regimes and then – when necessary – the narrow-range processes occurring within them.

Figure 1. Cigarette smoke (left) showing wisps and filaments smaller than 1 mm up to about 1 m in overall size. The upper right shows two clouds, each several kilometres across with resolutions of 1 m or so. The lower right shows the global-scale arrangement of clouds taken from an infrared satellite image of the Earth with a resolution of several kilometres. Taken together, the three images span a range of several billion in spatial scale. Reproduced from Lovejoy (2019).

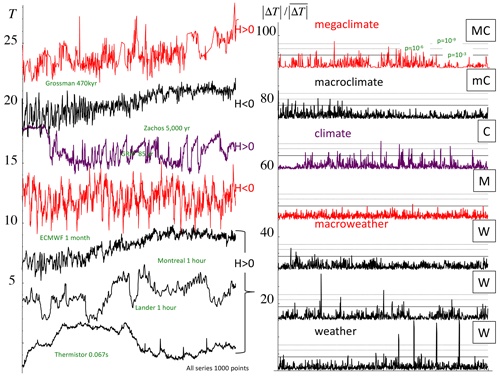

Figure 2. Left: 1000 points of various time series collectively spanning a range of scales from 0.067 s to 470 Myr (a factor of 2.4 × $10^{17}$); each series was normalized to have the same overall range and was offset vertically for clarity. The right-hand column shows the absolute first differences normalized by their mean. The solid horizontal line shows the maximum value expected for Gaussian variables ($p = 10^{-3}$), and the dashed lines show the corresponding $p = 10^{-6}$ and $10^{-9}$ probability levels. Representative series from each of the five scaling regimes were taken, with the addition of the hourly surface temperatures from Lander, Wyoming (bottom, detrended daily and annually). The Berkeley series was taken from a fairly well-estimated period before significant anthropogenic effects and was annually detrended. The top series was taken over a particularly data-rich epoch, but there are still traces of the interpolation needed to produce a series at a uniform resolution. The resolutions (indicated) were adjusted so that, as much as possible, the smallest scale was at the inner scale of the regime indicated. In the macroclimate regime, the inner scale was a bit too small and the series length a bit too long. The resulting megaclimate regime influence on the low frequencies was therefore removed using a linear trend of 0.25 δ¹⁸O Myr⁻¹. The resolutions and time periods are indicated next to the curves. The black curves have H > 0 and the red ones H < 0: see the parameter estimates in Appendix A. The figure is from Lovejoy (2018), updated only in the top megaclimate series, which is at a higher resolution than the previous one (from Grossman and Joachimski, 2022).

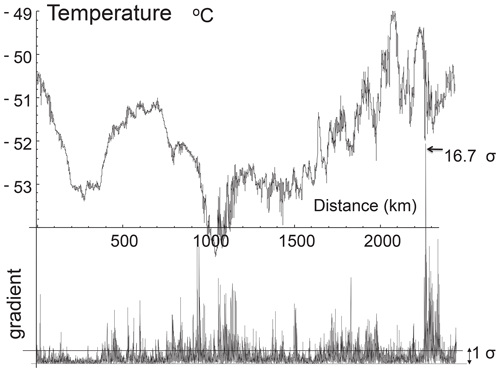

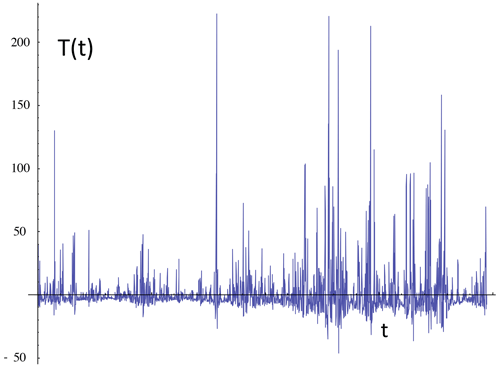

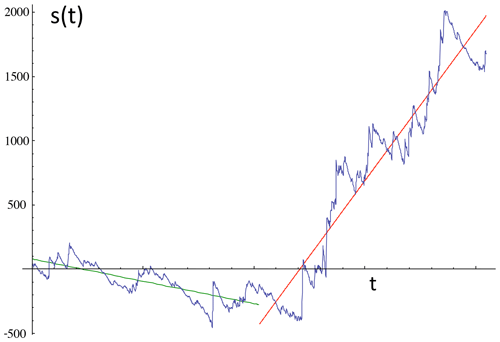

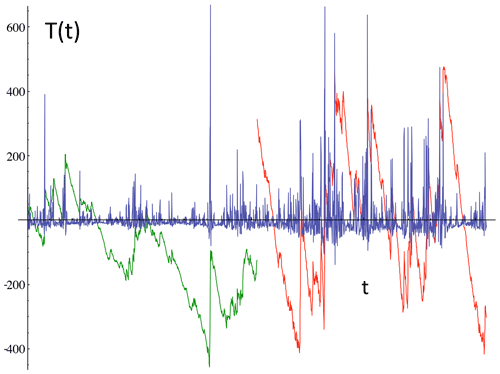

Figure 3. The first 8196 points of the temperature series measured by a Gulfstream 4 flight over the Pacific Ocean at 196 mb and 1 s resolution (corresponding to 280 m). Because the aircraft speed is much greater than the wind speed, this can be considered a spatial transect. The bottom shows the absolute change in temperature from one measurement to the next, normalized by dividing by the typical change (the standard deviation). This differs from the spike plot on the right-hand side of Fig. 2 only in the normalization: here by the standard deviation rather than by the mean absolute difference. Reproduced from Lovejoy and Schertzer (2013).

1.2 A multiscaling/multifractal complication

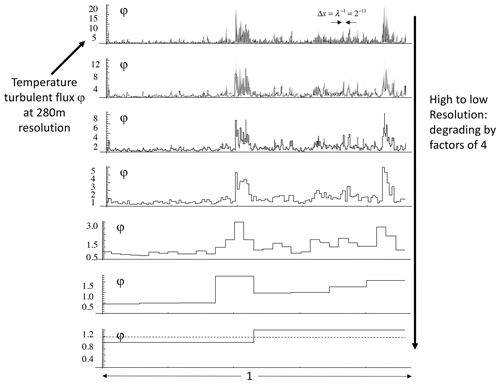

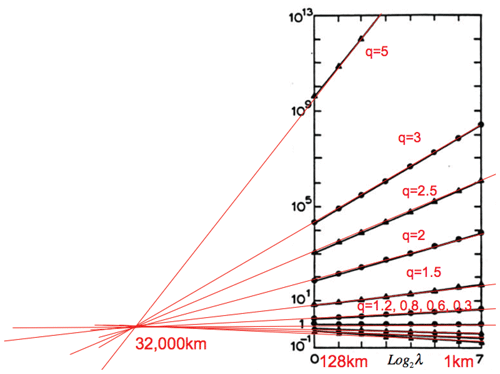

Before answering the quite different scaling question “How big is a cloud?”, it is first necessary to discuss a complication: that the scaling is different for every level of activity. It turns out that the wide range over which the variability occurs is only one of its aspects: even at fixed scales, the variability is much more extreme than is commonly believed. Interestingly, the extremeness of the variability at a fixed scale is a consequence of the wide range of scaling itself: it allows the variability to build up scale by scale in a multiplicative cascade manner. As a result, mathematically, the scaling of the average fluctuation (Eq. 2) gives only a partial view of the variability, and we need to consider Eq. (1) in its full stochastic sense. In particular, if the exponent ζ is random, then it is easy to imagine that the variability may be huge.

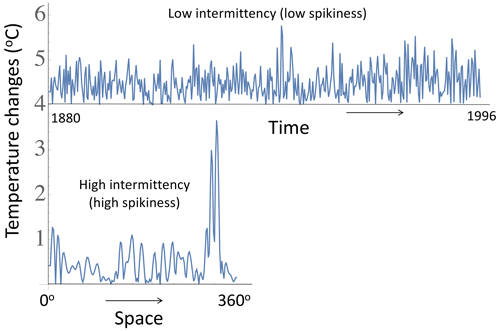

To see this graphically, it is sufficient to produce a “spike plot” (the right-hand column of Fig. 2 for time series and the bottom of Fig. 3 for a spatial transect). These spike plots are simply the absolute first differences in the values normalized by their overall means (in Fig. 3, the normalization is slightly different: by the standard deviation). In the right-hand column of Fig. 2 and the bottom of Fig. 3, we see – with a single but significant exception, macroweather in time (Fig. 2) – that they all have strong spikes signalling sharp transitions. In turbulence jargon, the series are highly “intermittent”.
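Computing a spike series takes only a few lines. The Python below is a minimal sketch of the Fig. 2 normalization (absolute first differences divided by their mean); the function name is hypothetical, and the Fig. 3 variant would divide by a standard deviation instead.

```python
def spike_series(series):
    """Absolute first differences divided by their mean (Fig. 2 normalization)."""
    diffs = [abs(b - a) for a, b in zip(series, series[1:])]
    mean_diff = sum(diffs) / len(diffs)
    return [d / mean_diff for d in diffs]


print(spike_series([0.0, 1.0, 3.0, 6.0]))  # prints: [0.5, 1.0, 1.5]
```

By construction the spikes average to 1, so a spike of height 10 is ten times the typical change, whatever the physical units of the original series.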

How strong are the spikes? Using classical (Gaussian) statistics, we may use probability levels to quantify them. For example, Fig. 2 (right) shows solid horizontal lines that indicate the maximum spike that would be expected from a Gaussian process with the given number of spikes. For the 1000 points in each series in Fig. 2, this line thus corresponds to a Gaussian probability $p = 10^{-3}$. In addition, horizontal dashed lines show spikes at levels $p = 10^{-6}$ and $p = 10^{-9}$. Again, with the exception of macroweather, we see that the $p = 10^{-6}$ level is exceeded in every series and that the megaclimate, climate, and weather regimes are particularly intermittent, with spikes exceeding the $p = 10^{-9}$ level. In Sect. 3 we show how the spikes can be tamed by multifractal theory and the maxima predicted reasonably accurately (Appendix A) by simply characterizing the statistics of the process near the mean (i.e. using their non-extreme behaviour).
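Where such Gaussian reference levels come from can be sketched as follows, assuming Gaussian increments and the mean-normalized spikes of Fig. 2: since spikes are absolute values, the tail is two-sided, and the mean absolute increment of a Gaussian is σ√(2/π). This is an illustrative reconstruction, not necessarily how the lines in the figure were computed; the function name is hypothetical.

```python
import math
from statistics import NormalDist


def gaussian_spike_level(p):
    """Spike level |dX|/<|dX|> exceeded with probability p by Gaussian increments dX.

    Two-sided, because spikes are absolute differences: P(|dX| > z*sigma) = p.
    """
    z = NormalDist().inv_cdf(1.0 - p / 2.0)
    mean_abs_over_sigma = math.sqrt(2.0 / math.pi)  # <|dX|> = sigma * sqrt(2/pi)
    return z / mean_abs_over_sigma


n_points = 1000  # p = 1/N gives the largest spike expected in N Gaussian points
for p in (1.0 / n_points, 1e-6, 1e-9):
    print(f"p = {p:.0e}: level ≈ {gaussian_spike_level(p):.2f}")
```

Under these assumptions, even the $p = 10^{-9}$ level is only around 7.7 times the mean spike, which is why spikes well above it are so incompatible with Gaussian statistics.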

The spikes visually underline the fact that variability is not simply a question of the range of scales that are involved: at any given scale, variability can be strong or weak. In addition, events can be highly clustered, with strong ones embedded inside weak ones and even stronger ones inside strong ones in a fractal pattern repeating to smaller and smaller scales. This fractal sparseness can itself become more and more accentuated for the more and more extreme events/regions: the series will generally be multifractal.
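The scale-by-scale build-up of variability can be illustrated with a discrete multiplicative cascade. The Python below is a minimal sketch assuming mean-one lognormal multipliers on a binary 1D tree (one common textbook choice, not necessarily the models of Sect. 3; the function name and parameters are hypothetical). Each cell inherits the product of all the multipliers above it, so strong values end up nested inside strong values.

```python
import math
import random


def lognormal_cascade(levels, sigma, seed=0):
    """Discrete 1D multiplicative cascade with mean-one lognormal multipliers.

    At each step every cell splits in two, and each half is multiplied by an
    independent factor W = exp(sigma*Z - sigma**2/2), Z ~ N(0, 1), so E[W] = 1.
    """
    rng = random.Random(seed)
    field = [1.0]
    for _ in range(levels):
        field = [
            value * math.exp(sigma * rng.gauss(0.0, 1.0) - sigma ** 2 / 2.0)
            for value in field
            for _ in range(2)
        ]
    return field


field = lognormal_cascade(levels=14, sigma=0.5)
mean = sum(field) / len(field)
print(len(field), round(max(field) / mean, 1))
```

Setting `sigma = 0` switches the intermittency off (every multiplier is 1); increasing it makes the extreme cells increasingly sparse and intense.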

1.3 How big is a cloud?

Scaling is also needed to answer the question “how big is a cloud?” (here “cloud” is taken as a catch-all term meaning an atmospheric structure or eddy). Now the problem is what we mean by “scale”. The series and transects in Figs. 2 and 3 are 1D, so that it is sufficient to define the scale of a fluctuation by the duration (time) or length (space) over which it occurs (actually, time involves causality, so that the sign of Δt is important; see Marsan et al. (1996): we ignore this issue here). However, the state of the atmosphere is mathematically represented by fields in 3D space evolving in time.

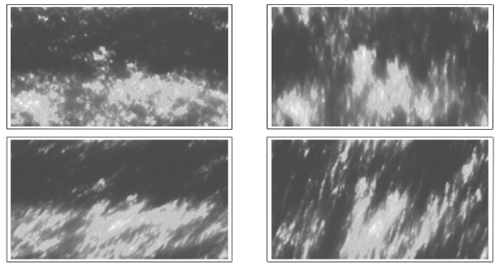

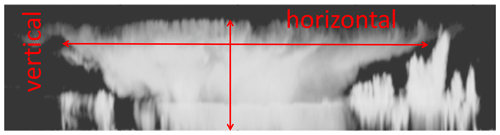

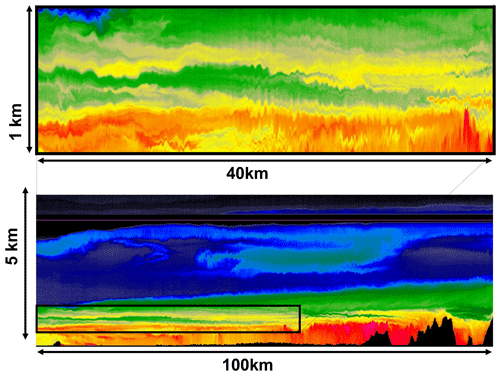

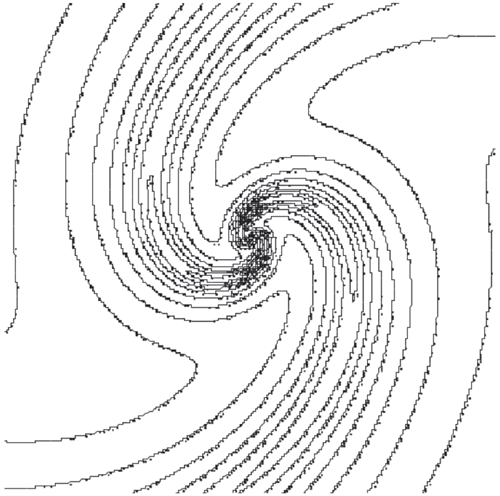

Consider Fig. 4, which displays a cloud vertical cross section from the CloudSat radar. In the figure, the gravitationally induced stratification is striking, and since each pixel in the figure has a horizontal resolution of 1 km but a vertical resolution of 250 m, the actual stratification is 4 times stronger than it appears. What is this cloud's scale? If we use the usual Euclidean distance to determine the scale, should we measure it in the horizontal or vertical direction? In this case, is the cloud scale its width (200 km) or its height (only ≈ 10 km)?

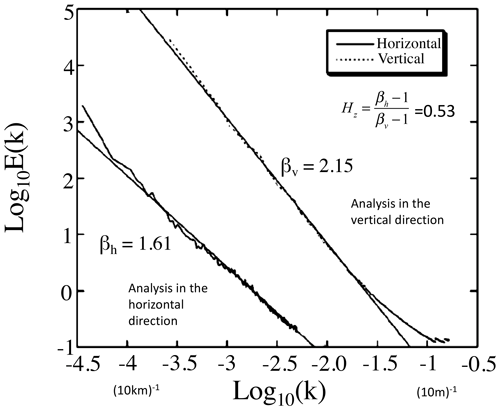

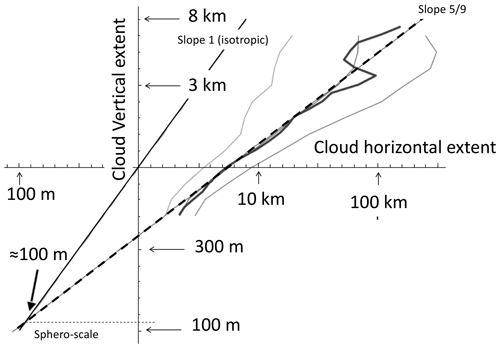

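If the horizontal–vertical aspect ratio were the same for all clouds, the two choices would be identical to within a constant factor, and the anisotropy would be “trivial”. The trouble is that the aspect ratio itself turns out to be a strong power-law function of (either) horizontal or vertical scale, so that, for any cloud,

$$\Delta z \propto \Delta x^{H_z}, \qquad \frac{\Delta z}{\Delta x} \propto \Delta x^{H_z - 1},$$

where Δx and Δz are the horizontal and vertical extents and $H_z$ is the stratification exponent.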

In Sect. 4, we will see that, theoretically, the stratification exponent is $H_z = 5/9$, a value that we confirm empirically on various atmospheric fields, including clouds.
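To make the consequence concrete, here is a minimal Python sketch assuming the power law Δz = l_s (Δx/l_s)^H_z with H_z = 5/9, where l_s, the scale at which structures are roundish (called the spheroscale in the Fig. 5 caption), is set to a hypothetical 1 m. The function names are illustrative. Each tenfold increase in Δx then shrinks the aspect ratio by a factor $10^{-4/9} \approx 0.36$, so there is no single aspect ratio that characterizes “the” cloud.

```python
def vertical_extent(dx, h_z=5 / 9, l_s=1.0):
    """Vertical extent dz = l_s * (dx / l_s)**h_z of a structure with horizontal extent dx."""
    return l_s * (dx / l_s) ** h_z


def aspect_ratio(dx, h_z=5 / 9, l_s=1.0):
    """Vertical/horizontal aspect ratio dz/dx; equal to 1 at the spheroscale dx = l_s."""
    return vertical_extent(dx, h_z, l_s) / dx


# Horizontal extents in metres, with a hypothetical spheroscale l_s = 1 m.
for dx in (1.0, 1e3, 1e5, 1e7):
    print(f"dx = {dx:.0e} m: dz = {vertical_extent(dx):.3g} m, dz/dx = {aspect_ratio(dx):.2g}")
```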

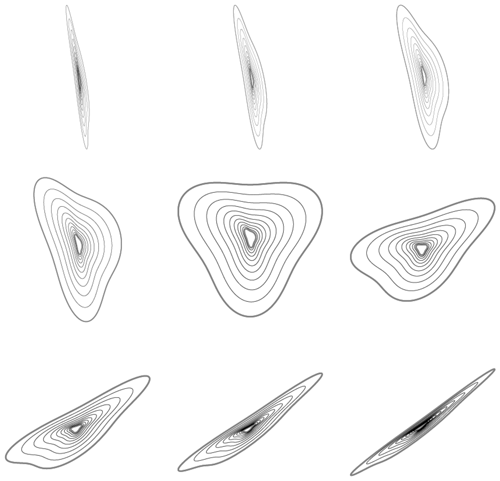

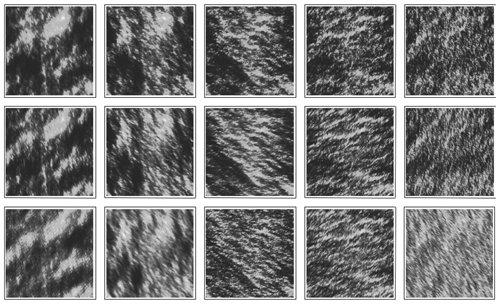

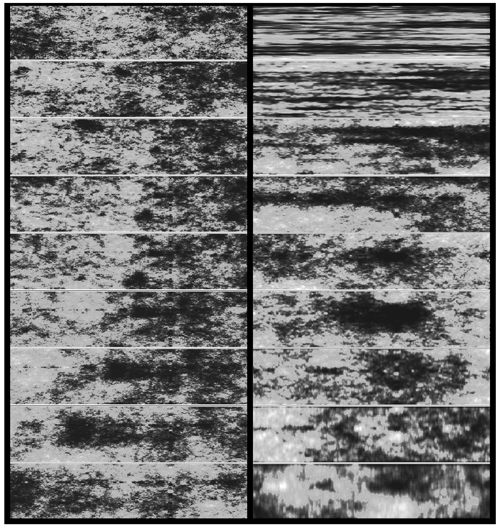

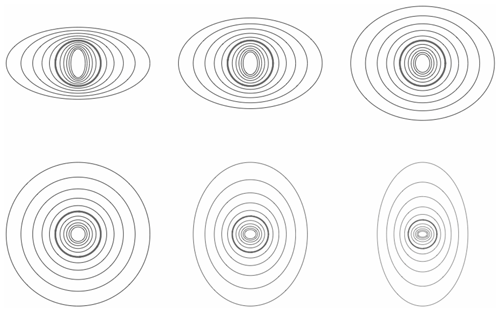

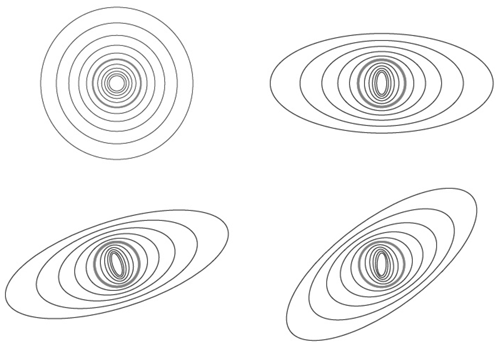

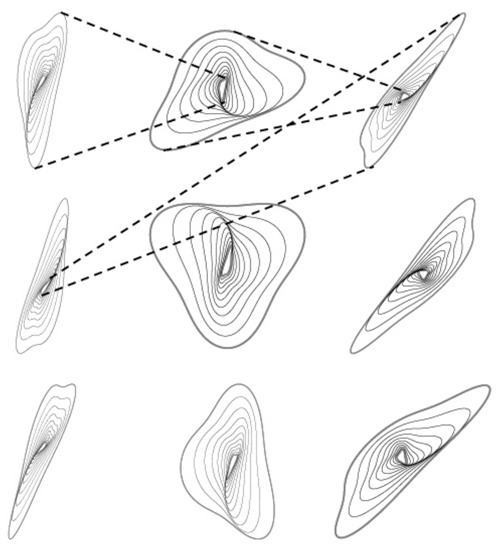

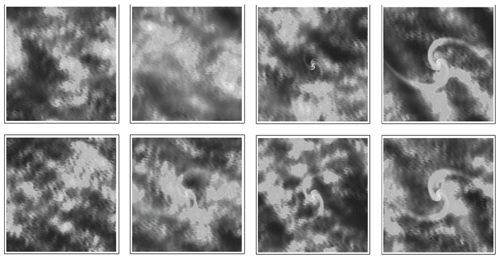

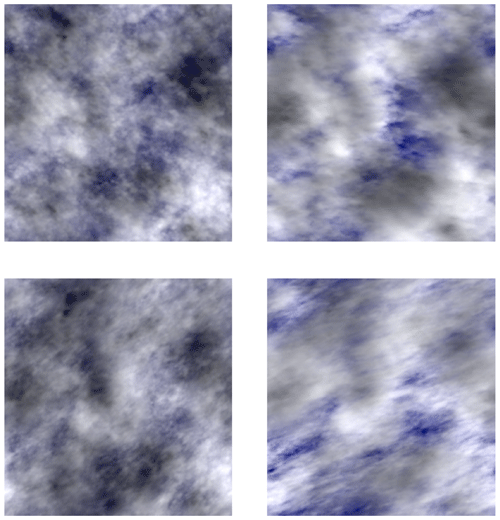

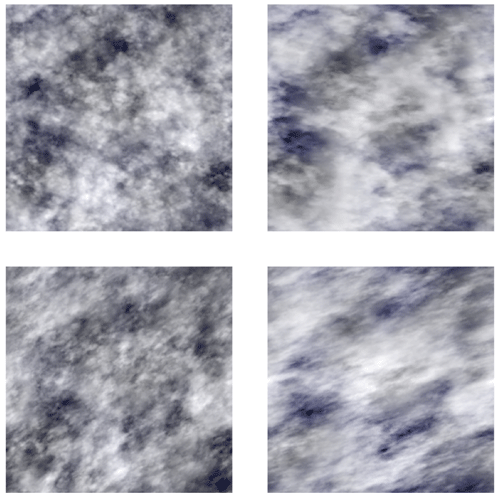

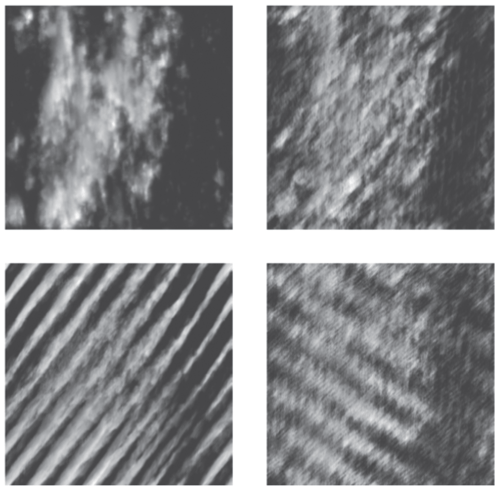

To further appreciate the issue, consider the simulation in Fig. 5 that shows a vertical cross section of a multifractal cloud liquid water density field. The left-hand-side column (top to bottom) shows a series of blow-ups in an isotropic (“self-similar”) cloud. Moving from top to bottom, blow-ups of the central regions by successive factors of 2.9 are displayed. In order for the cross sections to maintain a constant 50 % “cloud cover”, the density threshold distinguishing the cloud (white or grey) from the non-cloud (black) must be systematically adjusted to account for this change in resolution. This systematic readjustment of the threshold is required due to the multifractality, and with this adjustment, we see that the cross sections are self-similar; i.e. they look the same at all scales.
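The threshold readjustment can be sketched in one dimension. Assuming a 1D lognormal multiplicative cascade as a stand-in for the simulated liquid water field (the simulations in Fig. 5 are not reproduced here), the Python below degrades the resolution by block averaging and, at each resolution, takes the median as the threshold so that exactly half the cells count as “cloud”. The helper names and parameters are hypothetical.

```python
import math
import random
from statistics import median


def coarse_grain(field, factor):
    """Average non-overlapping blocks of `factor` cells (lower resolution)."""
    return [sum(field[i:i + factor]) / factor for i in range(0, len(field) - factor + 1, factor)]


def cover_fraction(field, threshold):
    """Fraction of cells classified as 'cloud' (value above the threshold)."""
    return sum(value > threshold for value in field) / len(field)


# Hypothetical test field: a 1D lognormal multiplicative cascade with 2**12 cells.
rng = random.Random(1)
sigma = 0.6
field = [1.0]
for _ in range(12):
    field = [
        v * math.exp(sigma * rng.gauss(0.0, 1.0) - sigma ** 2 / 2.0)
        for v in field
        for _ in range(2)
    ]

thresholds = {}
for factor in (1, 4, 16, 64):
    coarse = coarse_grain(field, factor)
    thresholds[factor] = median(coarse)  # threshold giving 50 % cover at this resolution
    print(factor, round(thresholds[factor], 3), cover_fraction(coarse, thresholds[factor]))
```

A fixed threshold would give a different cloud cover at each resolution; holding the cover at 50 % forces the threshold to change instead.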

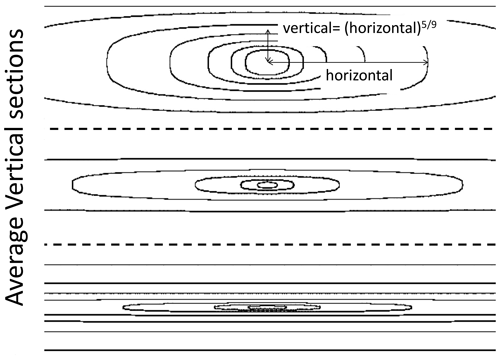

The effect of differential (scale-dependent) stratification is revealed in the right-hand column, which shows the analogous zoom through an anisotropic multifractal simulation with a stratification exponent $H_z = 5/9$. The low-resolution (top) view of the simulation is highly stratified in the horizontal. Now, the blow-ups reveal progressively more and more roundish structures. Eventually – with the bottom cross section (a blow-up of a total factor of ≈ 5000) – we can start to see vertically oriented structures “dangling” below more roundish ones.

In the isotropic simulations (left-hand side), the only difficulty in defining the size of the cloud is the multifractal problem of deciding, for each resolution, which threshold should be used to distinguish cloud from no cloud. However, in the more realistic anisotropic simulation on the right, there is an additional difficulty in answering the question of “how big is a cloud?” Should we use the horizontal or vertical cloud extent? It turns out (in Sect. 4) that, to ensure that the answer is well defined, we need a new notion of scale itself: generalized scale invariance (GSI).

Figure 4. A vertical cloud cross section of radar backscatter taken by the radar on the CloudSat satellite with resolutions of 250 m in the vertical and 1 km in the horizontal. The black areas are those whose radar reflectivities are below the radar's minimum detectable signal. The arrows show rough estimates of the horizontal and vertical extents of the cloud. The two differ by a factor of more than 10. How do they characterize the size of this cloud? Adapted from Lovejoy et al. (2009b).

Figure 5. Left column: a sequence “zooming” into a vertical cross section of an isotropic multifractal cloud (the density of liquid water was simulated and then displayed using false colours, with a grey sky below a low threshold). From top to bottom, we progressively zoom in by factors of 2.9 (total factor ≈ 1000). We can see that typical cloud structures are self-similar. In the right-hand column, showing a multifractal cloud with the same statistical parameters as on the left but anisotropic, the zoom is still by factors of 2.9 in the horizontal, but the structures are progressively “squashed” in the horizontal. Note that while at large scales the clouds are strongly horizontally stratified, when viewed close up they show structures in the opposite direction. The spheroscale is equal to the vertical scale in the right-most simulation in the bottom row. The film version of this and other anisotropic space–time multifractal simulations can be found at http://www.physics.mcgill.ca/~gang/multifrac/index.htm (last access: 14 July 2023). Reproduced from Blöschl et al. (2015).

1.4 Wide-range scaling and the scalebound and isotropic turbulence alternatives

1.4.1 Comparison with narrow-scale-range, “scalebound” approaches

The presentation and emphasis of this review reflect experience in recent years that has shown how difficult it is to shake traditional ways of thinking. In particular, traditional mechanistic meteorological approaches are based on a widely internalized but largely unexamined “scalebound” view that prevents scaling from being taken as seriously as it must be. As we will see (Sect. 2), the scalebound view persists in spite of its increasing divorce from the real world. Such a persistent divorce is only possible because practising atmospheric scientists rely almost exclusively on numerical weather prediction (NWP) models or general circulation models (GCMs), and these inherit the scaling symmetry from the atmosphere's primitive equations upon which they are built.

The problem with scaleboundedness is not so much that it does not fit the facts, but rather that it blinds us to promising alternative scaling approaches. New approaches are urgently needed. As argued in Lovejoy (2022a), climate projections based on GCMs are reaching diminishing returns, with the latest IPCC AR6 (Arias, Bellouin et al., 2021) uncertainty ranges larger than ever before: cf. the latest climate sensitivity range of 2–5.5 K rise in global temperature following a CO₂ doubling. This is currently more than double the range of expert judgement (2.5–4 K). New low-uncertainty approaches are thus needed, and scaling approaches based on direct stochastic scaling macroweather models are promising (Hébert et al., 2021b; Procyk et al., 2022).

1.4.2 “Scaling-primary” versus “isotropy-primary” turbulence approaches

There are also sticking points whose origin is in the other, statistical, turbulence strand of atmospheric science. Historically, turbulence theories have been built around two statistical symmetries: a scale symmetry (scaling) and a direction symmetry (isotropy). While these two are conceptually quite distinct, even today, they are almost invariably considered together in the special case called “self-similarity”, which is a basic assumption of theories and models of isotropic 2D and isotropic 3D turbulence. Formalizing scaling as a (nonclassical) symmetry principle clarifies the distinct nature of scale and direction symmetries. In the atmosphere, due to gravity (not to mention sources of differential rotation), there is no reason to assume that the scale symmetry is an isotropic one: indeed, atmospheric scaling is fundamentally anisotropic. The main unfortunate consequence of assuming isotropy is that it implies an otherwise unmotivated (and unobserved) scale break somewhere near the scale height (≈ 7.4 km).

As we show (Sect. 4), scaling accounts for both the stratification that systematically increases with scale and its intermittency. Taking into account gravity in the governing equations provides an anisotropic scaling alternative to quasi-geostrophic turbulence (“fractional vorticity equations”; see Schertzer et al., 2012). The argument in this review is thus that scaling is the primary scale symmetry: it takes precedence over other symmetries such as isotropy. Indeed, it seems that isotropic turbulence is simply not relevant in the atmosphere (Lovejoy et al., 2007).

1.5 The scope and structure of this review

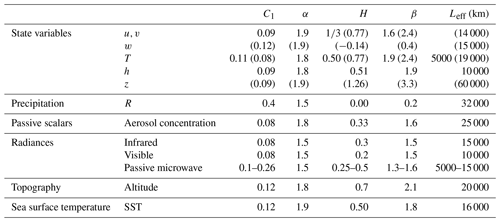

This review primarily covers scaling research over the last 4 decades, especially multifractals, generalized scale invariance, and their now extensive empirical validations. This work involved theoretical and technical advances, revolutions in computing power, the development of new data analysis techniques, and the systematic exploitation of mushrooming quantities of geodata. The basic work has already been the subject of several reviews (Lovejoy and Schertzer, 2010c, 2012b) and, especially, of a monograph (Lovejoy and Schertzer, 2013). Although a book covering some of the subsequent developments was published more recently (Lovejoy, 2019), it was nontechnical, so this new review brings its first four chapters up to date and includes some of the theory and mathematics that were deliberately omitted there so as to render the material more accessible. The last three chapters of Lovejoy (2019) focused on developments in the climate-scale (and lower-frequency) regimes that will be reviewed elsewhere. The present review is thus limited to the (turbulent) weather regime and its transition to macroweather at scales of ≈ 10 d.

In order to maintain focus on the fundamental physical scaling issues and implications, the mathematical formalism is introduced progressively – as needed – so that it will not be an obstacle to accessing the core scientific ideas.

This review also brings to the fore several advances that have occurred in the last 10 years, especially Haar fluctuation analysis (developed in detail in Appendix B), and a more comprehensive criticism of scalebound approaches made possible by combining Haar analysis with new high-resolution instrumental and paleodata sources (Lovejoy, 2015). On the other hand, it leaves out an emerging body of work on macroweather modelling based on the fractional energy balance equation for both prediction and climate projections (Del Rio Amador and Lovejoy, 2019, 2021a, b; Procyk et al., 2022) as well as their implications for the future of climate modelling (Lovejoy, 2022a).

The presentation is divided into three main sections. Keeping the technical and mathematical aspects to a minimum, Sect. 2 focuses on a foundational atmospheric science issue: what is the appropriate conceptual and theoretical framework for handling the atmosphere's variability over huge ranges of scales? It discusses how the classical scalebound approach is increasingly divorced from real-world data and numerical models. Scaling is discussed but with an emphasis on its role as a symmetry principle. It introduces fluctuation analysis based on Haar fluctuations that allow for a clear quantitative empirical overview of the variability over 17 orders of magnitude in time. Scaling is essential for defining the basic dynamical regimes, underlining the fact that between the weather and the climate sits a new macroweather regime.

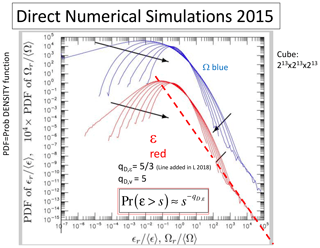

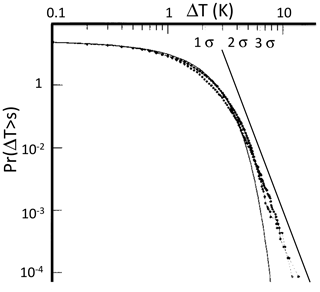

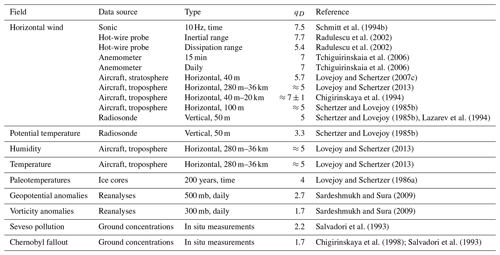

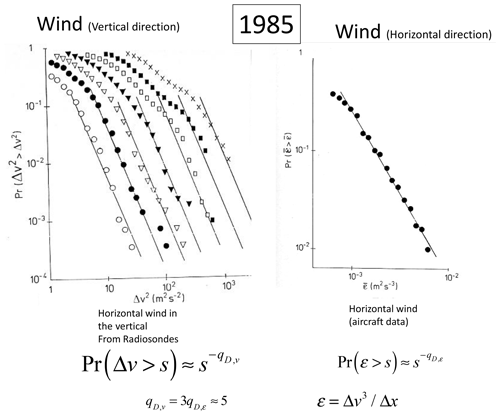

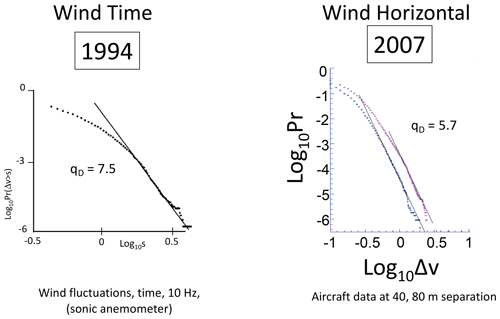

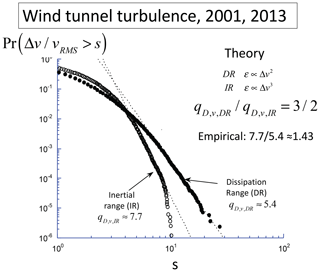

Section 3 discusses the general scaling process: multifractals. Multifractals naturally explain and quantify the ubiquitous intermittency of atmospheric processes. The section also discusses an underappreciated consequence, the divergence of high-order statistical moments – equivalently power-law probability tails – and relates this to “tipping points” and “black swans”. The now large body of evidence for the divergence of moments is discussed, and special attention is paid to the velocity field, where the divergence of moments was first empirically shown 40 years ago in the atmosphere, then in wind tunnels, and most recently in large direct numerical simulations of hydrodynamic turbulence.
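The divergence of high-order moments can be illustrated with a simple power-law tail. Assuming a Pareto variable with P(X > x) = x^(−α) for x ≥ 1 (a stand-in for illustration, not a multifractal process), moments of order q < α converge to α/(α − q), whereas sample moments of order q ≥ α have no finite limit and are dominated by the rarest, largest values. The tail exponent value and the function names are hypothetical; `random.Random.paretovariate` is the standard-library Pareto generator.

```python
import random


def analytic_moment(q, alpha):
    """E[X**q] for a Pareto variable with P(X > x) = x**-alpha, x >= 1 (infinite if q >= alpha)."""
    return alpha / (alpha - q) if q < alpha else float("inf")


def sample_moment(samples, q):
    """Empirical estimate of E[X**q]."""
    return sum(x ** q for x in samples) / len(samples)


alpha = 3.0  # hypothetical tail exponent: moments of order q >= 3 diverge
rng = random.Random(7)
for n in (10 ** 3, 10 ** 4, 10 ** 5):
    xs = [rng.paretovariate(alpha) for _ in range(n)]
    # The q = 1 estimate settles near 1.5; the q = 4 estimate has no limit to settle on.
    print(n, round(sample_moment(xs, 1.0), 3), round(sample_moment(xs, 4.0), 1))

print("analytic:", analytic_moment(1.0, alpha), analytic_moment(4.0, alpha))
```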

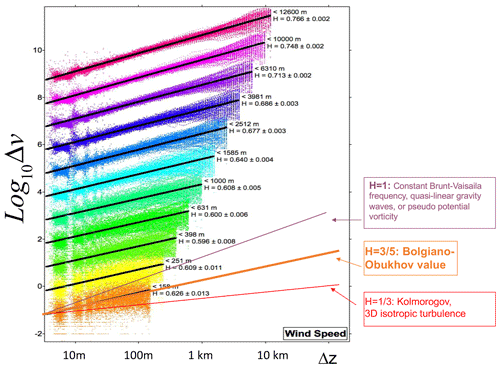

In Sect. 4 a totally different aspect of scaling is covered: anisotropic scaling, notably scaling stratification. The section outlines the formalism of GSI needed to define the notion of scale in anisotropic scaling systems. By considering buoyancy-driven turbulence, the 23/9D model is derived: it is a consequence of Kolmogorov scaling in the horizontal and Bolgiano–Obukhov scaling in the vertical. This model is “in between” flat 2D isotropic turbulence and “voluminous” isotropic 3D turbulence – it is strongly supported by now burgeoning quantities of atmospheric data. It not only allows us to answer the question “how big is a cloud?”, but also to understand and model differentially rotating structures needed to quantify cloud morphologies.
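The exponent behind the model's name can be checked with a short derivation, assuming pure Kolmogorov horizontal and Bolgiano–Obukhov vertical scaling of velocity fluctuations (exponents 1/3 and 3/5) and ignoring intermittency corrections. A structure of horizontal extent Δx and vertical extent Δz has the same velocity fluctuation in both directions, and its volume then grows as if it had 1 + 1 + H_z dimensions (often called the elliptical dimension):

```latex
\begin{aligned}
\Delta v(\Delta x) &\propto \Delta x^{1/3} && \text{(Kolmogorov, horizontal)}\\
\Delta v(\Delta z) &\propto \Delta z^{3/5} && \text{(Bolgiano--Obukhov, vertical)}\\
\Delta x^{1/3} &\propto \Delta z^{3/5} \;\Rightarrow\; \Delta z \propto \Delta x^{H_z},
  \quad H_z = \frac{1/3}{3/5} = \frac{5}{9}\\
D_{\mathrm{el}} &= 1 + 1 + H_z = \frac{23}{9}
\end{aligned}
```

Since $H_z < 1$, vertical extents grow more slowly than horizontal ones, which is the scale-dependent stratification discussed in Sect. 1.3.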

2.1 The scalebound view examined

In the introduction, the conventional paradigm based on (typically deterministic) narrow-range explanations and mechanisms was contrasted with the alternative scaling paradigm that builds statistical models expressing the collective behaviour of large numbers of degrees of freedom and that provides explanations over huge ranges of scales.

Let us consider the narrow-range paradigm in more detail. It follows in the steps of van Leeuwenhoek, who – peering through an early microscope – was famously said to have discovered a “new world in a drop of water” – microorganisms (circa 1675). Over time, this evolved into a “powers of ten” view (Boeke, 1957) in which every factor of 10 or so of zooming revealed qualitatively different processes and morphologies. Mandelbrot (1981) termed this view “scalebound” (written as one word), which is a useful shorthand for the idea that every factor of 10 or so involves something qualitatively new: a new world, new mechanisms, new morphologies, etc.

The first weather maps were at extremely low spatial resolution, so that only a rather narrow range of phenomena could be discerned. Unsurprisingly, the corresponding atmospheric explanations and theories were scalebound. Later, in the 1960s and 1970s under the impact of new data, especially in the mesoscale, the ambient scalebound paradigm was quantitatively made explicit in space–time Stommel diagrams (discussed at length in Sect. 2.6) in which various conventional mechanisms, morphologies, and phenomena were represented by the space scales and timescales over which they operate. For a recent inventory of scalebound mechanisms from seconds to decades, see Williams et al. (2017).

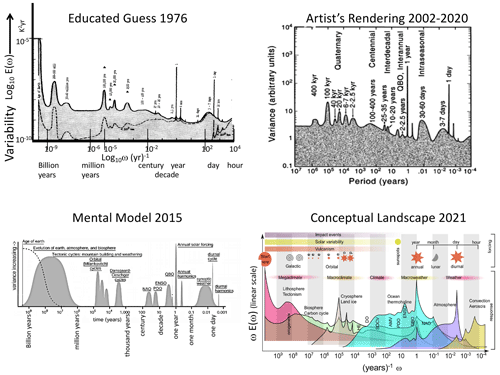

While Stommel diagrams reflected scalebound thinking, the goal was the modest one of organizing and classifying existing empirical phenomenology, and it did this in the light of the prevailing mechanistic analytic dynamical meteorology. It was Mitchell (1976), writing at the dawn of the paleoclimate revolution, who, more than anyone, ambitiously elevated the scalebound paradigm into a general framework spanning a range of scales from (at least) an hour to the age of the planet (a factor of tens of trillions, upper left, Fig. 6). Mitchell's data were limited, and he admitted that his spectrum was only an “educated guess”. He imagined that, when the data became available, their spectra would consist of an essentially uninteresting white-noise “background” interspersed with interesting quasi-periodic signals representing the important physical processes. Ironically, Mitchell's scalebound paradigm was proposed at the same time as the first GCMs (Manabe and Wetherald, 1975). Fortunately, the GCMs are scaling, inheriting the symmetry from the governing equations (Schertzer et al., 2012); see Chap. 2 of Lovejoy and Schertzer (2013).

Mitchell's schematic (upper-left panel) was so successful that, more than 4 decades later, his original figure is still faithfully reproduced (e.g. Dijkstra, 2013) or replaced by very similar scalebound schematics with only minor changes. Even though the relevant geodata have since mushroomed, the updates notably have less quantification and weaker empirical support than the original. The 45-year evolution of the scalebound paradigm is shown in the other panels of Fig. 6. Moving to the right of the figure, there is a 25-year update, modestly termed an “artist's rendering” (Ghil, 2002). This figure differs from the original in the excision of the lowest frequencies and by the inclusion of several new multimillennial-scale “bumps”. In addition, whereas Mitchell's spectrum was quantitative, the artist's rendering retreated to using “arbitrary units”, making it more difficult to verify empirically. Nearly 20 years later, the same author approvingly reprinted it in a review (Ghil and Lucarini, 2020).

As time passed, the retreat from quantitative empirical assessments continued, so that the scalebound paradigm has become more and more abstract. The bottom left of Fig. 6 shows an update downloaded from the NOAA paleoclimate data site in 2015, where it is described as a “mental model”. Harkening back to Boeke (1957), the site went on to state that the figure is “intended … to provide a general “powers of ten” overview of climate variability”. Here, the vertical axis is simply “variability”, and the uninteresting background – presumably a white noise – is shown as a perfectly flat line.

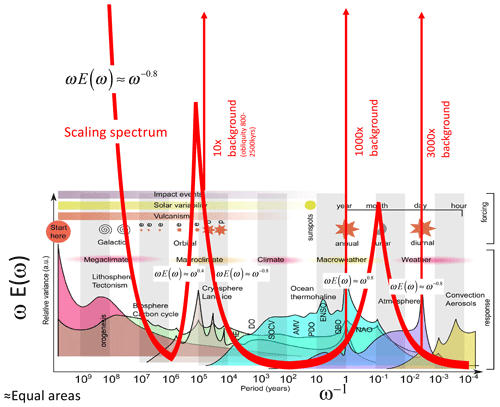

At about the same time, Lovejoy (2015) pointed out that Mitchell's original figure was in error by an astronomical factor (Sect. 2.4), so that – in an effort to partially address the criticism – an update in the form of a “conceptual landscape” was proposed (Fig. 6, bottom right, von der Heydt et al., 2021). Rather than plotting the log of the spectrum E(ω) as a function of the log frequency ω, the “landscape's” main innovation was the use of the unitless “relative variance” ωE(ω), plotted on a linear vertical axis against log ω (bottom right of the figure). Such plots have the property that areas under the curves are equal to the total variance contributed over the corresponding frequency range. Before returning to these schematics, let us discuss the scaling alternative.
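The equal-area property follows from d(ln ω) = dω/ω, so that the area under ωE(ω) plotted against ln ω equals the integral of E(ω) dω over the same band. The following is a quick numerical check for a hypothetical power-law spectrum; the exponent and band limits are arbitrary illustrative choices, not values taken from Fig. 6.

```python
import numpy as np

def trapezoid(y, x):
    """Trapezoidal-rule integral of the samples y(x)."""
    return np.sum((y[1:] + y[:-1]) * np.diff(x)) / 2.0

# Hypothetical spectrum: a pure power law E(omega) = omega**(-beta)
# over an arbitrary frequency band (illustrative values only).
beta = 1.8
w_lo, w_hi = 1e-3, 1e1
w = np.logspace(np.log10(w_lo), np.log10(w_hi), 200_001)
E = w ** (-beta)

# Variance in the band: the integral of E(omega) d(omega) ...
var_direct = trapezoid(E, w)

# ... equals the area under omega*E(omega) plotted against ln(omega).
# Against log10(omega) the area would be smaller by the constant factor ln 10.
var_landscape = trapezoid(w * E, np.log(w))

# Closed-form integral of omega**(-beta) between the band limits.
var_exact = (w_lo ** (1 - beta) - w_hi ** (1 - beta)) / (beta - 1)
print(var_direct, var_landscape, var_exact)
```

The same check works for any integrable E(ω); the power law is used only because its integral has a closed form.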

Figure 6. The evolution of the scalebound paradigms of atmospheric dynamics (1976–2021). The upper-left “educated guess” is from Mitchell (1976), and the upper-right “artist's rendering” is from Ghil (2002) and Ghil and Lucarini (2020). The lower left shows the NOAA's “mental model” (downloaded from the site in 2015), and the lower right shows the “conceptual landscape” from von der Heydt et al. (2021).

2.2 The scaling alternative

2.2.1 Scaling as a symmetry

Although scaling in atmospheric science goes back to Richardson in the 1920s, it was Mandelbrot's fractal geometry of nature (Mandelbrot, 1977, 1982) that first proposed scaling as a broad alternative to the scalebound paradigm. Alongside deterministic chaos and nonlinear waves, fractals rapidly became part of the nascent nonlinear revolution. In contrast to scaleboundedness, scaling supposes that zooming results in something that is qualitatively unchanged.

Although Mandelbrot emphasized fractal geometry, i.e. the scaling of geometrical sets of points, it soon became clear (Schertzer and Lovejoy, 1985c) that the physical basis of scaling (more generally scaling fields and scaling processes) is in fact a scale-symmetry principle – effectively a scale-conservation law that is respected by many nonlinear dynamical systems, including those governing fluids (Schertzer and Lovejoy, 1985a, 1987; Schertzer et al., 2012).

Scaling is seductive because it is a symmetry. Ever since Noether published her eponymous theorem (Noether, 1918) demonstrating the equivalence between symmetries and conservation laws, physics has been based on symmetry principles. Thanks to Noether's theorem, once scaling is formulated as a general symmetry principle, the scaling is the physics. Symmetry principles represent a kind of maximal simplicity, and since “entities must not be multiplied beyond necessity” (Occam's razor), physicists always assume that symmetries hold unless there is evidence for symmetry breaking.

In the case of fluids, we can verify this symmetry on the equations, as implemented for example in GCMs (e.g. Stolle et al., 2009, 2012, and the discussion in Sect. 2.2.3), but only for scales larger than the (millimetric) dissipation scales, where the symmetry is broken and mechanical energy is converted into heat. This is true for Navier–Stokes turbulence; however, the atomic-scale details are not fully clear, and Kadau et al. (2010) and Tuck (2008, 2022) argue that scaling can continue to much smaller scales. The scaling is also broken at the large scales by the finite size of the planet. In between, boundary conditions such as the ocean surface or topography might potentially have broken the scaling, but in fact they turn out to be scaling themselves and so do not introduce a characteristic scale (e.g. Gagnon et al., 2006).
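To see what verifying the symmetry on the equations involves, here is a minimal worked example under a simplifying assumption of ours: the incompressible, constant-density Navier–Stokes equations rather than the full primitive equations used in GCMs,

$$\frac{\partial \mathbf{u}}{\partial t} + (\mathbf{u}\cdot\nabla)\,\mathbf{u} = -\frac{1}{\rho}\nabla p + \nu\nabla^{2}\mathbf{u}.$$

Under the rescaling $\mathbf{x}\to\lambda\mathbf{x}$, $\mathbf{u}\to\lambda^{h}\mathbf{u}$, $t\to\lambda^{1-h}t$, $p\to\lambda^{2h}p$ (with ρ and ν held fixed), the terms are multiplied by

$$\underbrace{\frac{\partial \mathbf{u}}{\partial t}}_{\lambda^{2h-1}}\qquad \underbrace{(\mathbf{u}\cdot\nabla)\,\mathbf{u}}_{\lambda^{2h-1}}\qquad \underbrace{\frac{1}{\rho}\nabla p}_{\lambda^{2h-1}}\qquad \underbrace{\nu\nabla^{2}\mathbf{u}}_{\lambda^{h-2}}$$

The inertial and pressure terms pick up the same factor for any h, so the inviscid equations are unchanged by the rescaling. The viscous term matches them only for h = −1; equivalently, the Reynolds number UL/ν changes by the factor λ^{1+h}. In this simplified setting, viscosity is what breaks the symmetry, consistent with scaling holding only well above the dissipation scale.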

In the atmosphere, one therefore expects scaling: it should hold unless processes can be identified that act preferentially, and strongly enough, at specific scales to break it. This turns the tables on scalebound thinking: if we can explain the atmosphere's structure in a scaling manner, then this is the simplest explanation and should a priori be adopted. The onus must be on the scalebound approach to demonstrate the inadequacy of scaling and the need to replace the hypothesis of a unique wide scaling-range regime by (potentially numerous) distinct scalebound mechanisms.

Once a scaling regime is identified – either theoretically or empirically (preferably by a combination of both) – it is associated with a single basic dynamical mechanism that repeats scale after scale over a wide range, and hence it provides an objective classification principle.

2.2.2 Wide-range scaling in atmospheric science

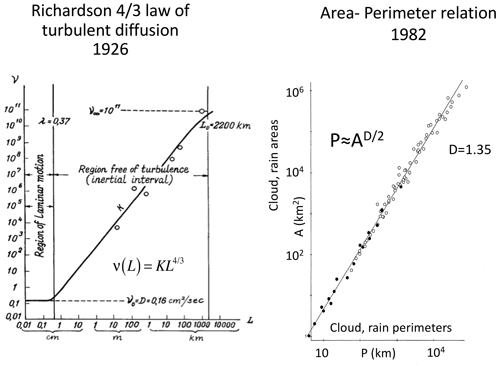

The atmospheric scaling paradigm is almost as old as numerical weather prediction, both being proposed by Richardson in the 1920s. Indeed, ever since Richardson's scaling law of turbulent diffusion (Richardson, 1926 – the precursor of the better-known Kolmogorov law, Kolmogorov, 1941), scaling has been the central turbulence paradigm.

From the beginning, Richardson argued for a wide-range scaling holding from millimetres to thousands of kilometres (Fig. 7). Richardson himself attempted an empirical verification, notably using data from pilot balloons and volcanic ash (and later – in the turbulent ocean – with bags of parsnips that he watched diffusing from a pier on Loch Lomond; Richardson and Stommel, 1948). However, there remained a dearth of data spanning the key “mesoscale” range ≈ 1–100 km corresponding to the atmosphere's scale height, so that for several decades following Richardson, progress in atmospheric turbulence was largely theoretical. In particular, in the 1930s the turbulence community made rapid advances in understanding the simplified isotropic turbulence problem, notably the Karman–Howarth equations (Karman and Howarth, 1938) and the discovery of numerous isotropic scaling laws for passive scalar advection and for mechanically driven and buoyancy-driven turbulence. At first, Kolmogorov and the other pioneers recognized that atmospheric stratification strongly limited the range of applicability of isotropic laws. Kolmogorov, for example, estimated that his famous law of 3D isotropic turbulence would only hold at scales below 100 m. As discussed in Sect. 4, modern data show that this was a vast overestimate: if his isotropic law ever holds anywhere in the atmosphere, it is below 5 m. However, at the same time, in the horizontal, the anisotropic generalization of the Kolmogorov law apparently holds up to planetary scales.

In the 1970s, motivated by Charney's isotropic 2D geostrophic turbulence (Charney, 1971), the ambitious “EOLE” experiment was undertaken specifically to study large-scale atmospheric turbulence. EOLE (named for the Greek wind god) used a satellite to track the diffusion of hundreds of constant-density balloons (Morel and Larchevêque, 1974), but the results turned out to be difficult to interpret. Worse, the initial conclusions – that the mesoscale wind did not follow the Kolmogorov law – turned out to be wrong; they were later re-interpreted (Lacorta et al., 2004) and then re-interpreted again (Lovejoy and Schertzer, 2013), finally vindicating Richardson nearly 90 years later.

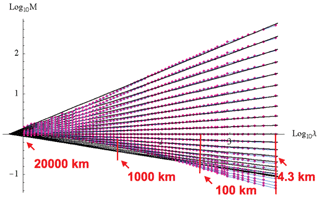

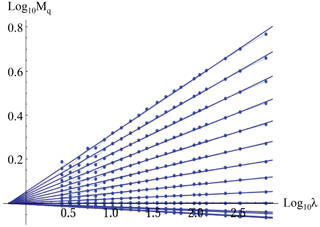

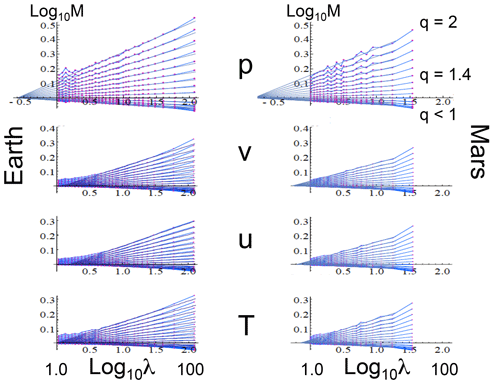

Therefore, when Lovejoy (1982), benefitting from modern radar and satellite data, discovered scaling right through the mesoscale (Fig. 7, right), it was the most convincing support to date for Richardson's daring 1926 wide-range scaling hypothesis. Although at first it was mostly cited for its empirical verification that clouds were indeed fractals, today, 40 years later, we increasingly appreciate its vindication of Richardson's scaling from 1 to 1000 km, right through the mesoscale. It marks the beginning of modern scaling theories of the atmosphere. This has since been confirmed by massive quantities of remotely sensed and in situ data, both on Earth (Fig. 8) and more recently on Mars (Fig. 9, discussed in detail in Sect. 3.4).

Figure 7. Richardson's pioneering scaling model (Richardson, 1926) of turbulent diffusion (left), with an early update (Lovejoy, 1982) (right) using radar rain data (black) and satellite cloud data (open circles).

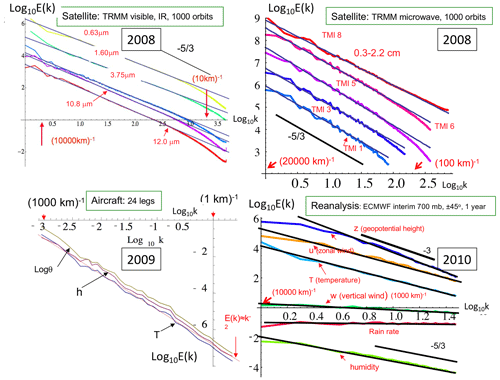

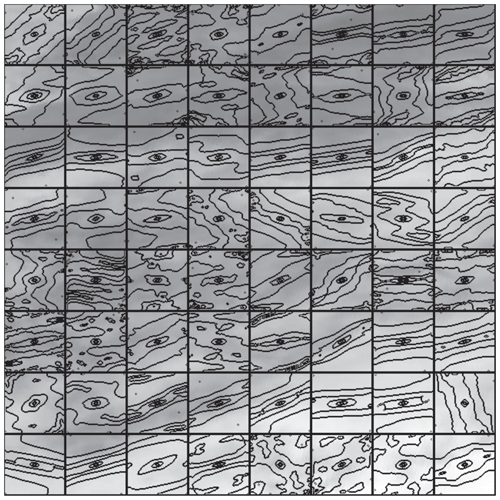

Figure 8. Planetary-scale power-law spectra from satellite radiances (top), aircraft (bottom left), and reanalyses (bottom right). Upper right: spectra from over 1000 orbits of the Tropical Rainfall Measurement Mission (TRMM), of five channels from visible through thermal IR wavelengths, displaying very accurate scaling down to scales of the order of the sensor resolution (≈ 10 km). Adapted from Lovejoy et al. (2008b). Upper left: spectra from five other (microwave) channels from the same satellite. These data are at lower resolution, which depends on the wavelength; again, the scaling is accurate down to the resolution. Adapted from Lovejoy et al. (2008b). Lower left: the spectrum of temperature (T), humidity (h), and log potential temperature (logθ) averaged over 24 legs of aircraft flight over the Pacific Ocean at 200 mb. Each leg had a resolution of 280 m and had 4000 points (1120 km). A reference line corresponding to the k⁻² spectrum is shown in red. The mesoscale (1–100 km) lies right in the middle of the figure, yet no break is visible there. Adapted from Lovejoy et al. (2010). Lower right: zonal spectra of reanalyses from the European Centre for Medium-Range Weather Forecasts (ECMWF), once daily for the year 2008 over the band ±45° latitude. Adapted from Lovejoy and Schertzer (2011).

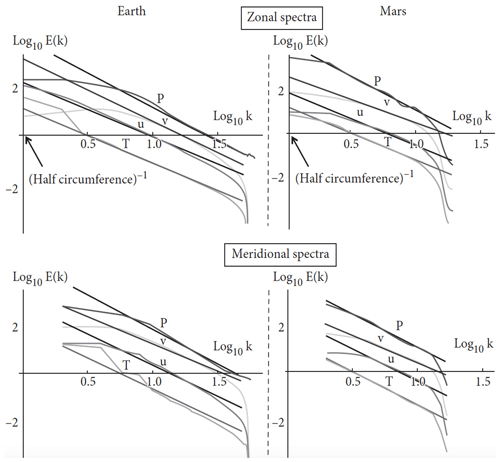

Figure 9. Earth (left) and Mars (right). The zonal spectra (top right) of Mars as functions of the nondimensional wavenumbers for pressure (p, purple), meridional wind (v, green), the zonal wind (u, blue), and temperature (T, red). The data for Earth were taken for the year 2006 at 69 % atmospheric pressure between latitudes ±45°. The data for Mars were taken at 83 % atmospheric pressure for Martian years 24 to 26 between latitudes ±45°. The reference lines (in all the plots) have absolute slopes, from top to bottom, of β = 3.00, 2.05, 2.35, and 2.35 (for p, v, u, and T, respectively). The spectra have been rescaled and an offset added for clarity. Wavenumber k = 1 corresponds to the half-circumference of the respective planets. Reproduced from Chen et al. (2016).

2.2.3 Which is more fundamental: scaling or isotropy?

In Sect. 2.1, we discussed the debate between scaling and mechanistic, generally deterministic, scalebound approaches. However, even in the statistical (turbulence) strand of atmospheric science, there evolved an alternative to Richardson's wide-range scaling: the paradigm of isotropic turbulence.

In the absence of gravity (or another strong source of anisotropy), the basic isotropic scaling property of the fluid equations has been known for a long time (Taylor, 1935; Karman and Howarth, 1938). The scaling symmetry justifies the numerous classical fluid dynamics similarity laws (e.g. Sedov, 1959), and it underpins models of statistically isotropic turbulence, notably the classical turbulence laws of Kolmogorov (Kolmogorov, 1941), Bolgiano and Obukhov (buoyancy-driven, Sect. 4.1) (Bolgiano, 1959; Obukhov, 1959), and Corrsin and Obukhov (passive scalar) (Corrsin, 1951; Obukhov, 1949).

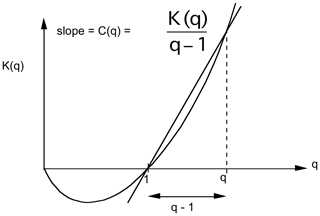

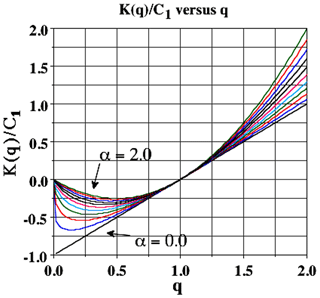

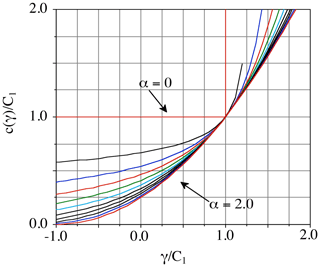

These classical turbulence laws can be expressed in the form

$$\Delta f(\Delta x) = \varphi_{\Delta x}\,\Delta x^{H}, \tag{4}$$

where Δf is the fluctuation of the field f over a separation Δx, scale was interpreted in an isotropic sense, H is the fluctuation exponent, and, physically, the turbulent fluxes φ are the drivers (compare with Eq. 2). The first and most famous example is the Kolmogorov law for fluctuations in the wind, where the turbulent flux is derived from the energy rate density ε ($\varphi = \varepsilon^{1/3}$) and $H = 1/3$. Equation (4) is the same as Eq. (2), except that the randomness is hidden in the turbulent flux, which classically was considered to be quasi-Gaussian, the non-intermittent special case (Sect. 3.2).
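To make the Kolmogorov case concrete: dropping the order-one prefactor, the law reads Δv ≈ ε^{1/3} Δx^{1/3}. The sketch below evaluates it for an assumed energy flux ε = 10⁻³ W kg⁻¹ (m² s⁻³); that value is our illustrative choice, not one given in the text, and the function name is hypothetical.

```python
def kolmogorov_dv(eps, dx):
    """Typical wind difference (m/s) across a separation dx (m) from the
    Kolmogorov law dv ~ phi * dx**H, with phi = eps**(1/3) and H = 1/3.
    eps is in W/kg (= m^2 s^-3); the order-one prefactor is ignored."""
    H = 1.0 / 3.0
    phi = eps ** (1.0 / 3.0)        # turbulent flux driving the fluctuations
    return phi * dx ** H

eps = 1e-3                          # W/kg, assumed illustrative value
for dx in (1.0, 1e3, 1e6):          # 1 m, 1 km, 1000 km
    print(f"dx = {dx:>9.0f} m -> dv ~ {kolmogorov_dv(eps, dx):.2f} m/s")
```

Because H = 1/3, a factor of 1000 in separation yields only a factor of 10 in velocity difference. Whether the isotropic form applies at the larger separations is exactly the question taken up below.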

Theories and models of isotropic turbulence were developed to understand the fundamental properties of high-Reynolds-number turbulence, independently of whether or not they could be applied to the atmosphere. Since the atmosphere is a convenient, very-high-Reynolds-number laboratory (Re ≈ 10¹²), the question is therefore “Is isotropic turbulence relevant in the atmosphere?” (the title of Lovejoy et al., 2007).

Figure 10 graphically shows the problem: although the laws of isotropic turbulence are themselves scaling, they imply a break in the middle of the “mesoscale” at around 10 km. To model the larger scales, Fjortoft (1953) and Kraichnan (1967) soon found another isotropic scaling paradigm: 2D isotropic turbulence. Charney in particular adapted Kraichnan's 2D isotropic turbulence to geostrophic turbulence (Charney, 1971), and the result is sometimes called “layerwise” 2D isotropic turbulence. While Kraichnan's 2D model was rigidly flat with strictly no vortex stretching, Charney's extension allowed for some limited vortex stretching. Figure 10 shows the implied difference between the 2D isotropic and 3D isotropic regimes.

Even though isotropy had originally been proposed purely for theoretical convenience, armed with two different isotropic scaling laws, it was now being proposed as the fundamental atmospheric paradigm. If scaling in atmospheric turbulence is always isotropic, then we are forced to accept a scale break. The assumption that isotropy is the primary symmetry implies (at least) two scaling regimes with a break (presumably) near the 10 km scale height, i.e. in the mesoscale. The 2D–3D model with its implied “dimensional transition” (Schertzer and Lovejoy, 1985c) already contradicted the wide-range scaling proposed by Richardson.

An important point is that the implied scale break is neither physically nor empirically motivated: it is purely a theoretical consequence of assuming the predominance of isotropy over scaling. One is forced to choose: which of the fundamental symmetries is primary, isotropy or scaling?

By the time the alternative (wide-range) anisotropic scaling paradigm (see Fig. 11 for a schematic) was proposed a decade later (Schertzer and Lovejoy, 1985c, a), Charney's beautiful theory, along with its 2D–3D scale break, had already been widely accepted, and even today it is still taught. More recently (Schertzer et al., 2012), generalized scale invariance was linked directly to the governing equations, so that a clear anisotropic theoretical alternative to Charney's isotropic theory is available.

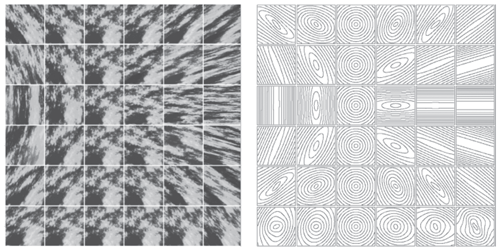

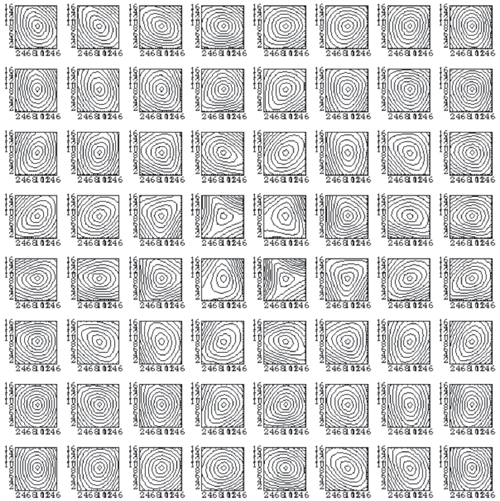

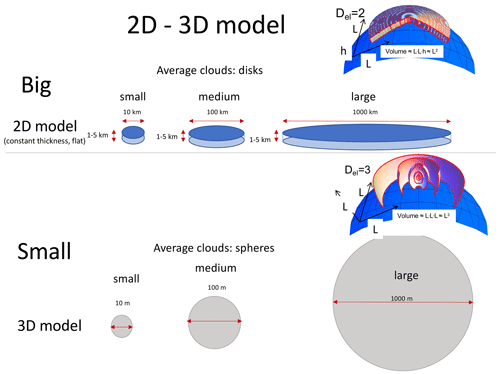

Figure 10. A schematic showing the geometry of isotropic 2D models (top, for the large scales); the volumes of average structures (disks) increase as the square of the disk diameter. The isotropic 3D model is a schematic of 3D turbulence models for the small scales, with the volumes of the spheres increasing as the cube of the diameter. These geometries are superposed on the Earth's curved surface (the blue spherical segments on the right). We see (bottom right, Earth's surface) that – unless they are strongly restricted in range – the 3D isotropic models quickly imply structures that extend into outer space.

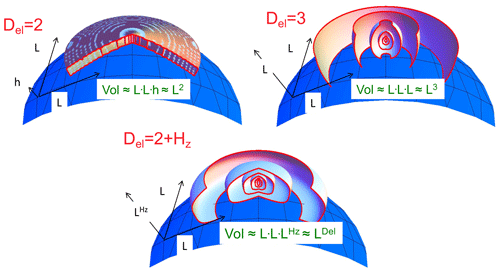

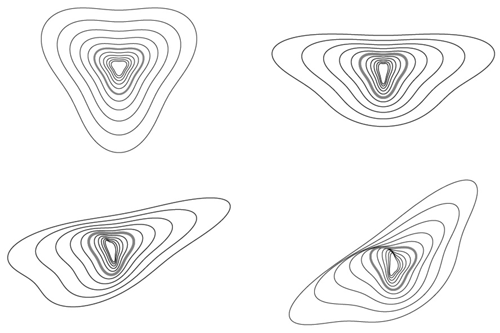

Figure 11. A schematic diagram showing the change in shape of average structures which are isotropic in the horizontal (slightly curved to indicate the Earth's surface) but with scaling stratification in the vertical. $H_z$ increases from 0 (upper left) to 1 (lower right) with an effective “elliptical dimension” $D_{el} = 2 + H_z$. In order to illustrate the change in structures with scale, the ratio of tropospheric thickness to Earth's radius has been increased by nearly a factor of 1000. Note that, in the $D_{el} = 3$ case, the cross sections are exactly circles; the small distortion is an effect of perspective due to the mapping of the structures onto the curved surface of the Earth. Reproduced from Lovejoy and Schertzer (2010c).

2.3 Aspects of scaling in one dimension

The basic signature of scaling is a power-law relation of a statistical characteristic of a system as a function of space scale and/or timescale. In the empirical test of the Richardson law (Fig. 7, left panel), it is the turbulent viscosity as a function of horizontal scale that is a power law. On the right-hand side, it is rather the complicated (fractal) perimeters of clouds and rain zones that are power-law functions of the corresponding areas. The Fig. 7 analysis methods lack generality, so let us instead consider spectra (Fourier space) and, then, fluctuations (real space, Sect. 2.5, Appendix B).

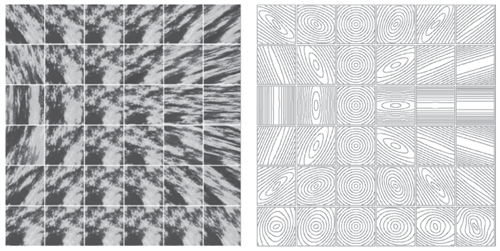

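Following Mitchell, we may consider variability in the spectral domain: for example, the power spectrum of the temperature T(t) is

$$E(\omega) = \left\langle \left|\tilde{T}(\omega)\right|^{2} \right\rangle, \tag{5}$$

where $\tilde{T}(\omega)$ is its Fourier transform and ω is the frequency. A scaling process E(ω) has the same form if we consider it at a timescale λ times smaller or, equivalently, at a frequency λ times larger:

$$E(\lambda\omega) = \lambda^{-\beta}\,E(\omega), \tag{6}$$

where β is the “spectral exponent”. The solution of this functional equation is a power law:

$$E(\omega) \propto \omega^{-\beta}. \tag{7}$$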

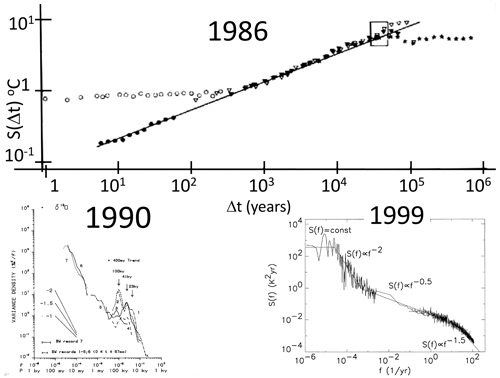

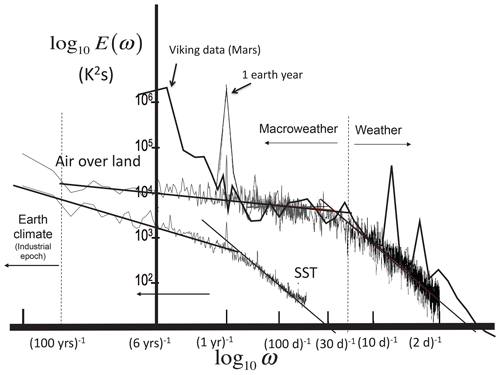

Therefore, a log–log plot of the spectrum as a function of frequency will be a straight line; see Fig. 12 for early quantitative applications to climate series.
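As a minimal sketch of this procedure, the following generates a hypothetical power-law spectrum with multiplicative scatter and recovers β as minus the slope of a least-squares fit in log–log coordinates. The exponent, prefactor, and noise level are arbitrary illustrative choices.

```python
import numpy as np

rng = np.random.default_rng(1)

beta_true = 1.6                          # hypothetical spectral exponent
omega = np.logspace(-3, 2, 60)           # frequencies spanning five decades

def E_model(w):
    """Pure power-law spectrum E(omega) = A * omega**(-beta), with A = 5."""
    return 5.0 * w ** (-beta_true)

# The power law satisfies the scaling relation E(lam*omega) = lam**(-beta) E(omega).
lam = 10.0
scale_invariant = np.allclose(E_model(lam * omega), lam ** (-beta_true) * E_model(omega))

# "Observed" spectrum: the power law with log-normal scatter about it.
E_obs = E_model(omega) * np.exp(rng.normal(0.0, 0.3, omega.size))

# A straight line on a log-log plot: its slope is -beta.
slope, intercept = np.polyfit(np.log10(omega), np.log10(E_obs), 1)
beta_est = -slope
print(f"estimated beta = {beta_est:.2f}")
```

A single global fit like this assumes one scaling regime; with real series, the fit range has to be restricted to each regime separately.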

Alternatively, we can consider scaling in real space. Due to “Tauberian theorems” (e.g. Feller, 1971), power laws in real space are transformed into power laws in Fourier space (and vice versa). This result holds whenever the scaling range is wide enough, i.e. even if there are high- and/or low-frequency cutoffs (needed, if only, for the convergence of the transforms). If we consider fluctuations ΔT over a time interval Δt and the system is scaling, we can introduce the (“generalized”, qth-order) structure function as

$$S_q(\Delta t) = \left\langle \left|\Delta T(\Delta t)\right|^{q} \right\rangle \propto \Delta t^{\xi(q)}, \tag{8}$$

where the angle brackets ⟨⟩ indicate statistical (ensemble) averaging (assuming statistical stationarity, there is no t dependence). Once again, classical fluctuations are defined simply as differences, i.e. ΔT(Δt) = T(t + Δt) − T(t), although more general fluctuations are needed, as discussed in Sect. 2.5. For stationary scaling processes, the Wiener–Khintchin theorem implies a simple relation between the real-space and Fourier scaling exponents:

$$\beta = 1 + \xi(2) \tag{9}$$

(the “2” is because the variance is a second-order moment). If, in addition, the system is “quasi-Gaussian”, then S₂ gives a full statistical characterization of the process. Therefore, often only the second-order structure function S₂(Δt) is considered (e.g. Fig. 12, top). However, as discussed above, geo-processes are typically strongly intermittent and rarely quasi-Gaussian, and the full exponent function ξ(q) is needed (Sect. 3.1). In the next section, we discuss this figure in more detail, including its physical implications. For the moment, simply note the various linear (scaling) regimes in the log–log plots.
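A minimal sketch of estimating ξ(2) from the structure function S_q(Δt) = ⟨|ΔT(Δt)|^q⟩ ∝ Δt^{ξ(q)}, with differences as fluctuations. The test series is an assumption for illustration: a synthetic Gaussian random walk (stationary increments), for which ξ(2) = 1 and hence, via β = 1 + ξ(2), β = 2. The function name is hypothetical.

```python
import numpy as np

rng = np.random.default_rng(42)
# Synthetic test series (illustrative assumption): a Gaussian random walk.
T = np.cumsum(rng.normal(size=2**16))

def structure_function(x, lag, q=2.0):
    """q-th order structure function using differences as fluctuations."""
    dx = x[lag:] - x[:-lag]
    return np.mean(np.abs(dx) ** q)

lags = 2 ** np.arange(0, 10)             # 1, 2, 4, ..., 512 time steps
S2 = np.array([structure_function(T, lag) for lag in lags])

# xi(2) is the log-log slope of S2 versus lag.
xi2 = np.polyfit(np.log(lags), np.log(S2), 1)[0]
beta = 1.0 + xi2
print(f"xi(2) = {xi2:.2f}, implied beta = {beta:.2f}")
```

Changing `q` gives the other moments needed for ξ(q) in the intermittent case; for a Gaussian random walk ξ(q) is simply q/2.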

Figure 12. The evolution of the scaling picture for 1986–1999. Top: the rms difference structure functions estimated from local (central England) temperatures since 1659 (open circles, upper left), Northern Hemisphere temperature (black circles), and paleotemperatures from Vostok (Antarctic, solid triangles), Camp Century (Greenland, open triangles), and an ocean core (asterisks). For the Northern Hemisphere temperatures, the (power-law, linear in this plot) climate regime starts at about 10 years. The reference line has a slope H = 0.4. The rectangle (upper right) is the “glacial–interglacial window” through which the structure function must pass in order to account for typical variations of ±2 to ±3 K for cycles with half-periods ≈ 50 kyr. Reproduced from Lovejoy and Schertzer (1986b). Note the two essentially flat sets of points: one from the local central England temperature up to roughly 300 years and the other from an ocean core, which is flat at scales of 100 000 years and longer. These correspond to the macroweather and macroclimate regimes, where H < 0, so the flatness is an artefact of the use of differences in the definition of fluctuations (Appendix B2). Bottom left: composite spectrum of δ¹⁸O paleotemperatures from Shackleton and Imbrie (1990). Bottom right: composite using instrumental temperatures (right) and paleotemperatures (left) with piecewise linear (power-law) reference lines. The composite is not very different from the updated one shown in Fig. 13. The lower-right composite is reproduced from Pelletier (1998).

2.4 The impact of data on the scalebound view

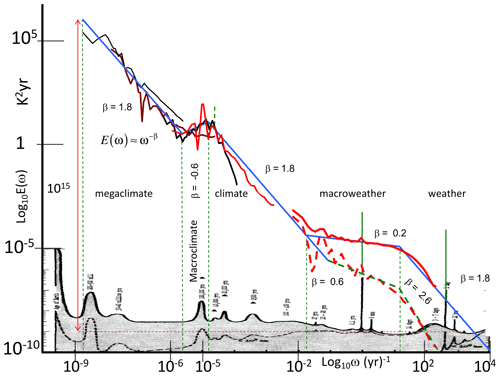

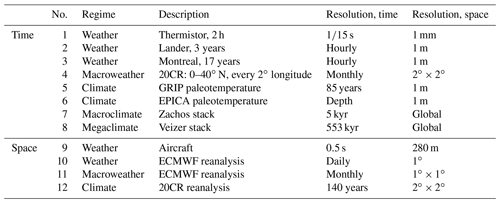

In spite of its growing disconnect with modern data, Mitchell's figure and its scalebound updates continue to be influential. However, within 15 years of Mitchell's famous paper, two scaling composites, over the ranges 1 h to 10⁵ years and 10³ to 10⁸ years, already showed huge discrepancies (Lovejoy and Schertzer, 1986b, Fig. 12, top panel; Shackleton and Imbrie, 1990, bottom left; see also Pelletier, 1998, bottom right, and Huybers and Curry, 2006). Returning to Mitchell's original figure, Lovejoy (2015) superposed the spectra of several modern instrumental and paleo series; the differences are literally astronomical (Fig. 13). While over the range 1 h to 10⁹ years Mitchell's background varies by a factor ≈ 150, the spectra from real data imply that the true range is a factor greater than a quadrillion (10¹⁵).

Figure 13. A comparison of Mitchell's educated guess of a log–log spectral plot (grey, bottom, Mitchell, 1976) superposed with modern evidence from spectra of a selection of the series described in Table 1 and Lovejoy (2015), from which this figure is reproduced. On the far right, the spectra from the 1871–2008 20CR (at daily resolution) quantify the difference between the globally averaged temperature (bottom right, red line) and local averages (2° × 2°, top right, red line). The spectra were averaged over frequency intervals (10 per factor of 10 in frequency), thus “smearing out” the daily and annual spectral “spikes”. These spikes have been re-introduced without this averaging and are indicated by green spikes above the red daily-resolution curves. Using the daily-resolution data, the annual cycle is a factor ≈ 1000 above the continuum, whereas using hourly-resolution data, the daily spike is a factor ≈ 3000 above the background. Also shown is the other striking narrow spectral spike at (41 kyr)⁻¹ (obliquity, circa a factor of 10 above the continuum); this is shown in dashed green since it is only apparent over the period 0.8–2.56 Myr BP (before present). The blue lines have slopes indicating the scaling behaviours. The thin dashed green lines show the transition periods that separate the scaling regimes; these are (roughly) at 20 d, 50 years, 80 000 years, and 500 000 years. This is reproduced from Lovejoy (2015).

Figure 14. Artist's rendering with data superposed. Adapted from Ghil (2002) and reprinted in Ghil and Lucarini (2020).

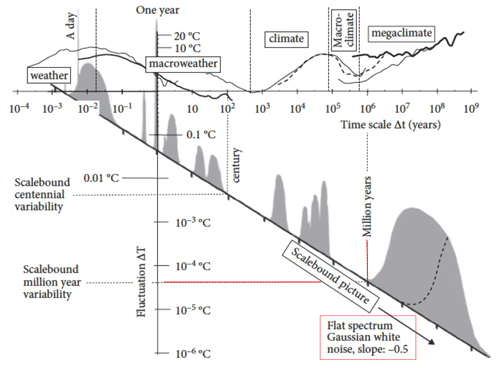

Returning to the artist's rendering, Fig. 14 shows that, when compared to the data, it fares no better than Mitchell's educated guess. The next update – the NOAA's mental model – only specified that its vertical axis be proportional to “variability”. If we interpret variability as the root-mean-square (rms) fluctuation at a given scale and the flat “background” between the bumps as white noise, then we obtain the comparison in Fig. 15. Although the exact definition of these fluctuations is discussed in Sect. 2.5, they give a directly physically meaningful quantification of the variability at a given timescale. In Fig. 15, we see that the mental model predicts that successive 1-million-year averages of the Earth's temperature would differ by only tens of micro-Kelvin. A closely similar conclusion would hold if we converted Mitchell's spectrum into rms real-space fluctuations.
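The smallness of such numbers follows from the white-noise assumption alone: for uncorrelated noise, the rms difference between consecutive averages falls as Δt^{−1/2}. The sketch below makes this concrete under a purely hypothetical background amplitude of 1 K at hourly resolution; that amplitude is an assumption for illustration, not a value read from Fig. 15, and the function name is hypothetical.

```python
import math

# Hypothetical assumption: an uncorrelated (white-noise) hourly temperature
# background with an rms amplitude of 1 K (not a value taken from the figures).
sigma_hourly = 1.0                       # K
hours_per_Myr = 1e6 * 365.25 * 24        # number of hourly values in 1 Myr

def consecutive_mean_difference_rms(sigma, n):
    """RMS difference between two consecutive, non-overlapping means of n
    independent values with rms sigma: each mean has variance sigma**2 / n,
    so their difference has variance 2 * sigma**2 / n."""
    return math.sqrt(2.0 / n) * sigma

dT_Myr = consecutive_mean_difference_rms(sigma_hourly, hours_per_Myr)
print(f"{dT_Myr * 1e6:.1f} micro-K")
```

With this hypothetical amplitude the result is of order 10 µK. The result scales linearly with the assumed amplitude, so even a background ten times larger would give well under a millikelvin, far below the ±2 to ±3 K glacial–interglacial variations mentioned in the Fig. 12 caption.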

The most recent scalebound update – the “conceptual landscape” – is compared with modern data in Fig. 16. Although the various scaling regimes proposed in Lovejoy (2013) (updated in Fig. 18 and discussed below) are discreetly indicated in the background, in many instances there is no obvious relation between the regimes and the landscape. In particular, the word “macroweather” appears without any obvious connection to the figure, and even the landscape's highlighted scalebound features are not very close to the empirical curve (red). Although the vertical axis is only “relative”, this quantitative empirical comparison was made possible by exploiting the equal-area property mentioned above. The overlaid solid red curve was estimated by converting the disjoint spectral power laws shown in the updated Mitchell graph (Fig. 13). In addition, there is also an attempt to indicate the amplitudes of the narrow spectral spikes (the green spikes in Fig. 13) at diurnal, annual, and – for the epoch 2.5–0.8 Myr – obliquity spectral peaks at (41 kyr)⁻¹. In conclusion, the conceptual landscape bears little relation to the real world.

Figure 15. Mental model with data. The data spectrum in Fig. 13 is replotted in terms of fluctuations (grey, top; see Fig. 17). The diagonal axis corresponds to the flat baseline of Fig. 6 (lower left), which now has a slope of −1/2, corresponding to an uncorrelated Gaussian “white noise” background. Since the amplitudes in Fig. 6 (lower left) were not specified, the amplitudes of the transformed “bumps” are only notional. At the top are superposed the typical Haar fluctuations at timescale Δt as estimated from various instrumental and paleo-series, from Fig. 17 (bottom, using the data displayed in Fig. 2). We see (lower right) that consecutive 1 Myr averages would only differ by several micro-Kelvin. Reproduced from Lovejoy (2019).

Figure 16. Conceptual landscape with data. The superposed red curves use the empirical spectra in Fig. 13 and adjust the (linear) vertical scale for a rough match with the landscape. The vertical lines indicate huge periodic signals (the diurnal and annual cycles on the right and, on the left, the obliquity signal seen in spectra between 0.8 and 2.5 Myr ago). Adapted from von der Heydt et al. (2021).

2.5 Revisiting the atmosphere with the help of fluctuation analysis

The scalebound framework for atmospheric dynamics emphasized the importance of numerous processes occurring at well-defined timescales, the quasi-periodic “foreground” processes illustrated as bumps – the signals – on Mitchell's nearly flat background. The point here is not that these processes and mechanisms are wrong or non-existent: it is rather that they only explain a small fraction of the overall variability, and this implies that they cannot be understood without putting them in the context of their dynamical (scaling) regime. This was also demonstrated quantitatively and explicitly over at least a significant part of the climate range by Wunsch (2003).

One of the lessons to be drawn from the educated guesses, artists' renderings, and conceptual landscapes is that, although spectra can be calculated for any signal, the interpretations are often not obvious. The problem is that we have no intuition about the physical meaning of the units – K² s, K² yr, or even K² Myr – so that often (as here) the units used in spectral plots are not even given. It then becomes impossible to take data from disparate sources and at different timescales to make the spectral composites needed to make a meaningful check of the scalebound paradigm.

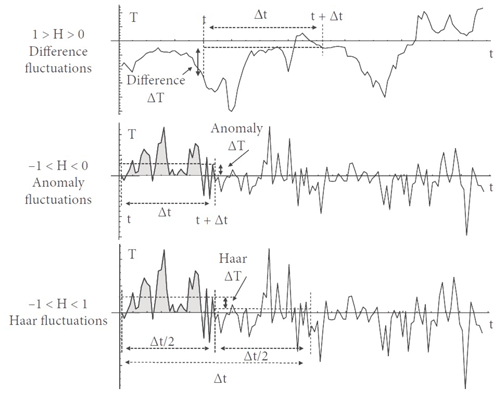

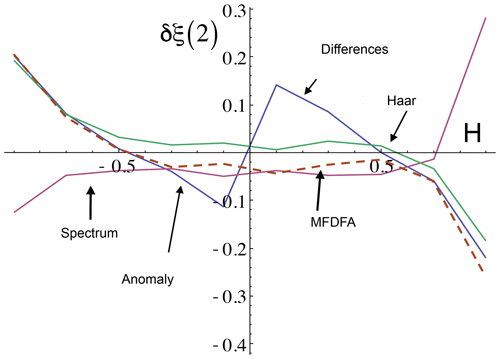

The advantage of fluctuations such as in Fig. 12 (top) is that the numbers – e.g. the rms temperature fluctuations at some scale – have a straightforward physical interpretation. However, the differences used to define fluctuations (see Fig. 17, top) have a non-obvious problem: on average, differences cannot decrease with increasing time intervals (in Appendix B, this problem is discussed more precisely in the Fourier domain). This is true for any series that has correlations that decrease with Δt (as physically relevant series always do). A consequence is that whenever the value of ξ(2) is negative – implying that the mean fluctuations decrease with scale – the difference fluctuations will at best give a constant result (the flat parts of Fig. 12, top).
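The flattening is easy to reproduce with synthetic data. In this sketch the series is Gaussian white noise (an illustrative choice, not data from the figures), for which the true fluctuation exponent is H = −1/2; nonetheless the rms difference stays close to √2 σ at every lag, so differences would suggest H ≈ 0.

```python
import numpy as np

rng = np.random.default_rng(0)
noise = rng.normal(size=2**16)           # white noise, sigma = 1, true H = -1/2

lags = 2 ** np.arange(0, 11)             # 1 ... 1024 time steps
rms_diff = np.array([np.sqrt(np.mean((noise[lag:] - noise[:-lag]) ** 2)) for lag in lags])

# Each difference of two independent values has variance 2 sigma^2 whatever the
# lag, so the rms difference is flat and the apparent exponent is ~0, not -1/2.
apparent_H = np.polyfit(np.log(lags), np.log(rms_diff), 1)[0]
print(np.round(rms_diff, 2), f"apparent H = {apparent_H:.2f}")
```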

However, do regions of negative ξ(2) exist? One way to investigate this is to infer the exponent ξ(2) from the spectrum, which does not suffer from an analogous restriction: its exponent β can take any value. In this case we can use the formula β = 1 + ξ(2) (Eq. 9). The latter implies that negative ξ(2) corresponds to β < 1, and a check on the spectrum in Fig. 13 indicates that several regions (notably the macroweather regime) are indeed flat enough (β < 1) to imply negative ξ(2). How do we fix the problem and estimate the correct ξ(2) when it is negative?
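The bookkeeping is simple enough to write down directly. The sketch below converts a spectral exponent into ξ(2) using β = 1 + ξ(2) and, in the quasi-Gaussian approximation ξ(2) = 2H discussed later in this section, into H. The β values are hypothetical examples, not readings from Fig. 13, and the function names are our own.

```python
def xi2_from_beta(beta):
    """Second-order structure-function exponent from the spectral exponent,
    using beta = 1 + xi(2)."""
    return beta - 1.0

def H_quasi_gaussian(beta):
    """Fluctuation exponent H = xi(2) / 2 (valid only if intermittency is ignored)."""
    return xi2_from_beta(beta) / 2.0

# Hypothetical spectral exponents: white noise, a flat "macroweather-like"
# value, the beta = 1 boundary, and a random walk.
for beta in (0.0, 0.6, 1.0, 2.0):
    xi2 = xi2_from_beta(beta)
    sign = "negative" if xi2 < 0 else "non-negative"
    print(f"beta = {beta:.1f}: xi(2) = {xi2:+.1f} ({sign}), H = {H_quasi_gaussian(beta):+.2f}")
```

Any β < 1 flags a regime in which difference fluctuations will be misleading.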

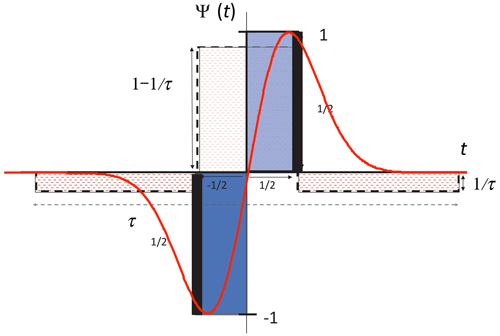

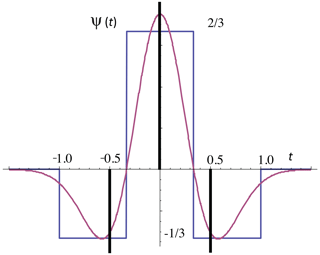

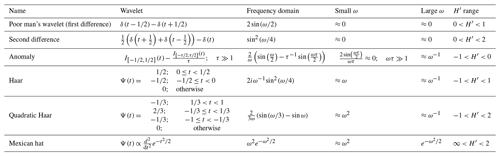

It took a surprisingly long time to clarify this issue. To start with, since ξ(2) > 0 in classical turbulence (e.g. the Kolmogorov law), there was no motivation to look beyond differences. Mathematically, the main advance came in the 1980s from wavelets. It turns out that, technically, fluctuations defined as differences are indeed wavelets, but mathematicians mock them as the “poor man's wavelet” and generally promote more sophisticated wavelets (see Appendix B2): the simplicity of the physical interpretation is not their concern. This was the situation in the 1990s, when scaling started to be systematically applied to geophysical time series involving negative ξ(2) (i.e. to any macroweather series, although at the time this was not clear). A practical solution adopted by many was the detrended fluctuation analysis (DFA) method (Peng et al., 1994). One reason the DFA method is popular is that the raw DFA fluctuations are not very noisy. However, this is in fact an artefact: they are fluctuations of the running sum of the process, not of the process itself. When DFA fluctuations of the process are used, they are just as variable as the Haar fluctuations (Lovejoy and Schertzer, 2012a; Hébert et al., 2021a). Unfortunately, DFA fluctuations are difficult to interpret, so typically only exponents are extracted: the important information contained in the fluctuation amplitudes is not exploited (see Appendix B).
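For readers unfamiliar with DFA, the following is a minimal sketch of the common first-order variant; it is our own simplified implementation, not the code used in the cited studies. Note that it operates on the running sum ("profile") of the series, which is the point made above. Assuming the standard relation α = H + 1 between the DFA exponent α and the fluctuation exponent H, white noise (H = −1/2) should give α ≈ 1/2.

```python
import numpy as np

def dfa(x, window_sizes, order=1):
    """Minimal DFA sketch: returns F(n), the rms residual of the running sum
    ("profile") after removing a polynomial trend of the given order in each
    non-overlapping window of length n."""
    profile = np.cumsum(x - np.mean(x))
    F = []
    for n in window_sizes:
        m = len(profile) // n
        segments = profile[: m * n].reshape(m, n)
        t = np.arange(n)
        resid_sq = []
        for seg in segments:
            coeffs = np.polyfit(t, seg, order)                 # local trend
            resid_sq.append(np.mean((seg - np.polyval(coeffs, t)) ** 2))
        F.append(np.sqrt(np.mean(resid_sq)))
    return np.array(F)

rng = np.random.default_rng(0)
noise = rng.normal(size=2**15)           # white noise, H = -1/2
sizes = 2 ** np.arange(4, 11)            # windows of 16 ... 1024 points
F = dfa(noise, sizes)

alpha = np.polyfit(np.log(sizes), np.log(F), 1)[0]
H_dfa = alpha - 1.0                      # assumed relation alpha = H + 1
print(f"alpha = {alpha:.2f}, H = {H_dfa:.2f}")
```

The exponent comes out right, but the amplitudes F(n) refer to the detrended running sum, which is why they are hard to relate to temperature changes at a given timescale.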

New clarity was achieved with the help of the (first) Haar wavelet (Haar, 1910), for two reasons: the simplicity of its definition and calculation, and the simplicity of its interpretation (Lovejoy and Schertzer, 2012a). To determine the Haar fluctuation over a time interval Δt, one simply takes the average of the second half of the interval and subtracts from it the average of the first half (Fig. 17, bottom; see Appendix B2 for more details). As for the interpretation, when H is positive, it is (nearly) the same as a difference, whereas when H is negative, the fluctuation can be interpreted as an “anomaly” (in this context, an anomaly is simply the average over a segment of length Δt of the series with its long-term average removed, Appendix B2). In both cases we also recover the correct value of the exponent H. Although the Haar fluctuation is only useful for H in the range −1 to 1, this turns out to cover most of the series encountered in geoscience.
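A minimal sketch of rms Haar fluctuations follows, using the definition above (mean of the second half minus mean of the first half of each interval; the sign does not affect the rms). The function names, the use of non-overlapping intervals, and the absence of any calibration factor are our own simplifying choices. Applied to synthetic white noise it should recover H ≈ −1/2, where differences fail, and applied to a random walk H ≈ +1/2.

```python
import numpy as np

def haar_rms(x, lag):
    """RMS Haar fluctuation at an even lag: over non-overlapping intervals of
    length lag, the mean of the second half minus the mean of the first half."""
    half = lag // 2
    m = len(x) // lag
    blocks = x[: m * lag].reshape(m, lag)
    fluct = blocks[:, half:].mean(axis=1) - blocks[:, :half].mean(axis=1)
    return np.sqrt(np.mean(fluct ** 2))

def haar_exponent(x, lags):
    """Estimate H as the log-log slope of the rms Haar fluctuation versus lag."""
    rms = np.array([haar_rms(x, lag) for lag in lags])
    return np.polyfit(np.log(lags), np.log(rms), 1)[0]

rng = np.random.default_rng(0)
noise = rng.normal(size=2**16)           # H = -1/2: fluctuations cancel
walk = np.cumsum(noise)                  # H = +1/2: the series "wanders"
lags = 2 ** np.arange(2, 11)             # 4 ... 1024 time steps

H_noise = haar_exponent(noise, lags)
H_walk = haar_exponent(walk, lags)
print(f"white noise: H = {H_noise:.2f}; random walk: H = {H_walk:.2f}")
```

Unlike the DFA amplitudes, `haar_rms` returns values in the units of the series itself, which is what makes plots like Fig. 18 directly interpretable.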

Figure 17. Schematic illustration of difference (top) and anomaly (middle) fluctuations for a multifractal simulation of the atmosphere in the weather regime (H > 0), top, and in the lower-frequency macroweather regime (H < 0), middle. Note the wandering or drifting of the signal in the top panel and the cancelling behaviour in the middle one. The bottom panel is a schematic illustration of Haar fluctuations (useful for processes with −1 < H < 1). The Haar fluctuation over the interval Δt is the mean of the first half subtracted from the mean of the second half of the interval Δt. Reproduced from Lovejoy (2019).

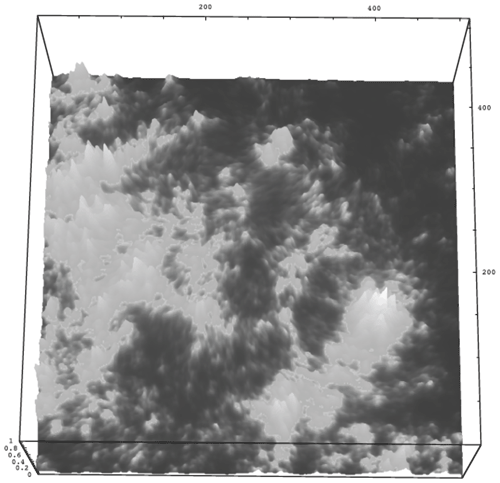

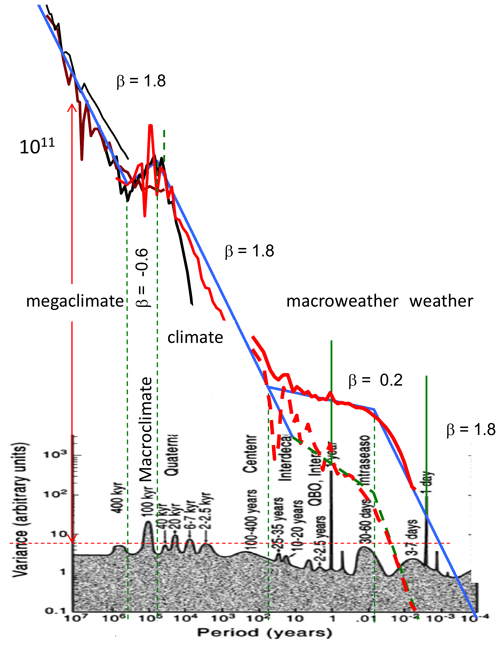

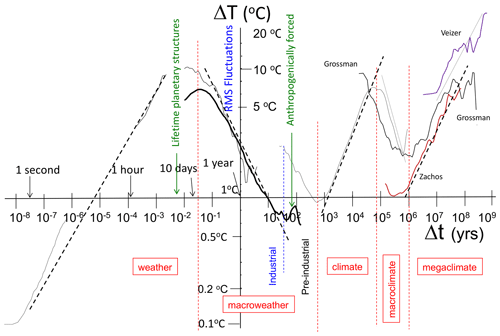

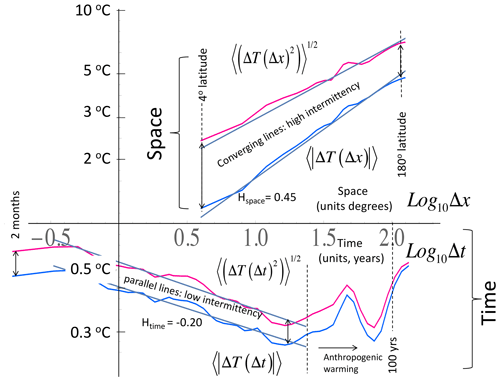

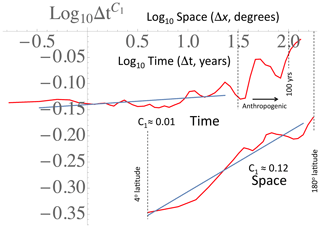

Figure 18 shows a modern composite using the rms Haar fluctuation, spanning a range of scales of ≈10¹⁷ (compare this with Fig. 12, top, for the earlier version using fluctuations as differences). The same five regimes as in Fig. 13 are shown, but now the typical variations in temperature over various timescales are very clear.

Also shown in Fig. 18 are reference lines indicating the typical scale dependencies. These correspond to typical temperature fluctuations $\Delta T(\Delta t) \approx \Delta t^{H}$, where ξ(1)=H is the “fluctuation exponent” (the exponent of the mean absolute fluctuation). The relationship ξ(2)=2H is valid if we ignore intermittency: it is the quasi-Gaussian relationship that is still often invoked (see Eq. 15). In the figure, we see that the regimes alternate between those in which fluctuations grow (H>0) and those in which they decrease (H<0) with timescale. The sign of H has a fundamental significance; to see this, we can return to the typical series from the various regimes in Fig. 2 (left-hand column). Visually, the H>0 regimes have signals that seem to “wander” or “drift”, whereas in the H<0 regimes, fluctuations tend to cancel. In the former, waiting longer and longer typically leads to larger changes in temperature, whereas in the latter, averaging over longer and longer timescales leads to convergence to well-defined values.

With the help of the figure, we can now understand the problem with the usual definition of climate as “long-term” weather. As we average from 10 d to longer durations, temperature fluctuations do indeed tend to diminish, as expected if they converged to the climate. Consider for example the thick solid line in Fig. 18 (corresponding to data at 75° N), which shows that, at about 10 d, the temperature fluctuations are about 3 K (±1.5 K), diminishing at 20 years to about 0.3 K. Since H<0, the Haar fluctuations are nearly equivalent to the anomalies, i.e. to averages of the series with the long-time mean removed. Over this range, increasing the scale leads to smaller and smaller fluctuations about the apparent point of convergence: the average climate temperature. Figure 18 also shows the longer scales deduced purely from paleodata (isotope ratios from either ice or ocean cores).

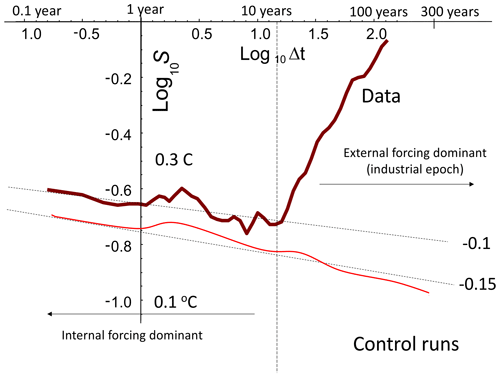

The interpretation of the apparent point of convergence as the climate state is supported by the analysis of global data compared with GCMs in “control runs” (i.e. with fixed external conditions, Fig. 19). When averaged over long enough times, the control runs do indeed converge, although the convergence is “ultra slow” (at a rate characterized by the exponent H ≈ −0.15 for the GCMs). Extrapolating from the figure shows that, even after averaging over 1 million simulated years, the GCMs would typically still only be within ±0.01 K of their respective climates.
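To see how slow this convergence is, assume that rms fluctuations scale as ΔT ∝ Δt^H and take the quoted control-run exponent as H ≈ −0.15 (negative because fluctuations decrease with scale). The hypothetical helpers below compute how much the fluctuations shrink when the averaging time is multiplied by a given factor, and how large that factor must be for a tenfold reduction; uncorrelated noise (H = −1/2) is included for contrast. This is a back-of-the-envelope sketch, not the extrapolation actually used for Fig. 19.

```python
def fluctuation_factor(scale_ratio, H):
    """Factor by which the rms fluctuation changes when the averaging time is
    multiplied by scale_ratio, assuming Delta T ∝ Delta t**H."""
    return scale_ratio ** H

def scale_ratio_for_reduction(reduction, H):
    """Factor by which the averaging time must grow to divide the rms
    fluctuation by `reduction` (only meaningful for H < 0)."""
    if H >= 0:
        raise ValueError("fluctuations only converge for H < 0")
    return reduction ** (-1.0 / H)

H_GCM = -0.15    # control-run convergence exponent (sign taken as negative)
H_WHITE = -0.5   # uncorrelated noise, for contrast

for ratio in (10, 100, 10_000):
    print(f"x{ratio:>6} longer averages -> fluctuations x{fluctuation_factor(ratio, H_GCM):.2f}")
print(f"tenfold reduction, H = -0.15: scale ratio ≈ {scale_ratio_for_reduction(10, H_GCM):.1e}")
print(f"tenfold reduction, H = -0.5 : scale ratio ≈ {scale_ratio_for_reduction(10, H_WHITE):.0f}")
```

With H ≈ −0.15, averaging 10, 100, and 10 000 times longer only reduces the fluctuations by factors of roughly 0.71, 0.50, and 0.25, and a tenfold reduction requires averaging several million times longer, compared with only 100 times longer for uncorrelated noise.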

Figure 18. The broad sweep of atmospheric variability with rms Haar fluctuations, showing the various (roughly power-law) atmospheric regimes, adapted and updated from the original (Lovejoy, 2013) and the update in Lovejoy (2015), where the full details of the data sources are given (with the exception of the paleo-analysis marked “Grossman”, which is from Grossman and Joachimski, 2022). The dashed vertical lines show the rough divisions between regimes; the macroweather–climate transition is different in the preindustrial epoch. Starting at the left, we have the high-frequency analysis (lower left) from thermistor data taken at McGill at 15 Hz. Then, the thin curve starting at 2 h is from a weather station, the next (thick) curve is from the 20th century reanalysis (20CR), and the next, “S”-shaped curve is from the EPICA core. Finally, the three far-right curves are benthic paleotemperatures (from “stacks”). The quadrillion estimate is for the spectrum: it depends somewhat on the calibration of the stacks. With the calibration in the figure, the typical variation of consecutive 50-million-year averages is ±4.5 K (Δt = 10⁸ years, rms ΔT = 9 K). If the calibration is lowered by a factor of ≈3 (to variations of ±1.5 K), then the spectrum would be reduced by a factor of 9. On the other hand, the addition of the 0.017 s resolution thermistor data increases the overall spectral range by another factor of 10⁸, for a total spectral range of a factor of ≈10¹⁷ for scales from 0.017 s to 5 × 10⁸ years.

Figure 19. Top (brown): the globally averaged rms Haar temperature fluctuations averaged over three data sets (adapted from Lovejoy, 2019, where full details are given; the curve over the corresponding timescale range in Fig. 18 is at 75° N and is a bit different). At small timescales, one can see reasonable power-law behaviour with H ≈ −0.1. However, for scales longer than about 15 years, the externally forced variability becomes dominant, although, in reality, the internal variability continues to larger scales and the externally forced variability to smaller ones. The two can roughly be separated at decadal scales, as indicated by the vertical dashed line. The curve is reproduced from Lovejoy (2017a). Bottom (red): the rms Haar fluctuations for 11 control runs from the Coupled Model Intercomparison Project Phase 5 (CMIP5). The reference slope H = −0.15 is adapted from Lovejoy (2019).

Returning to Fig. 18, however, we see that, beyond a critical timescale τc, the convergence is reversed and fluctuations tend rather to increase with timescale. In the Anthropocene (roughly since 1900), the ≈ 15-year timescale where fluctuations stop decreasing and begin increasing with scale is roughly the time that it has taken for anthropogenic warming (over the last decades) to become comparable to the natural internal variability (about ±0.2 K for these globally averaged temperatures). However, for the preindustrial epoch (see the “S”-shaped paleotemperature curve from the EPICA (European Project for Ice Coring in Antarctica) ice core, Fig. 18), the transition time is closer to 300 years. The origin of this larger τc value is not clear; it is a focus of the PAGES–CVAS (Past Climate – Climate Variability Across Scales) working group (Lovejoy, 2017b).
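The transition scale τc can be located, at least in a toy setting, as the minimum of the rms Haar curve. The sketch below rests on loudly stated assumptions: the “internal variability” is white noise (H = −1/2, much steeper than real macroweather) and the “forcing” is a linear trend; the noise level, trend, series length, and helper name are all hypothetical. For this toy model, the squared rms Haar fluctuation is 4σ²/Δt + a²Δt²/4 (σ the noise level, a the trend per step), whose minimum lies at Δt = 2(σ/a)^{2/3}, so the numerical minimum can be checked against that value.

```python
import math
import random

def rms_haar_from_prefix(prefix, dt):
    """rms Haar fluctuation at even scale dt over non-overlapping windows.

    prefix[i] holds the sum of the first i values, so each half-window mean
    costs O(1). Convention: first-half mean minus second-half mean.
    """
    half = dt // 2
    n_windows = (len(prefix) - 1) // dt
    total = 0.0
    for k in range(n_windows):
        s = k * dt
        first = (prefix[s + half] - prefix[s]) / half
        second = (prefix[s + dt] - prefix[s + half]) / half
        total += (first - second) ** 2
    return math.sqrt(total / n_windows)

# Hypothetical toy series: white-noise "internal variability" plus a linear "forced" trend.
rng = random.Random(1)
n = 2 ** 17
sigma, trend = 1.0, 1e-3
series = [rng.gauss(0.0, sigma) + trend * t for t in range(n)]

prefix = [0.0]
for v in series:
    prefix.append(prefix[-1] + v)

scales = [2 ** k for k in range(2, 13)]             # 4 ... 4096 time steps
curve = {dt: rms_haar_from_prefix(prefix, dt) for dt in scales}
tau_c = min(curve, key=curve.get)                   # scale of the minimum fluctuation
tau_c_theory = 2.0 * (sigma / trend) ** (2.0 / 3.0) # analytic minimum for this toy model

for dt in scales:
    print(f"dt = {dt:5d}  rms Haar = {curve[dt]:.3f}")
print(f"estimated tau_c = {tau_c}, toy-model prediction ≈ {tau_c_theory:.0f}")
```

Because the scales are powers of 2, the numerical minimum lands on the grid point nearest the analytic value (about 200 steps here). Below it fluctuations decrease with scale (macroweather-like); above it the trend dominates and they increase (climate-like).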

Regarding the last 100 kyr, the key point about Fig. 18 is that we have three regimes – not two. Since the intermediate regime is well reproduced by control runs (Fig. 19), it is termed “macroweather”: it is essentially averaged weather.

If the macroweather regime is characterized by slow convergence of averages with scale, it is logical to define a climate state as an average over durations that are long enough so that the maximum convergence has occurred – i.e. over periods Δt>τc. In the Anthropocene, this gives some objective justification for the official World Meteorological Organization's otherwise arbitrary climate-averaging period of 30 years. Similarly, the roughly 10 d to 1-month weather–macroweather transition at τw gives some objective justification for the common practice of using monthly average anomalies: these define analogous macroweather states. The climate regime is therefore the regime beyond τc where the climate state itself starts to vary. In addition to the analyses presented here, there are numerous papers claiming evidence for power-law climate regimes: Lovejoy and Schertzer (1986b), Shackleton and Imbrie (1990), Schmitt et al. (1995), Ditlevsen et al. (1996), Pelletier (1998), Ashkenazy et al. (2003), Wunsch (2003), and Huybers and Curry (2006); for a more comprehensive review, see the discussion and Table 11.4 in Lovejoy and Schertzer (2013).

Again from Fig. 18, we see that the climate state itself varies in a roughly scaling way up to Milankovitch timescales (about 50 kyr, half the period of the main 100 kyr eccentricity cycle), over which fluctuations are typically of the order of ±2 to ±4 K: the glacial–interglacial “window” of typical variability (Lovejoy and Schertzer, 1986) that is quite clear in the figure (the most recent estimate is a total range of 6 K, or ±3 K; Tierney et al., 2020). At even larger scales, there is evidence (from ice core and benthic paleodata, notably updated with a much-improved megaclimate series by Grossman and Joachimski, 2022, bold curve on the right) of a narrow macroclimate regime followed by a wide-range megaclimate regime, but these are outside our present scope (see Lovejoy, 2015, for more discussion).

2.6 Lagrangian space–time relations, Stommel diagrams, and the weather–macroweather transition time

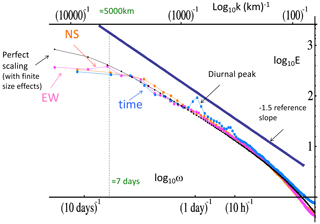

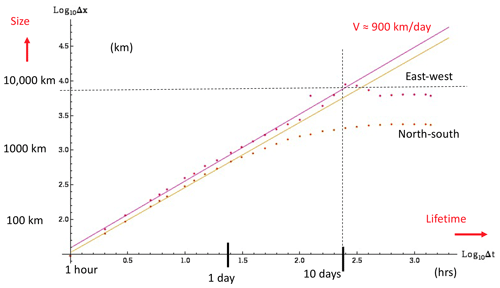

2.6.1 Space–time scaling from the anisotropic Kolmogorov law

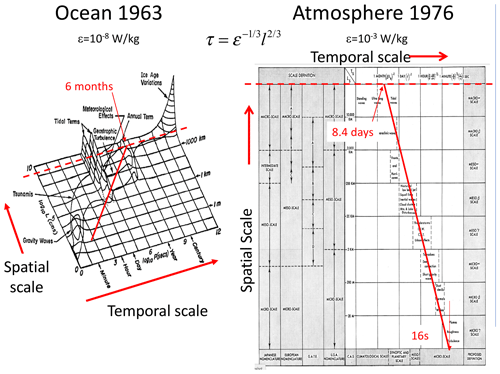

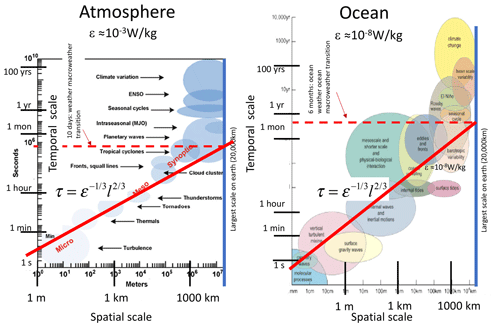

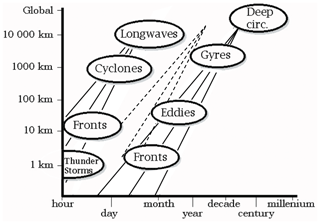

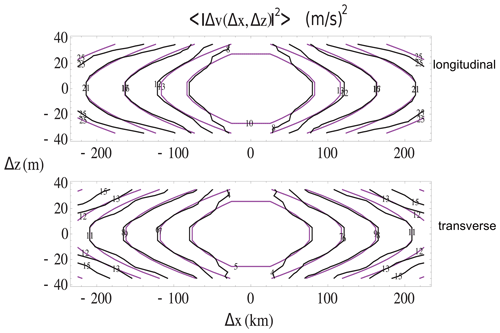

Space–time diagrams are log-time–log-space plots for the ocean (Stommel, 1963, Fig. 20, left) and the atmosphere (Orlanski, 1975, Fig. 20, right). They highlight the conventional morphologies, structures, and processes typically indicated by boxes or ellipses in the space–time regions in which they have been observed. Since the diagrams refer to the lifetimes of structures co-moving with the fluid, these are Lagrangian space–time relations. The Eulerian (fixed-frame) relations are discussed in the next section.

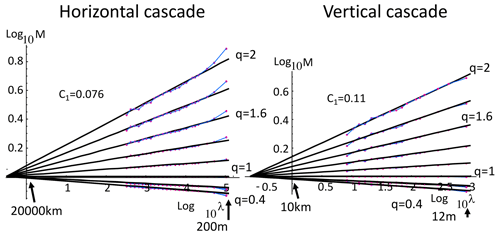

A striking feature of these diagrams, especially in Orlanski's atmospheric version (Fig. 20, right panel) but also in the updates (Fig. 21), is the near-linear (i.e. power-law) arrangement of the features. As pointed out in Schertzer et al. (1997a), in the case of Orlanski's diagram, the slope of the line is very close to the theoretically predicted value 2/3. This is the value that holds if the atmosphere respects (anisotropic) Kolmogorov scaling in the horizontal, $\Delta v(l) = \varepsilon^{1/3} l^{1/3}$, where ε is the power per mass, l is the horizontal length scale, and Δv(l) is the typical velocity difference across a structure of size l. In the scaling “inertial” range where this relationship holds, the lifetime τ of a structure is given (if only on dimensional grounds) by $\tau = l/\Delta v(l)$. This implies the lifetime–size relation

$$\tau = \varepsilon^{-1/3}\, l^{2/3}. \tag{10}$$

In isotropic turbulence, this is a classical result, yet it was first applied to the anisotropic Kolmogorov law (and hence up to planetary scales) in Schertzer et al. (1997a). Equation (10) predicts both the exponent (the log–log slope) and – if we know ε – the prefactor (Figs. 20–22; see Lovejoy et al., 2001).
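Equation (10) is easy to evaluate numerically. The sketch below (a hypothetical helper, not from the original analyses) computes τ = l/Δv(l) with Δv(l) = ε^{1/3} l^{1/3}, using ε = 1 mW kg⁻¹ = 10⁻³ m² s⁻³ (the large-scale mean value discussed in Sect. 2.6.2) over a range of horizontal scales up to the 20 000 km half-circumference used in Fig. 20.

```python
SECONDS_PER_DAY = 86_400.0

def lifetime(l, eps):
    """Lifetime (s) of a structure of horizontal size l (m) for energy flux eps (W/kg).

    Uses Delta v(l) = eps**(1/3) * l**(1/3) and tau = l / Delta v(l),
    i.e. tau = eps**(-1/3) * l**(2/3), Eq. (10).
    """
    dv = eps ** (1.0 / 3.0) * l ** (1.0 / 3.0)
    return l / dv

EPS_ATMOSPHERE = 1e-3   # W/kg (= m^2 s^-3), large-scale mean value
L_PLANET = 2.0e7        # m, half-circumference of the Earth (20 000 km)

for l in (1e3, 1e5, 1e6, L_PLANET):
    print(f"l = {l:8.0e} m -> tau ≈ {lifetime(l, EPS_ATMOSPHERE) / SECONDS_PER_DAY:7.3f} d")

# The log-log slope is 2/3: a 1000-fold increase in size gives a 100-fold longer lifetime.
print(lifetime(1e6, EPS_ATMOSPHERE) / lifetime(1e3, EPS_ATMOSPHERE))
```

At the planetary scale this gives roughly 8.5 d, consistent with the ≈10 d lifetime of planetary structures; a 1 km eddy lives about 1000 s (Δv ≈ 1 m s⁻¹).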

Figure 20. The original space–time diagrams (Stommel, 1963, ocean, left; Orlanski, 1975, the atmosphere, right). The solid red lines are theoretical lines assuming the horizontal Kolmogorov scaling with the measured mean energy rate densities indicated. The dashed red lines indicate the size of the planet (half-circumference 20 000 km), where the timescale at which they meet is the lifetime of planetary structures (≈10 d in the atmosphere, about 6 months in the ocean). It is equal to the weather–macroweather and ocean weather–ocean macroweather transition scales, and it is also close to the corresponding deterministic predictability limits.

Figure 21. The original figures are space–time diagrams for the ocean (left) and atmosphere (right) from Ghil and Lucarini (2020); note that space and time have been swapped as compared to Fig. 20. As in Fig. 20, solid red lines have been added, showing the purely theoretical predictions. On the right, a solid blue line was added showing the planetary scale. The dashed red line (also added) shows the corresponding lifetimes of planetary structures (the same as in Fig. 20). We see once again that wide-range horizontal Kolmogorov scaling is compatible with the phenomenology, especially when taking into account the statistical variability of the space–time relationship itself, as indicated in Fig. 22.

Figure 22. A space–time diagram showing the effects of intermittency and, for the oceans, the deep currents associated with very low ε. The original was published in Steele (1995), with solid reference lines added in Lovejoy et al. (2001), and the dashed lines were added in a further update in Lovejoy and Schertzer (2013). The central black lines indicate the mean theory (i.e. with the values of ε, in W kg⁻¹, appropriate for deep water, left and right). The central dashed lines (surface layers) represent the corresponding surface-layer values of ε (in W kg⁻¹). The lines to the left and right of the central lines represent the effects of intermittency with exponent C1=0.25 (slopes 0.75 and 0.59; see Sect. 3): this corresponds to roughly 1 standard deviation variation of the singularities in the velocity field. Reproduced from Lovejoy and Schertzer (2013).

2.6.2 The atmosphere as a heat engine: space–time scaling and the weather–macroweather transition scale

Thinking of the atmosphere as a heat engine that converts solar energy into mechanical energy (wind) allows us to estimate ε directly from first principles. Taking into account the average albedo and averaging over day, night, and the surface of the globe, we find that solar heating is ≈238 W m⁻². The mass of the atmosphere is ≈10⁴ kg m⁻², so that the heat engine operates with a total power of 23.8 mW kg⁻¹. However, heat engines are never 100 % efficient, and various thermodynamic models (e.g. Laliberté et al., 2015) predict efficiencies of a few percent. For example, an engine at about 300 K that operates over a range of 12 K has a Carnot efficiency of 4 %.
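The heat-engine arithmetic can be checked in a few lines. This minimal sketch uses only the numbers quoted above (238 W m⁻², 10⁴ kg m⁻², an engine near 300 K spanning 12 K) and takes the Carnot efficiency as ΔT/T_hot; multiplying the efficiency by the available power per unit mass gives an order-of-magnitude estimate of ε.

```python
SOLAR_HEATING = 238.0   # W m^-2, global day-night average absorbed solar heating
COLUMN_MASS = 1.0e4     # kg m^-2, mass of the atmosphere per unit area

power_per_mass = SOLAR_HEATING / COLUMN_MASS   # W/kg available to the engine
print(f"available power ≈ {power_per_mass * 1e3:.1f} mW/kg")

def carnot_efficiency(t_hot, delta_t):
    """Carnot efficiency 1 - T_cold/T_hot, written as delta_t / t_hot."""
    return delta_t / t_hot

eta = carnot_efficiency(300.0, 12.0)
print(f"Carnot efficiency ≈ {eta:.0%}")
print(f"implied eps ≈ {eta * power_per_mass * 1e3:.2f} mW/kg")
```

The implied ε is just under 1 mW kg⁻¹, the same order as the direct wind-based estimates.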

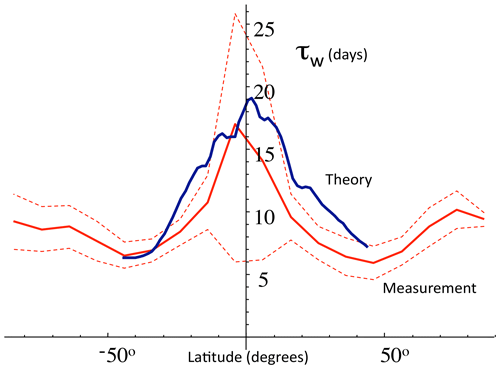

On Earth, direct estimates of ε from wind gradients (via the horizontal Kolmogorov law) find large-scale average values of ≈1 mW kg⁻¹, implying an efficiency of (1 mW kg⁻¹)/(23.8 mW kg⁻¹) ≈ 4 % and confirming the theory (Lovejoy and Schertzer, 2010c). The values 1 mW kg⁻¹ and 23.8 mW kg⁻¹ are global averages; there are systematic latitudinal variations, and Fig. 23 confirms that the theory works well at each latitude.
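Inverting the horizontal Kolmogorov law Δv(l) = ε^{1/3} l^{1/3} gives ε = Δv(l)³/l, so a velocity difference across a known distance yields an estimate of ε. In the sketch below, the velocity difference (10 m s⁻¹) and distance (1000 km) are hypothetical round numbers chosen for illustration, not measurements; dividing the result by the 23.8 mW kg⁻¹ of available power gives the corresponding efficiency.

```python
AVAILABLE_POWER = 23.8e-3   # W/kg, global-mean solar power per unit mass of air

def eps_from_wind(dv, l):
    """Invert Delta v = eps**(1/3) * l**(1/3): eps = dv**3 / l (W/kg)."""
    return dv ** 3 / l

eps = eps_from_wind(10.0, 1.0e6)      # hypothetical: 10 m/s across 1000 km
efficiency = eps / AVAILABLE_POWER
print(f"eps ≈ {eps * 1e3:.2f} mW/kg, efficiency ≈ {efficiency:.1%}")
```

These illustrative numbers give ε = 1 mW kg⁻¹ and an efficiency of about 4 %. Because ε depends on the cube of Δv, real estimates average many such differences over many scales rather than relying on a single pair.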

Using the value ε≈1 mW kg⁻¹ and the global length scale $L_e$ gives a maximum lifetime of τ≈10 d (this is where the theoretical lines intersect the Earth scale in the atmospheric Stommel diagrams). For the surface ocean currents, as reviewed in Chap. 8 of Lovejoy and Schertzer (2013), ocean drifter estimates yield ε≈10⁻⁸ W kg⁻¹, implying a maximum ocean gyre lifetime of about 6 months to 1 year. Deep ocean currents have much smaller values (down to 10⁻¹⁵ W kg⁻¹ or less) that explain the right-hand side of the Stommel diagram (Fig. 22). This diagram indicates these values, and the theoretical slope fits the phenomenology well. The figure also shows the effect of intermittency (Sect. 3.3), which implies a statistical distribution of exponents about 2/3 (2/3 is simply the exponent of the mean); the width of this distribution is also estimated theoretically and shown in the plot, thus potentially explaining the statistical variations around the mean behaviour.
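Applying the lifetime–size relation τ = ε^{−1/3} l^{2/3} at the planetary scale (taken here as the 20 000 km half-circumference) to the three ε values discussed above gives the corresponding maximum lifetimes. This is a sketch only: with ε = 10⁻⁸ W kg⁻¹ it gives about 1 year, the upper end of the quoted range, and the result shifts with the choice of ε and length scale. The deep-ocean value 10⁻¹⁵ W kg⁻¹ is an illustrative choice, since the text allows smaller values.

```python
SECONDS_PER_YEAR = 365.25 * 86_400.0
L_PLANET = 2.0e7   # m, half-circumference used in Fig. 20

def lifetime(l, eps):
    """tau = eps**(-1/3) * l**(2/3) in seconds; l in m, eps in W/kg."""
    return eps ** (-1.0 / 3.0) * l ** (2.0 / 3.0)

EPS_VALUES = {
    "atmosphere": 1e-3,      # W/kg, large-scale mean
    "surface ocean": 1e-8,   # W/kg, drifter-based estimate
    "deep ocean": 1e-15,     # W/kg, illustrative value for deep currents
}

lifetimes_yr = {name: lifetime(L_PLANET, eps) / SECONDS_PER_YEAR
                for name, eps in EPS_VALUES.items()}
for name, tau in lifetimes_yr.items():
    print(f"{name:13s} tau ≈ {tau:.3g} yr ({tau * 365.25:.3g} d)")
```

Each factor of 1000 decrease in ε lengthens the lifetime tenfold, which is why the very weak deep currents occupy the long-time, right-hand side of the diagram.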

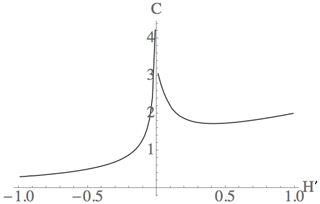

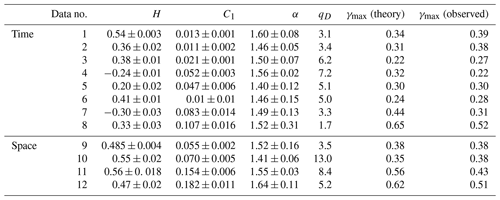

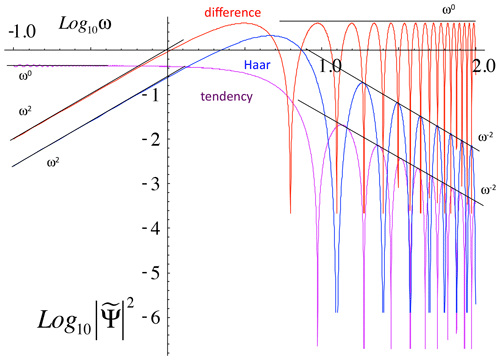

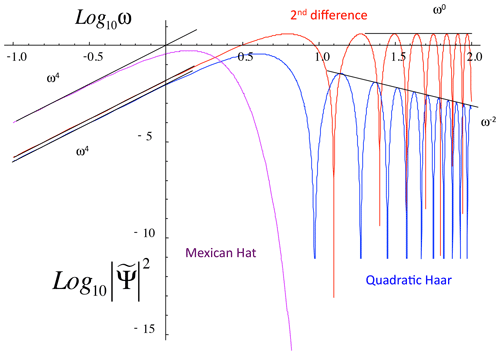

In space, up to planetary scales, the basic wind statistics are controlled by ε; hence, up to τw, ε also determines the corresponding temporal statistics. Beyond this timescale, we are considering the statistics of many planetary-scale structures. That the problem becomes a statistical one is clear, since the lifetime in this anisotropic 23/9D turbulence is essentially the same as its predictability limit, the error-doubling time for the l-sized eddies (e.g. Chap. 2 of Lovejoy and Schertzer, 2013). Had the atmosphere been perfectly flat (or “layerwise flat” as in quasi-geostrophic 2D turbulence), its predictability limit would have been much longer (e.g. Chap. 2 of Lovejoy and Schertzer, 2013). Therefore, at this transition scale, even otherwise deterministic GCMs become effectively stochastic. Since the longer timescales are essentially averages of large-scale weather, this regime has been dubbed “macroweather”.