the Creative Commons Attribution 4.0 License.

the Creative Commons Attribution 4.0 License.

Explaining the high skill of reservoir computing methods in El Niño prediction

Francesco Guardamagna

Claudia Wieners

Henk A. Dijkstra

Accurate prediction of the extreme phases of the El Niño–Southern Oscillation (ENSO) is important to mitigate the socioeconomic impacts of this phenomenon. It has long been thought that prediction skill was limited to a 6-month lead time. However, machine learning methods have shown to have skill at lead times of up to 21 months. In this paper, we aim to explain for one class of such methods, i.e. reservoir computers (RCs), the origin of this high skill. Using a conditional nonlinear optimal perturbation (CNOP) approach, we compare the initial error propagation in a deterministic Zebiak–Cane (ZC) ENSO model and that in an RC trained on synthetic observations derived from a stochastic ZC model. Optimal initial perturbations at long lead times in the RC involve both sea surface temperature and thermocline anomalies, which leads to decreased error propagation compared to the ZC model, where mainly thermocline anomalies dominate the optimal initial perturbations. This reduced error propagation allows the RC to provide a higher skill at long lead times than the deterministic ZC model.

- Article

(3112 KB) - Full-text XML

- BibTeX

- EndNote

The El Niño–Southern Oscillation (ENSO) phenomenon, driven by ocean–atmosphere interactions in the tropical Pacific, is one of the biggest sources of interannual climate variability (Neelin et al., 1998). The full ENSO cycle shows an irregular period of 2–7 years. During its warm (El Niño) and cold (La Niña) phases, ENSO strongly affects the climate all over the globe through well-known teleconnections (McPhaden et al., 2006), increasing the incidence of extreme weather events such as global droughts (Yin et al., 2022) and tropical cyclones (Wang et al., 2010). ENSO can therefore have a substantial impact on the worldwide economy (Liu et al., 2023a), and accurate and reliable forecasts are necessary to mitigate its socioeconomic consequences.

For this reason, ENSO modelling and forecasting have been a central topic of extensive research, which, thanks to the contribution of the Tropical Ocean–Global Atmosphere programme, led to the development of a complete hierarchy of models. This hierarchy includes conceptual models (Jin, 1997; Suarez and Schopf, 1988; Takahashi et al., 2019; Timmermann et al., 2003), intermediate complexity models (Zebiak and Cane, 1987; Battisti and Hirst, 1989), and global climate models (Planton et al., 2021). Many of these classical dynamical models can reasonably forecast ENSO for up to a lead time of 6 months, with a correlation between predictions and observations larger than 0.5 (Barnston et al., 2012), but their skill rapidly decreases for longer lead times.

In recent years, the application of machine learning (ML) techniques for predicting ENSO has significantly advanced (Bracco et al., 2024). Ham et al. (2019) showed that convolutional neural networks (CNNs) trained with CMIP5 and reanalysis data could obtain reasonable skill at lead times of up to about 17 months. Hu et al. (2021) advanced the CNN approach by integrating dropout and transfer learning with a residual CNN, obtaining a good performance for a lead time of up to 21 months. Long short-term memory (LSTM) networks, able to exploit the temporal dynamics present in the training data, have also been successfully applied to ENSO forecasting (Xiaoqun et al., 2020). More recent studies have combined LSTMs with other methods such as graph neural networks (Jonnalagadda and Hashemi, 2023), CNNs (Mahesh et al., 2019) and autoencoders (Jonnalagadda and Hashemi, 2023) to create hybrid models boosting the performance as they are able to capture both the spatial and the temporal dynamics present in the data. Reservoir computer (RC) methods, a special class of recurrent neural networks (RNNs), have shown optimal performance in predicting ENSO (Hassanibesheli et al., 2022). The RC offers a good balance between performance and model simplicity, which enhances explainability and facilitates analysis of model predictions. Moreover, like other RNN-based models, the RC offers the possibility of generating a self-evolving system that does not rely on external inputs (Guardamagna et al., 2024). This characteristic is crucial to understanding the internal dynamics of the RC and the evolution of errors over time during forecasting.

All these new tools provide more accurate forecasting skills than classical dynamical models, especially for longer lead times, and seem to be able to circumvent the spring predictability barrier (SPB). The SPB (Webster and Yang, 1992; Lau and Yang, 1996) has been identified and documented across all of ENSO's dynamical model hierarchy from conceptual models (Jin and Liu, 2021a, b; Jin et al., 2021) to comprehensive general circulation models (GCMs; Duan and Wei, 2013). In particular, in the intermediate-complexity Zebiak–Cane (ZC) model (Zebiak and Cane, 1987), the SPB has been rigorously studied and quantified using the conditional nonlinear optimal perturbation (CNOP) framework (Mu et al., 2007). This tool has been applied to investigate the sensitivity of the ZC model to both initial conditions (Duan et al., 2013) and model parameters (Yu et al., 2014) uncertainties. Thus, the ZC model is an excellent test bed to analyse why ML algorithms can have skill beyond the SPB, providing good predictions even when initialized during boreal spring.

In this paper, we aim to explain the good performance of RC methods in ENSO prediction. Specifically, we compare the evolution of optimal initial perturbations, determined using the CNOP approach, between the RC (trained with synthetic observations from the stochastic ZC model) and the deterministic ZC model. In Sect. 2, we shortly describe the ZC model and the CNOP technique, focusing on the changes introduced to adapt them to our analysis; in addition, the RC approach is briefly presented. In Sect. 3, we first assess the performance of the RC and then present results of the CNOP analysis for both the RC approach and the ZC model. A summary and discussion of the results follow in Sect. 4.

2.1 Zebiak–Cane (ZC) model

The ZC model is an intermediate-complexity ENSO model that describes the evolution of anomalies with respect to a prescribed seasonal mean climatological state across the tropical Pacific. The state vector of this model consists of two-dimensional fields of sea surface temperature, thermocline depth, oceanic and atmospheric velocities, and atmospheric geopotential. For a complete description of the model's components and equations, we refer the reader to Zebiak and Cane (1987). We use both the original deterministic ZC model and a stochastic ZC model following the approach described in Roulston and Neelin (2000). In this stochastic version, only noise in the zonal wind-stress field is applied as follows. First, a linear regression (LR) model relating sea surface temperature (SST) anomalies and surface zonal wind-stress anomalies was constructed empirically from observations using the ORAS5 dataset (Copernicus Climate Change Service, 2021) over the period between 1961 and 1991 with a time step of 10 d (corresponding to the ZC model time step). Next, the variability explained by this linear model was subtracted from the total zonal wind-stress field to obtain the residual zonal wind-stress anomalies. The first empirical orthogonal function (EOF) of this residual (Fig. A1 in Appendix A) shows a strong component over the eastern Pacific. In Feng and Dijkstra (2017), the first two EOFs were included, where the second EOF captures the westerly wind bursts, but to keep the spatial noise structure simple, we only included the first EOF. Finally, the principal component (PC) related to the first EOF was fitted to a first-order autoregressive model:

where ϵt is a white noise term following a Gaussian distribution with a zero mean and unit variance (), while a and b are the fitted parameters. This fitted first-order autoregressive model was used during integration to generate a different (random) zonal wind-stress anomaly pattern at each time step.

There is still a debate on whether the Pacific climate state is in a subcritical or supercritical regime (Kessler, 2002; Guardamagna et al., 2024). This distinction hinges on whether ENSO variability is a damped oscillation excited by stochastic forcing (subcritical) or occurs as a sustained oscillation or limit cycle (supercritical). In the supercritical case, ENSO behaviour is strongly influenced by nonlinearities, which arise from three main sources in the ZC model: heat advection, wind-stress anomalies, and subsurface water temperature variations (Duan et al., 2013). Given this ongoing debate, we study both regimes here, which can be easily distinguished in the ZC model by varying a single parameter. Following Tziperman et al. (1994), we use a parameter rd in the drag coefficient , where is the standard value in the ZC model. Given the zonal and meridional wind velocities , the ZC model computes the wind stress (τx,τy) acting on the ocean surface according to the following bulk formula:

where ρair is the air density and rd=1 is the original model configuration (Zebiak and Cane, 1987). With increasing rd, the ZC model generates a larger wind-stress response to sea surface temperature anomalies, intensifying the coupling strength between ocean and atmosphere.

In the deterministic version of the ZC model, an initial anomaly on the seasonal background state rapidly decays for rd=0.79. In contrast, for rd=0.8, ENSO variability occurs as a periodic solution with a ∼4-year period (Fig. A2). Hence, the Hopf bifurcation bounding the two regimes is located between rd=0.79 and rd=0.8; here, we choose rd=0.77 as a value in the subcritical regime and rd=0.9 in the supercritical regime. When noise is introduced, the ZC model's ENSO is phase-locked in the winter season (Fig. A3) for both rd=0.77 and rd=0.9. The SPB is identified with the initial month corresponding to the fastest decrease in autocorrelation in eastern Pacific SST anomalies (Jin and Liu, 2021a). According to this definition, the ZC model shows a clear SPB in May for both rd=0.77 and rd=0.9 (Fig. A4). All these aspects make the ZC model a good test bed for understanding why the RC can circumvent the SPB in both the subcritical and supercritical regime.

2.2 Reservoir computer

Although the procedure to generate an RC has been well described elsewhere (Pathak et al., 2018), we briefly summarize the approach here, while also introducing our notation. Given an input signal , where Nt is the total number of time steps, and a given output signal , the RC has to learn how to estimate an output signal as similar as possible to ytarget(n). To do that during the training procedure, an error measure E(y,ytarget) is minimized, for which we choose a common measure for regression problems: the mean squared error (MSE) defined by

Before the training procedure, the input data u(n) are nonlinearly expanded into a higher-dimensional so-called reservoir space, generating in this way a new signal . This new representation of the data also contains temporal information and is based on the following update equations:

where the hyperbolic tangent (tanh) is applied component-wise. Including a nonlinear activation function such as tanh in the update equations enables the RC to estimate nonlinear relationships among the input variables in contrast to less sophisticated models such as the linear regressor, which can only capture linear relationships. This gives the RC an advantage in scenarios where nonlinearities play a significant role. The two matrices and are generated randomly according to chosen hyperparameters. The non-zero elements of W and Win are sampled from a uniform distribution over the range . The sparse matrix W derives from a random network with mean degree , while Win is a dense matrix. The quantity in Eq. (4b) is the leaking rate. The output layer is defined as y(n)=Woutx(n), where , and during the training procedure, only the weights of Wout are estimated by minimizing E(y,ytarget) through a linear regression procedure. We use a ridge regression to avoid overfitting, leading to the loss function ℒ:

The hyperparameters are given by the dimension of the reservoir (Nx), the spectral radius of the matrix W (ρ), the sparsity of W's connections , the input scaling a, and the leaking rate α. Given an input sequence u(n)=ytarget(n), the RC is trained by determining Wout from the sequence using the loss function (Eq. 5).

After training, the RC can be transformed into an autonomous evolving dynamical system to be used for prediction (Pathak et al., 2018). Thereto feedback connections between the outputs at time step n and the inputs at the subsequent time step are introduced. In this way, a model is generated that autonomously evolves in time according to

where x(n) and x(n+1) are the reservoir states at time step n and n+1, while y(n) is the output at time step n, and u(n+1) is the input at the subsequent time step n+1. This property of the RC allows us to make predictions similar to classical dynamical systems. Consequently, we can study how an initial perturbation evolves in the RC.

In the results below, the input vector u consists of the following feature variables: the NINO3 index, the thermocline depth anomalies hW and hE averaged over the regions 5–5° S, 120–180° E, and 5° N–5° S, 180–290° E, respectively, and the zonal surface wind-speed anomalies τC averaged over the area 5° N–5° S, 145–190° E. Instead of directly using zonal surface wind-stress anomalies, zonal surface wind-speed anomalies are used as a proxy. The two variables are inherently correlated through the bulk formula (Eq. 2) and therefore convey similar information. However, a key distinction arises from how noise is introduced in the ZC model, specifically in the form of random zonal wind-stress anomalies. This leads to random local fluctuations in the zonal wind-stress signal, which are inherently difficult for the RC to predict and reproduce. In contrast, the surface wind-speed anomaly signal is smoother and more predictable, making it easier for the RC to learn and generalize efficiently.

In addition to the previous variables, a sine signal with a 12-month period was included to represent the seasonal cycle such that Nu=5. Although a combination of sine and cosine signals is required to uniquely identify each month of the year, we found that including both made little difference in performance. Therefore, to minimize the number of input variables and reduce the complexity of the learned function, we decided to use only the sine signal. The output vector consists of the same variables as in the input except for the sine signal; hence, Ny=4. In self-evolving mode, the sine signal encoding the seasonal cycle is provided as an external input rather than generated directly by the RC.

2.3 CNOP computation

Our implementation of the CNOP methodology follows the one described by Duan et al. (2013). Let be the propagator of a nonlinear model from initial time t0 to a chosen end time te. We indicate v0 as the initial perturbation superimposed on the model's background state V0 at time t0. For a selected norm , an initial perturbation v0δ is defined as a CNOP if and only if

where C(v0) is the constraint condition and represents the model state at time t when the integration starts from the background state V0 at time t0. In Duan et al. (2013), an initial perturbation is applied to all the grid points over the tropical area, and the constraint condition to the initial perturbation amplitude C(v0) is defined as

where and are the initial sea surface temperature anomalies (SSTA) and thermocline depth anomalies, respectively, at grid point (i,j). The weights wT=2 °C and wh=50 m represent the characteristic scale of SST and thermocline depth anomalies, respectively.

As mentioned in Sect. 2.2, the RC is trained using a limited feature vector. To ensure a fair comparison of CNOPs between those of the RC and the ZC models, the tropical area of the ZC model is divided into boxes and uniform perturbations are applied over those boxes. Specifically, we apply a uniform SSTA perturbation over all the grid points in the NINO3 area (5° N–5° S, 210–270° E); a uniform thermocline depth perturbation to all the grid points in the area 5° N–5° S, 120–180° E; and a uniform thermocline depth perturbation to all the grid points in the area 5° N–5° S, 180–290° E. The constraint condition can then be written as

For both the RC and the ZC model, the objective function J(v0) in Eq. (7b) has been defined as the root squared error (RSE) between the perturbed and background trajectories. Specifically, if we define the NINO3 index value at time t when the integration start from the initial state V0 as NINO3(t,V0), the objective function J(v0) is defined as

where tN=te. To solve the optimization problem associated with determining the CNOP, we use the gradient-free COBYLA optimization algorithm (Powell, 1994). Since the COBYLA algorithm starts its optimization process from a random initial guess, we always perform 10 different realizations starting from 10 different initial guesses to select the CNOPs that shows the largest error propagation according to the value of J(v0); a detailed description of the COBYLA algorithm is reported in Appendix B.

In the Results section, we first explain the training and validation of the RC (Sect. 3.1), demonstrate the forecasting skills of the RC (Sect. 3.2) while also demonstrating the importance of the zonal surface wind velocity anomalies as a training variable. Next, in Sect. 3.3, we present the results of the CNOP analysis for both the RC and deterministic ZC models.

3.1 Training and validation of the RC

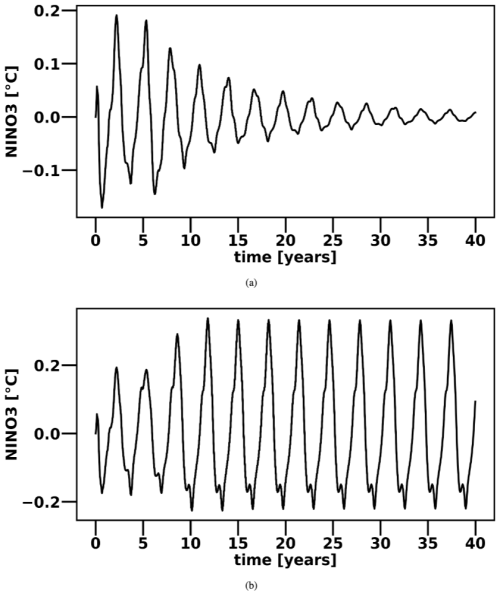

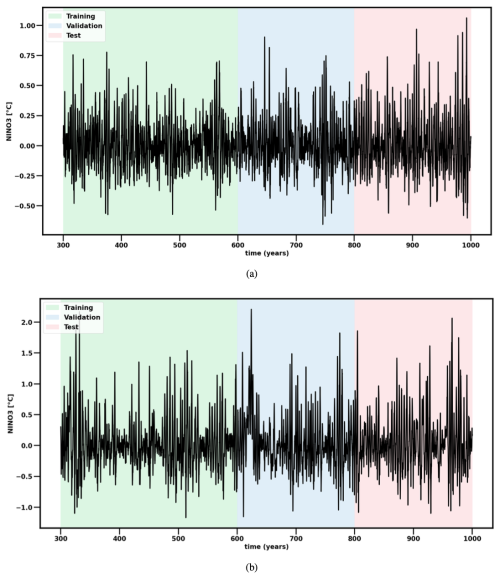

For both subcritical (rd=0.77) and supercritical (rd=0.9) regimes, we first performed a simulation of 1000 years with the stochastic ZC model using a time step of 10 d. We refer to these data as synthetic observations. The NINO3 amplitudes of the supercritical case (Fig. 1b) are, as expected, about a factor of 2 larger than those of the subcritical case (Fig. 1a). As mentioned in Sect. 2.2, the 12-month-period sine signal and the feature vector components hW, hE, τC, and NINO3 (extracted from the synthetic observation time series) are used to train the RC. To investigate the effect of τC on the performance of the RC, we also trained a second RC using only hW, hE, NINO3, and the sine signal. Before training, NINO3 and both hW and hE have been normalized by wT=2 °C and wh=50 m, respectively. From the total 1000 years of synthetic observations, the first 300 years were discarded to avoid capturing any initial transient behaviour. The next 500 years were used for training and validation (300 years for training and 200 years for validation), and the last 200 years were used for testing, ensuring an independent evaluation of the RC model performance. The training of the RC is described in Sect. 2.2, where, given an input sequence u(n)=ytarget(n), Wout is determined from the sequence using the loss function (Eq. 5).

Figure 1NINO3 index from the last 700 years of the stochastic ZC model simulations (synthetic observations) used to train, validate, and test the RC model: (a) rd=0.77 and (b) rd=0.9.

To determine the performance of the RC, we use the RC in self-evolving mode (Sect. 2.2) to make predictions using a time step of 10 d. When we let the RC self-evolve, the only external information we provide is the value of the sine signal representing the current month of the year. All the other variables (NINO3, hE, hW, and τC when the latter is included as a training variable) are directly produced by the output of the RC and are not provided as external information during prediction. To evaluate the RC's performance over the entire 200 years of validation trajectories for different lead times, we adopt a rolling approach. For each time step t(n) in the validation dataset, an RC trajectory with a specific lead time is generated. The final values of each trajectory, corresponding to the lead time of interest, are then concatenated to form a complete 200-year trajectory, say yfull. Before performing inference, we always determine the internal RC state using 5 years of data prior to the time step t(n). Discarding the initial x(n) RC states for is a common practice in reservoir computing. This step is necessary to mitigate the impact of initial transients caused by the arbitrary initialization of the reservoir state, which are typically set to x(0)=0 or randomly initialized. In our case, we set x(0)=0. This initialization creates an artificial starting state that is unlikely to recur once the reservoir dynamics stabilize. A warm-up period is, therefore, necessary to allow the RC to reach a stable dynamical regime. The length of this warm-up period depends on the RC's memory capacity and the specific learning task. Based on our experiments, we found that a 5-year warm-up period is sufficient to stabilize the reservoir dynamics and eliminate the effects of initial transients. As a result, the reservoir states corresponding to the 5 years of data preceding the time step t(n) are used to initialize the RC internal state and then discarded. In our notation, this means discarding the x(n) reservoir states for , where ntransient=180 given our 10 d time step.

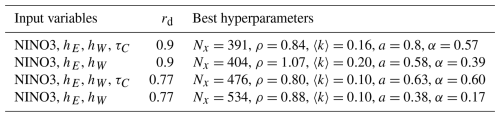

To identify the best set of hyperparameters, a separate validation procedure was conducted for each regime (rd=0.77 and rd=0.9) and for each set of training variables (including and excluding the zonal surface wind-speed anomalies) using a Bayesian search. For each hyperparameter set, the RC model's 18-month lead time predictions were evaluated using the root mean square (RMS) error computed including all feature variables in yfull. The latter was done to ensure that the RC model could replicate the synthetic observations for all variables of interest rather than simply replicating the NINO3 index. Among all the different hyperparameters, the reservoir dimension Nx is one of the most significant for RC's performance (Lukoševičius, 2012; Verstraeten et al., 2007). Increasing Nx expands the reservoir's state space, allowing for a richer and more complex high-dimensional representation of the input signal u(t) (see Sect. 2.2). Additionally, larger Nx values increase the reservoir's memory capacity.

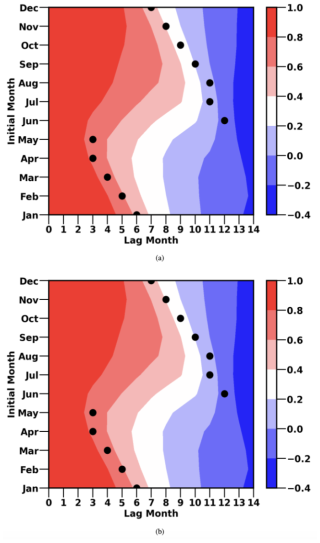

During our experiments, the Bayesian search has consistently converged on large Nx values, with a notable difference between the supercritical and subcritical regimes. In the supercritical regime, the optimal reservoir dimension is approximately 400, regardless of whether the variable τC is included during training. In the subcritical regime, the optimal dimension is larger, with Nx=476 when τC is included and Nx=534 when it is excluded. Table C1 in Appendix C reports the optimal Nx values identified for each regime and input variable configuration as well as the optimal values for all other RC hyperparameters (see Sect. 2.2).

After validation, we evaluated the RC model's performance on the 200-year test set using these best hyperparameter sets, as described next.

3.2 RC performances

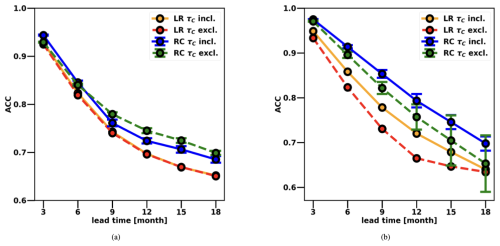

Figure 2 presents the mean and standard deviation of the anomaly correlation coefficient (ACC) of 50 different RC prediction trajectories and the target NINO3 index from the 200-year test dataset, computed at a monthly time step (so averaged over three model time steps). We evaluated the RC's ability to replicate the monthly NINO3 index rather than the 10 d time step index used for training since this is the common approach for assessing the performance of ENSO forecasting models. As the reservoir is generated by random W and Win values, each RC needs to be retrained first (using the 300-year dataset) as described in Sect. 2.2, and hence multiple RCs are used for evaluating the ACC. Again, a rolling approach (as for the validation dataset; see Sect. 3.1) was used for the test set, and hence the ACC is determined using the 200-year vector yfull.

Figure 2Mean and standard deviation of the anomaly correlation coefficient (ACC) of 50 different RC model realizations and the 200-year synthetic observations for the NINO3 index computed at a monthly time step. Results are shown for the two regimes: (a) subcritical (rd=0.77) and (b) supercritical (rd=0.9), with zonal surface wind-speed anomalies (τC) either included or excluded during training. Results from the linear regressor are also included for comparison.

In the supercritical regime (Fig. 2b), the RC model performs better when zonal surface wind-speed anomalies τC are included as a training variable, though its performance is also acceptable even when τC is excluded. On the other hand, in the subcritical regime (Fig. 2a), the RC performance for longer lead times (9 to 18 months) improves when τC is excluded during training. In this regime, ENSO is primarily driven by atmospheric noise, introduced in the Zebiak–Cane model in the form of random zonal wind-stress burst (see Sect. 2.1). When the model is initialized from ENSO neutral conditions, optimal atmospheric noise patterns can trigger transient growth of perturbations, provided the initial conditions are favourable. Conversely, if a perturbation is already developing, subsequent noise patterns can either reinforce or damp its evolution. The variable τC is therefore particularly useful for predicting the short-term variability of ENSO as it provides critical information about external forcing that influences early perturbation dynamics. Accordingly, in the subcritical regime, the RC achieves better performances at shorter lead times (3–6 months) when τC is included. At longer lead times (9–18 months), improved predictive performance requires the RC to rely more on system internal dynamics rather than the short-term influence of stochastic noise. Including τC during training can lead to overfitting, causing the model to focus excessively on short-term noise patterns instead of learning the internal system dynamics. In the supercritical regime, nonlinearities play an essential role (see Sect. 2.1). In the ZC model, these nonlinearities arise from three main sources: heat advection, wind-stress anomalies, and water temperature variations (Duan et al., 2013), all of which influence the evolution of ENSO. This characteristic is reflected in the RC model's performance, which exhibits improved predictive skill at both short and long lead times when τC is included during training, underscoring the importance of the nonlinear effects introduced by this variable in this regime.

Overall, the RC performs better in the supercritical regime, achieving an ACC of 0.8 at a 12-month lead time when zonal surface wind-speed anomalies are included during training. In contrast, in the subcritical regime, the RC model achieves an ACC of 0.75 at a 12-month lead time when τC is excluded during training.

To better appreciate the performance of the RC model, we also compared it with a simple linear regressor as a benchmark; results are also included in Fig. 2. The comparison between the LR and RC reveals that the performance improvement achieved by adopting the RC model is not drastic. However, the results still demonstrate a clear and consistent advantage in using the RC in both the subcritical and supercritical regimes and regardless of whether the variable τC is included during training. This improvement stems from the nonlinear activation function used in the RC (see Sect. 2.2), which enables it to capture nonlinear relationships between input variables, which is something the LR model, limited to linear approximations, cannot achieve. This advantage is particularly clear in the supercritical regime, where the RC model provides a more significant performance increase compared to the subcritical regime. This is expected, as nonlinearities play a more prominent role in the supercritical regime (see Sect. 2.1). Moreover, the relatively small performance gap between the LR and the RC can be attributed to the ZC model being a model of intermediate complexity in which ENSO is a weakly nonlinear phenomena (e.g. all wave dynamics in ocean and atmosphere is linear in the model). The ZC model's data exhibit simpler dynamics than real-world observations or simulations with more complex general circulation models (GCMs). In such cases, the performance advantage of the RC over the LR is expected to be more pronounced.

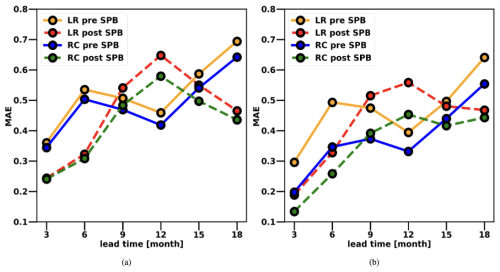

The ability of the RC model to mitigate the SPB is demonstrated in Fig. 3. This figure presents the normalized mean absolute error (MAE) between the median NINO3 of 50 different RC predictions and the corresponding target values from the synthetic observation test dataset (see Sect. 3.1) at various lead times and for both the RC initialized before the SPB in March, April, and May and after the SPB in September, October, and November. As a comparison benchmark, the normalized MAE for the linear regressor (LR) predictions is also included for the same initialization months. Additionally, to ensure a fair comparison between the subcritical and supercritical regimes, all RC and LR predictions and the corresponding target values have been normalized by the standard deviation of the 200-year synthetic observation test dataset (0.47 for the supercritical regime and 0.24 for the subcritical regime) before computing the MAE. In Fig. 3, we present results for the different input variable configurations for both the subcritical and supercritical cases. Specifically, the variable τC is excluded from the input variables in the subcritical regime but included in the input variables in the supercritical regime.

Figure 3Normalized mean absolute error (MAE) between the median of 50 different RC realizations' predictions and the 200-year synthetic observation test set for the NINO3 index computed at a monthly time step, and with the RC initialized both before (March, April, and May) and after (September, October, and November) the SPB: (a) rd=0.77, zonal surface wind-speed anomalies excluded during training. (b) rd=0.9, zonal surface wind-speed anomalies included during training. Results from the linear regressor (LR) are also included for comparison. Both predictions and target values have been normalized by the standard deviation of the 200-year synthetic observation test dataset (0.47 for the supercritical regime and 0.24 for the subcritical regime) before computing the MAE.

In both the subcritical and supercritical regimes, the RC model outperforms the LR also in terms of mean absolute error regardless of the initialization period. However, to a certain extent, it is still affected by the SPB, which occurs in May in the ZC model (as discussed in Sect. 2.1). On the other hand, the RC model demonstrates a clear ability to mitigate the effects of the SPB compared to the LR. This can be most clearly seen when comparing the pre-spring initialization performance of the two models at 3- and 6-month lead times. In the supercritical regime, with pre-SPB initialization, the RC model achieves a normalized MAE of 0.2 at a 3-month lead time and 0.35 at a 6-month lead time, while the LR shows a higher normalized MAE of 0.3 at a 3-month lead time and 0.5 at a 6-month lead time. In the subcritical regime, with pre-SPB initialization, the RC achieves a MAE of 0.34 at a 3-month lead time and 0.5 at a 6-month lead time, while the LR shows a MAE of 0.36 at a 3-month lead time and 0.54 at a 6-month lead time. In the supercritical regime, the RC shows a larger performance improvement compared to the subcritical regime, where the difference in performance with respect to the LR is less evident. Moreover, also in terms of normalized MAE, the RC performs better in the supercritical regime than in the subcritical one.

3.3 CNOP analysis

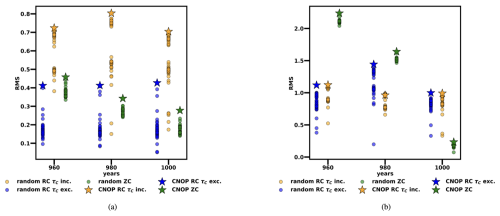

For both the RC model and the deterministic ZC model and for both rd=0.77 (subcritical regime) and rd=0.9 (supercritical regime), we computed the CNOPs for different lead times using the last 50 years of the 200-year synthetic observation test dataset as initial conditions (cf. Sect. 3.1). This choice has been made to balance computational efficiency and statistical significance. The CNOP computations using the COBYLA algorithm are highly computationally expensive, and 50 years of data is sufficient to obtain statistically significant results. By selecting the last 50 years of the 200-year test period, we also ensure complete statistical independence between the training and test data. For the RC model, perturbations were directly applied to NINO3 and mean thermocline depth anomalies (hE and hW). In contrast, for the deterministic ZC model, a uniform perturbation was applied over three different boxes in the Pacific for both SSTA and thermocline depth anomalies (as described in Sect. 2.3).

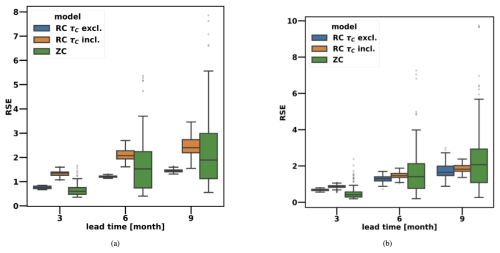

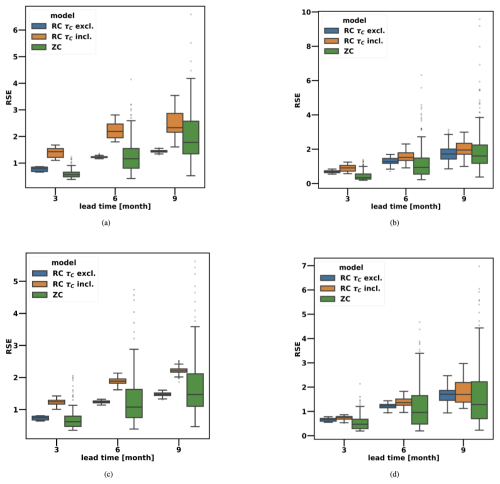

Before computing the CNOPs for the RC model, we identified and saved the best-performing RC realization out of 50 for each combination of lead time, rd value, and training variable set based on the forecasting skill for the 200-year synthetic observation test period (see Sect. 3.1). The top-performing realization (for each combination of lead time, rd value, and training variables) was then considered for the CNOP computation. This was done to avoid biases related to the random initialization of the RC. We computed the CNOPs for lead times 3, 6, and 9 months (the optimization time considered during the CNOP computation), focusing on a single constraint value δ=0.05 and a specific forecast initialization season just before the SPB, encompassing March, April, and May. The value of δ corresponds to a maximum NINO3 perturbation of 0.1 °C (Sect. 2.3) or a maximum he or hw perturbation of 2.5 m. For the deterministic ZC model, the same procedure to compute the CNOP was used (see Sect. 2.3). To quantify the divergence between two trajectories caused by the CNOPs, for both the RC and the ZC models, we computed the root square error (RSE) distance between the perturbed and unperturbed NINO3 trajectories as defined in Eq. (10). This distance was used to estimate each model's sensitivity to initial condition perturbations, given a specific initial state. To make a fair comparison between the subcritical and supercritical regimes, all the RSE distances obtained have been normalized by the standard deviation of the 50 years of NINO3 synthetic observations considered for the CNOP computation (0.29 for the subcritical regime and 0.56 for the supercritical regime).

In the supercritical regime (Fig. 4b), the RC model is more susceptible to initial perturbations at shorter lead times. However, at a 6-month lead time, the RC model's sensitivity to initial perturbations becomes, on average, smaller when τC is excluded during training and similar to that of the deterministic ZC model when τC is included during training (see Table D2). At a 9-month lead time, the RC's sensitivity to initial perturbations is on average smaller for both τC included and excluded. At both 6- and 9-month lead times, the deterministic ZC model's sensitivity results show a much wider distribution than the RC regardless of whether τC is included or excluded as a training variable. In the subcritical regime (Fig. 4a), the RC model becomes more susceptible to perturbations than the deterministic ZC model when τC is included as a training variable. Conversely, when this variable is excluded, the RC model shows less sensitivity to perturbations than the deterministic ZC model. This difference is likely because including τC as a training variable causes the RC model to more easily learn the noise component of synthetic observations. Since ENSO variability in the subcritical regime is highly affected by noise, including these anomalies during training leads to a system with a larger error propagation.

Figure 4Distribution of the normalized RSE distances between the perturbed and unperturbed trajectories for different lead times when CNOPs are applied, taking as initial conditions the months of March, April, and May for (a) rd=0.77 and (b) rd=0.9. The boxes indicate the interquartile range (IQR), the range within which the central 50 % of data points are located. The whiskers extend to the minimum and maximum values within 1.5 times the IQR from the first and third quartile. The central line corresponds to the median. All the RSE distances have been normalized by the standard deviation of the NINO3 index extracted from the 50 years of synthetic observations considered for the CNOP computation (0.29 for the subcritical regime and 0.56 for the supercritical regime).

Previous studies (Mu et al., 2007) have quantified the SPB in the deterministic ZC model using the CNOP framework, revealing that the deterministic ZC model is particularly sensitive to initial perturbations when initialized just before the boreal spring season. Our results support this finding, showing that the deterministic ZC model exhibits a stronger sensitivity to initial condition perturbations when initialized close to the SPB than when it is initialized later in the year (see Fig. D1 in Appendix D). This also holds for summer initialization (not shown) in June, July, and August, where the models show results similar to spring initialization in March, April, and May, with the RC mitigating sensitivity to initial perturbations in a similar manner. This behaviour is due to the proximity of the summer season to the SPB. The CNOP cost function evaluates the distance between the entire perturbed and unperturbed trajectories (see Eq. 10), taking all months into account. When the deterministic ZC model integration is initialized just before the SPB, the number of months affected by the SPB is maximized, and at longer lead times (6 and 9 months), we observe a pronounced increase in the sensitivity to initial condition perturbations compared to when the model is initialized in the autumn and winter seasons. This effect is also found when comparing the sensitivities of autumn and winter initializations. Compared to an autumn-initialized trajectory, an integration initialized in winter has crossed the SPB before at a 9-month lead time, and the sensitivity to initial perturbations for an integration initialized in winter is, on average, larger than for the autumn initialization.

On the other hand, when the RC is initialized later than the SPB, it exhibits a sensitivity to initial perturbations similar to that found when it is initialized just close to the SPB (see Table D2). As the number of months affected by the SPB increases (at 6- and 9-month lead times, with a forecast initialized in spring), the RC effectively reduces both the average sensitivity to initial condition perturbations and the width of sensitivity results' distribution compared to the ZC model, consequently decreasing the number of events strongly sensitive to initial condition perturbations. The only exception is the trained RC, including the variable τC in the subcritical regime, which consistently has a greater sensitivity to initial condition perturbations than the deterministic ZC model for reasons already mentioned above. Moreover, the inclusion of this variable decreases the performance of the RC in the subcritical regime at longer lead times (see Sect. 3.2). These results demonstrate that the RC model effectively mitigates the sensitivity to initial condition perturbations at long lead times (6 and 9 months) when a forecast is initialized just before the SPB compared to the ZC model, for which the spring season corresponds to the strongest sensitivity to initial perturbations. This capability explains why the RC model can reduce the effects of the SPB, delivering skilful predictions at long lead times.

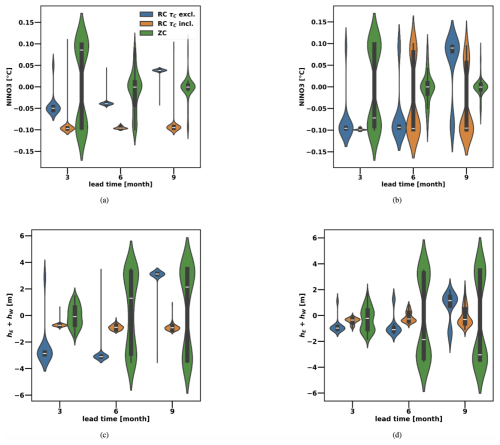

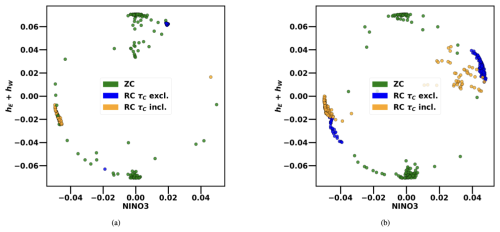

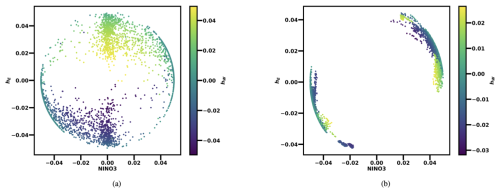

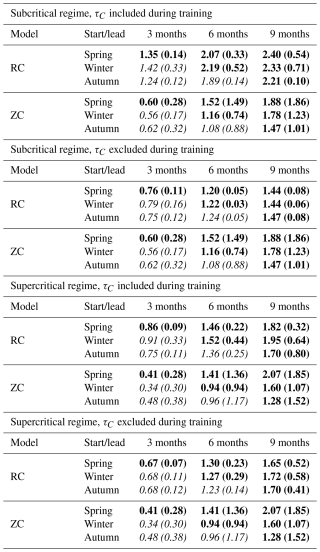

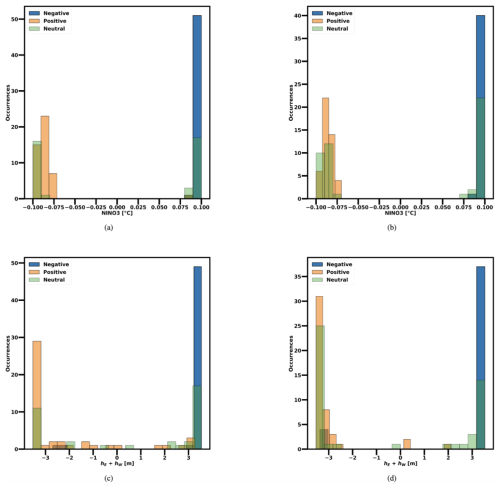

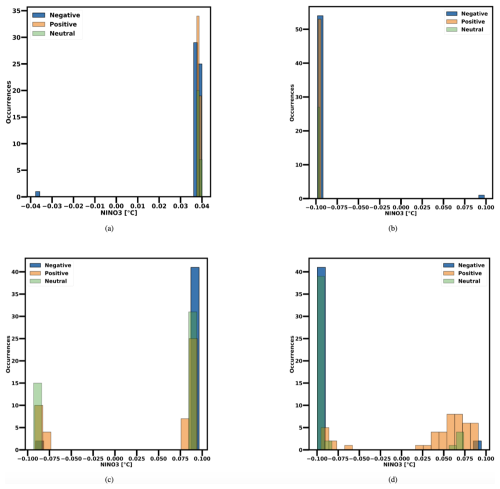

Figure 5 shows the estimated CNOPs for both the ZC and RC models when initialized just before the SPB in March, April, and May. The estimated CNOPs are presented for the NINO3 index and the sum of the thermocline perturbations in the eastern and western Pacific (hE+hW). The ZC model's sensitivity to initial NINO3 perturbations decreases as the forecasting lead time increases. In contrast, perturbations to the thermocline depth become increasingly crucial for optimal perturbation growth at longer lead times. This is true for both rd=0.77 and rd=0.9. On the other hand, the CNOPs of the RC have different behaviour for both the supercritical and subcritical regimes. The RC is sensitive to quite different initial perturbations, leading to less variability in the error propagation compared to the ZC model. This is supported by Fig. 6, which shows the distribution of the CNOPs in the (NINO3, hE+hW) plane for a 9-month lead time. For visualization purposes, the initial anomalies for NINO3 and (hE+hW) have been normalized dividing by 2 °C and 50 m, respectively (see Sect. 2.3). The CNOPs for the RC and the ZC models show a strongly different distribution. The RC model consistently exhibits greater sensitivity to NINO3 perturbations at both short (3-month) and long (6- and 9-month) lead times, while the ZC model shows increasing sensitivity to initial thermocline depth perturbations as the optimization time extends. In the ZC model, ENSO variability is strongly influenced by thermocline feedback (Zebiak and Cane, 1987). The RC reduces this sensitivity at longer lead times, helping to mitigate error propagation over time.

Figure 5Violin plots showing the distribution of the CNOPs obtained for both the NINO3 index and hE+hW (sum of the thermocline anomalies for both the western and eastern Pacific). (a, c) rd=0.77 (b, d) rd=0.9. In both cases, δ=0.05; the period considered corresponds to the last 50 years of the 200-year synthetic observation test dataset; and the months of March, April, and May are taken as initial conditions.

Figure 6Scatter plot of the CNOPs in the normalized NINO3 index, hE+hW anomaly plane. (a) rd=0.77. (b) rd=0.9. In both cases, δ=0.05; the period considered corresponds to the last 50 years of the 200-year synthetic observation test dataset; the months of March, April, and May are taken as initial conditions; and the lead time considered is 9 months. The NINO3 index and hE+hW anomalies are normalized by dividing by 2 °C and 50 m, respectively.

Another notable characteristic, visible in Fig. 5, is that the ZC model exhibits a highly symmetrical distribution for the hE+hW CNOPs at a 9-month lead time as well as for the NINO3 CNOPs at a 3-month lead time. In contrast, the RC model shows a more biased distribution for the estimated NINO3 CNOPs, particularly in the subcritical regime and at shorter lead times (3 months) in the supercritical regime. At longer lead times (9 months) in the supercritical regime, the RC model still shows a relatively symmetrical distribution for the estimated NINO3 CNOPs.

To better understand the origin of these differences, we classify the initial conditions analysed (before applying the CNOPs), spanning the months of March, April, and May into three groups based on the initial eastern Pacific sea surface temperature anomalies (SSTAs). Specifically, in the supercritical (subcritical) regime, we define an initial state as positive if the corresponding initial NINO3 index is larger than 0.2 (0.1) °C, negative if the initial NINO3 index is smaller than −0.2 (−0.1) °C, and neutral if . The initial states have been classified in a different way for the supercritical and subcritical regimes to account for the fact that in the subcritical regime, the amplitude of NINO3 is a factor of 2 smaller compared to the supercritical regime; see Sect. 3.1.

As shown in Fig. D2 in Appendix D, when the ZC model's SSTA initial condition in the eastern Pacific is characterized by a positive (negative) anomaly, the optimal initial perturbation for hE+hW for a 9-month optimization period is negative (positive) if the model is initialized before the event's peak. This limits the propagation of the anomaly, leading to a weaker El Niño (La Niña) event if the model is initialized prior to the event's peak. Conversely, if the model is initialized after the event's peak, the negative (positive) thermocline perturbation drives a faster and steeper return to neutral conditions.

When the ZC model is initialized from neutral conditions, the sign of the estimated CNOPs for hE+hW depends on the reference trajectory. If the system transition into an El Niño event, the optimal hE+hW is negative, thus dampening the positive anomaly; if it transitions into a La Niña event, the optimal hE+hW is positive, suppressing the negative anomaly.

The same reasoning applies to the 3-month-lead-time NINO3 optimal perturbations: a negative (positive) NINO3 perturbation weakens the near-term growth of an initially positive (negative) eastern Pacific SSTA.

Regarding the RC model, as shown in Fig. D3, the estimated CNOPs for the NINO3 index at a 9-month lead time in the supercritical regime exhibit a fairly symmetrical distribution overall. However, significant differences emerge depending on whether the variable τC is included during training, as well as in comparison with the ZC model. When τC is included and the RC is initialized before the peak of an event, the optimal NINO3 perturbation is positive (negative) for initial conditions characterized by a positive (negative) eastern Pacific SSTA, thereby reinforcing the initial anomaly and leading to larger El Niño (La Niña) events. In contrast, when the RC is initialized after the peak of a positive (negative) event, the optimal NINO3 perturbation reverses sign, resulting in a faster and steeper return to neutral conditions.

Neutral initial conditions tend to prefer negative NINO3 perturbations, resulting in stronger La Niña and weaker El Niño events relative to the reference trajectories. When τC is not included during training, positive SSTA initial conditions still favour positive optimal NINO3 perturbations, yielding stronger El Niño events when the model is initialized before the peak. In contrast, for events characterized by negative initial eastern Pacific SSTAs, the optimal NINO3 perturbations are positive, thereby mitigating the development of the initial negative anomaly over time. Moreover, neutral initial conditions exhibit a stronger tendency toward positive optimal NINO3 perturbations in the absence of τC.

In the subcritical regime, the optimal NINO3 perturbation at a 9-month lead time exhibits a marked asymmetry. As illustrated in Fig. D3, including τC during training leads to consistently negative optimal perturbations across all three categories of initial conditions, whereas excluding τC results in positive optimal perturbations for every category. At shorter lead times (3 months), in both subcritical and supercritical regimes and regardless of whether τC is included during training, the RC model clearly prefers negative optimal NINO3 perturbations. These results highlight the influence of τC on the estimated CNOPs, particularly in the subcritical regime. Further evidence of τC's impact in both supercritical and subcritical regimes appears when examining another result shown in Fig. 5, where we find that excluding zonal surface wind-speed anomalies during training in the subcritical regime causes the RC model to exhibit greater sensitivity to thermocline depth perturbations at longer lead times compared to the supercritical regime and to the subcritical regime when τC is included during training. However, even in this scenario, the RC remains less sensitive to thermocline depth anomalies than the ZC model. In the subcritical regime, ENSO variability is primarily driven by atmospheric noise, introduced as stochastic wind-stress forcing. This noise affects the thermocline slope, activating mechanisms that lead to the development of perturbations. When the variable τC is included during training, the RC explicitly learns the relationship between wind-stress anomalies and thermocline adjustments, and the state of the surface winds is provided as an initial condition. Consequently, smaller thermocline perturbations can be amplified by wind-stress anomalies, resulting in larger deviations from the unperturbed trajectory. As a result, the optimal initial perturbations primarily target the NINO3 index, favouring negative NINO3 perturbations, while the optimal hE+hW perturbations are only slightly negative and closer to zero following the same trend as the NINO3 CNOPs. The impact of these optimal perturbations varies based on the initial conditions. When the RC is initialized with positive or slightly positive (neutral) eastern Pacific SSTA, negative NINO3 and hE+hW perturbations suppress or weaken the evolution of positive initial anomalies, resulting in a faster return to neutral conditions. Conversely, when the RC is initialized with negative or slightly negative (neutral) eastern Pacific SSTA, negative NINO3 perturbations amplify the evolution of negative anomalies, leading to either a stronger La Niña event or a longer persistence of La Niña conditions if the RC is initialized after the peak.

When τC is not included, the RC learns only the direct relationship between NINO3 and thermocline depth anomalies without explicit knowledge of how wind anomalies influence thermocline slope adjustments. As a result, in the absence of wind-forcing information, larger initial thermocline perturbations are required to induce significant error growth over time. Under these conditions, the optimal initial perturbations consist of both positive NINO3 and positive hE+hW perturbations.

The effect of these optimal perturbations again depends on the initial conditions. When the RC is initialized with positive or slightly positive eastern Pacific SSTA, the combination of positive NINO3 and hE+hW perturbations significantly enhances the evolution of positive anomalies, leading to a stronger El Niño event if the RC is initialized before the peak. If the RC is initialized after the peak, these perturbations extend the duration of El Niño conditions by delaying the decay of warm anomalies.

For negative or slightly negative (neutral) initial conditions, the positive optimal perturbations effectively suppress the further development of negative anomalies, once again accelerating the return to neutral conditions.

In the supercritical regime, thermocline depth perturbations are not purely activated by atmospheric noise but also emerge due to the internal instability of the system. The influence of stochastic atmospheric noise is weaker than in the subcritical case. This means that even if the model does not explicitly account for the effect of wind-stress forcing on the thermocline slope, strong initial thermocline depth anomalies are not needed to maximize error propagation. However, even in the supercritical regime, when τC is not included, the RC remains more sensitive to initial thermocline perturbations at longer lead times than when τC is included, but the difference is much less pronounced than in the subcritical regime. Also the differences in terms of the distribution of the optimal perturbations per initial condition category is less pronounced compared to the subcritical regime and is only evident for the negative and neutral initial conditions (not for the positive ones).

Relatively limited research has been carried out to understand the underlying reasons for the strong performance of ML prediction models in ENSO prediction, in particular their apparent ability to reduce error propagation and overcome the spring predictability barrier (SPB) as deduced from dynamical models. In previous studies, explainable AI techniques like layerwise relevance propagation (LRP) have been used to identify and estimate which patterns in the data are exploited by machine learning (ML) methods to make specific ENSO predictions (Ham et al., 2019; Rivera Tello et al., 2023) or to explore teleconnections of ENSO (Ito et al., 2021; Liu et al., 2023b). The LRP technique has also been extended to the echo state network (ESN) framework to investigate the importance of the leaking rate parameter α and the ESN's robustness to random input perturbations while performing a El Niño/La Niña binary classification task (Landt-Hayen et al., 2022). In a recent study (Qin et al., 2024), an approach similar to ours is followed using the CNOP framework to estimate optimal initial perturbations for a U-net deep learning model trained on both reanalysis data and simulations from various CMIP6 global circulation models (GCMs). They validated their results with the GFDL CM2p1 numerical model, showing that the deep learning models and numerical models exhibit a similar error evolution over time for the same initial conditions and superimposed perturbations. However, their automatic differentiation-based optimization algorithm only applies to deep learning architectures, preventing them from determining whether the deep learning and numerical models show the same optimal initial perturbations and leading to the largest error propagation.

In our study, we address this limitation by employing a gradient-free optimization algorithm (COBYLA), enabling a fair comparison between a reservoir computing (RC) model and the Zebiak–Cane (ZC) numerical model. Our results indicate that the RC model effectively reduces error growth from optimal initial perturbations compared to the ZC model, offering a plausible explanation for its higher predictive skill. It is important to clarify that the main objective of our study is to demonstrate that the RC can mitigate error propagation resulting from initial condition perturbations more effectively than a classical dynamical numerical model. Such an analysis and comparison is impossible using real-world observations as we do not know the evolution operator of the real-world system and hence cannot determine the CNOP. Nevertheless, the CNOP framework can still be applied to an RC model trained on actual observations. This approach can be used to precisely assess the potential of the RC model, as well as other machine learning methodologies, to predict the real ENSO system and to estimate their corresponding predictability limits more accurately.

Furthermore, assessing whether an RC trained with real observations exhibits similar sensitivity to specific variables as an RC trained on synthetic data from the ZC or other dynamical numerical models can provide helpful information to modellers. In particular, identifying differences in the variables to which the skill of the RC model is most sensitive can help determine whether key physical processes are being captured realistically, offering guidance on refining ENSO representation. Applying the CNOP approach to machine learning models trained on real observations could offer further benefits. For instance, while our study uses an RC model with a highly reduced input state vector consisting of just four indices, employing a more complex architecture, such as a convolutional neural network (CNN) capable of analysing two-dimensional input fields, would allow the CNOP framework to identify the regions and variables to which the skills of the model is most sensitive. These insights could inform more precise and targeted data acquisition strategies. While such experiments with real observations are beyond the scope of the present study, they present a promising direction for future research.

In our study, we first demonstrate that the RC, when trained on data from the stochastic ZC model (acting as synthetic observations), exhibits good predictive skill up to an 18-month lead time and hence effectively overcomes the SPB problem in both the subcritical and supercritical regimes. In the supercritical regime, the RC model performs better when zonal surface wind-speed anomalies are included during training, while in the subcritical regime, the RC actually performs better for longer lead times (9 to 18 months) when the zonal surface wind-speed anomalies are excluded. While this result may depend on the implementation of the wind-stress noise (Feng and Dijkstra, 2017), which we restrict here mostly to the eastern Pacific (using only the first EOF of the residual wind-stress field), the reason is that the RC is overfitting the noise in the subcritical regime.

Previous studies have also noticed strong predictive performances when applying the RC to ENSO forecasting. For instance, Hassanibesheli et al. (2022) achieved high prediction skills (ACC>0.8) up to a lead time of 14 months when training the RC with the observed NINO3 and NINO3.4 indexes, decomposed into a low-frequency and high-frequency components. Their performance is comparable to ours at long lead times, but our model performs better at shorter lead times (3–6 months). Additionally, like in our study, they found that their approach could mitigate the SPB problem. However, care must be taken when comparing our findings with their results due to substantial differences in the data used for training, the training variables considered, and the implementation of the forecasting framework.

After the RC performance analysis, we investigated the propagation of errors in initial conditions in boreal spring (just before the SPB) for both the RC and deterministic ZC models using the conditional nonlinear optimal perturbation (CNOP) approach (Duan et al., 2013). In the supercritical regime, the RC can significantly reduce error propagation in particular at longer lead times (6–9 months). In the subcritical regime, the RC is less susceptible to perturbations compared to the ZC model when surface wind-speed anomalies are excluded during training and more susceptible when they are included. The reduced sensitivity of AI models to small initial perturbations has also been found in Selz and Craig (2023). In that study, the inability of the AI frameworks to reproduce the butterfly effect of the atmosphere was considered a limitation as it prevents the generation of large ensembles due to inadequate error growth properties. However, as noted in Selz and Craig (2023), this limitation can be mitigated by training multiple models with different random seeds to generate a confidence interval. We argue that this reduced sensitivity to initial perturbations is not a disadvantage but is what enables AI models to extend the predictability horizon of a system, allowing them to maintain higher predictive skills at longer lead times. The actual CNOPs have quite a different pattern for the ZC and RC cases; the CNOP pattern of the ZC resembles the one obtained in earlier papers (Duan et al., 2013) with a dominant response in the thermocline field for longer lead times, but in the CNOP pattern of the RC, a strong sea surface temperature component is also present.

The thermocline anomalies are important for error propagation at the longer timescales, in particular in the ZC model, in which the ENSO variability is highly affect by the thermocline feedback (Zebiak and Cane, 1987). Hence, effectively, the RC model reduces the components in the thermocline anomalies and hence reduces error propagation. While we restricted to only particular cases, as we only used one value of parameter in the constraint condition δ and we allowed only one EOF in the ZC wind-stress noise, we think that the modification of the dynamical behaviour in the RC (with respect to the ZC) to change the spatio-temporal properties of the error propagation is the key explanation for the superior skill of the RC on long lead times and the reason for us being able to overcome the SPB. As a final remark, we specify that this mechanism is proposed to explain the RC's superior performance within a relatively short-term prediction horizon as opposed to the decadal timescales required to assess the long-term dynamics and statistics of ENSO. Developing an emulator that captures the long-term dynamics and statistics of a system is an entirely different task, necessitating distinct model architectures, hyperparameter configurations, and evaluation criteria compared to those adopted in our study, such as assessing how effectively a model captures the intrinsic nonlinearities of the system. In recent years, various machine learning models have demonstrated impressive predictive skills at up to a 21-month lead time without necessarily capturing all the underlying physical processes of ENSO. The results shown here for the reservoir computer can be extended to other machine learning models, potentially explaining their predictive skills at up to a nearly 2-year lead time, far beyond the SPB.

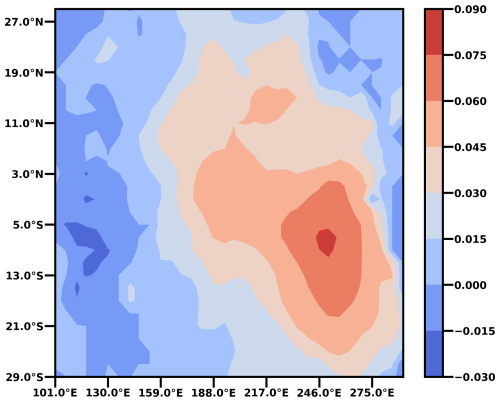

Figure A1First EOF of the residual zonal wind-stress anomalies as determined from the ORAS5 dataset (Copernicus Climate Change Service, 2021).

Figure A3Frequency of the occurrence of La Niña and El Niño events for each calendar month. (a) rd=0.77 (b) rd=0.9. For both rd values, a stochastic ZC model realization of 1000 years has been considered.

The Constrained Optimization BY Linear Approximations (COBYLA) algorithm (Powell, 1994) is a gradient-free optimization method designed to solve nonlinear constrained optimization problems. Given an objective function J(x) to be minimized, where x∈ℝn, and a set of nonlinear constraints Ci(x)≤0, the algorithm starts from an initial guess x0 and constructs an initial n-dimensional simplex represented by a set of n+1 vertices, . Each vertex is defined as , where ei is the ith coordinate vector (a standard basis vector in ℝn) and ρbeg is a specified initial trust-region radius that determines the initial simplex size.

At each iteration, the algorithm constructs a linear approximation of both the objective function and the constraints using a linear interpolation at the n+1 current simplex vertices V(i). It then identifies the worst-performing vertex, denoted as . Once the worst-performing vertex is found, the algorithm formulates and solves a linear optimization problem within a trust region of radius ρ around to generate a new candidate vertex . If this new vertex improves the objective function, it replaces , updating the simplex structure. Otherwise, the trust-region radius is reduced, and the linear optimization problem is solved again.

The algorithm continues iterating until one of the stopping criteria is met. It terminates when the trust-region radius ρ falls below a predefined threshold ρend, when the change in the objective function is smaller than a specified tolerance ε, or when the number of iterations reaches the maximum allowed value Nmax. COBYLA is particularly well suited for nonlinear optimization problems with a small number of variables, especially when computing derivatives is challenging or infeasible. These characteristics make COBYLA an ideal choice for our analysis as the ZC model's derivatives are impossible to compute and our optimization problem involves only three variables (see Sects. 2.3 and 3.3).

To validate the estimated CNOPs obtained using COBYLA for both the RC and ZC models, we first confirm that the CNOPs lie on the boundary of the sphere defined by the constraints. Next, we evaluate error propagation by applying multiple randomly chosen initial perturbations sampled from this boundary to determine whether the CNOPs indeed correspond to the largest error growth. Figures B1 and B2 present these validation results. Specifically, Fig. B1 shows a scatter plot of all estimated CNOPs for both models in the three-dimensional space defined by the normalized NINO3, hE, and hW optimal initial perturbations. In contrast, Fig. B2 illustrates, for a representative case, the divergence between perturbed and unperturbed trajectories resulting from both the CNOP and 50 random initial perturbations sampled along the constraint boundary. For illustration, results are provided for five different years, with both the RC and ZC models always initialized in April.

For our implementation, we adopted the COBYLA solver from the SciPy Python library (Virtanen et al., 2020). Since COBYLA is inherently designed to solve minimization problems, while our objective is to maximize the distance between the reference and perturbed trajectories (see Sects. 2.3 and 3.3), we account for this by minimizing −J(x) instead of J(x).

Figure B1Scatter plot of all computed CNOPs in the normalized NINO3 index vs. hE and hW anomaly plane for (a) the ZC model and (b) the RC model for both τC included and excluded during training. The NINO3 index is normalized by 2 °C, while the hE and hW anomalies are normalized by 50 m.

Figure B2Scatter plot of the RMS differences between perturbed and unperturbed trajectories for three model years with both the RC and ZC models always initialized in April. Results are presented for the CNOP (i.e. the optimal initial perturbation) and for 50 random initial perturbations sampled from the boundary of the constraints.

Figure D1Distribution of the normalized RSE distances between perturbed and unperturbed trajectories for different lead times with the application of CNOPs, using initial conditions from (a, b)December, January, and February and (c, d) September, October, and November. The left plots in (a) and (c) display results for rd=0.77, while the right plots in (b) and (d) show results for rd=0.9. The boxes indicate the interquartile range (IQR), the range within which the central 50 % of data points lie. The whiskers extend to the minimum and maximum values within 1.5 times the IQR from the first and third quartile. The central line corresponds to the median. The RSE distances are normalized by the standard deviation of the NINO3 index extracted from the 50 years of synthetic observations considered for CNOP computation (0.29 for the subcritical regime and 0.56 for the supercritical regime).

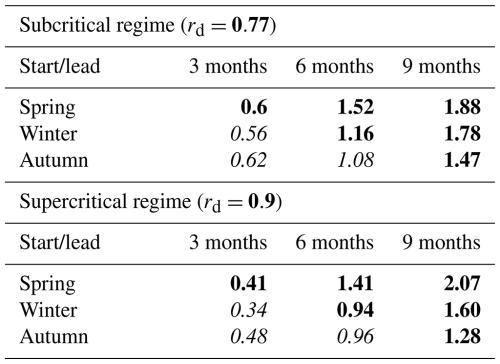

Table D1Table showing the median of the normalized RSE distances between perturbed and unperturbed trajectories at various lead times with CNOPs applied across different starting seasons. Only the Zebiak–Cane model is considered, with the top table representing the subcritical regime (rd=0.77) and the bottom table representing the supercritical regime (rd=0.9). All RSE distances are normalized by the standard deviation of the NINO3 index from the 50 years of synthetic observations considered for the CNOP computation (0.29 for the subcritical regime and 0.56 for the supercritical regime).

Bold: forecast crosses the SPB. Italic: forecast does not cross the SPB.

Table D2Median (IQR) of the normalized RSE distances between perturbed and unperturbed trajectories at various lead times with CNOPs applied across different starting seasons. Both ZC and RC models (trained with and without τC) are considered in the subcritical (rd=0.77) and supercritical (rd=0.9) regime. RSE distances are normalized by the standard deviation of NINO3 index from the 50 years of synthetic observations considered for CNOP computation (0.29 for the subcritical regime and 0.56 for the supercritical regime). The interquartile range (IQR) is defined as the distance between the first and third quartile.

Bold: forecast crosses the SPB. Italic: forecast does not cross the SPB.

Figure D2Distribution of the ZC model's CNOPs for positive, negative, and neutral eastern Pacific SSTA initial conditions. Panels (a) and (b) (top row) show the CNOPs obtained for the NINO3 index at a 3-month lead time comparing subcritical and supercritical regimes, while panels (c) and (d) (bottom row) show the CNOPs obtained for hE+hW, the sum of the thermocline anomalies in the eastern and western Pacific at a lead time of 9 months. In the supercritical regime, positive, negative, and neutral eastern Pacific SSTA initial conditions are defined as NINO3≥0.2, , and , respectively. In the subcritical regime, positive, negative, and neutral SSTA initial conditions are defined as NINO3≥0.1, , and , respectively. In every case, the months of March, April, and May (spring season) from the last 50 years of the synthetic data used for testing (see Sect. 3.1) are taken as initial conditions, yielding a total of 150 initial conditions considered.

Figure D3Distribution of the RC model's CNOPs for positive, negative, and neutral eastern Pacific SSTA initial conditions. Panels (a) and (b) (top row) display the CNOPs for the NINO3 index at a 9-month lead time under the subcritical regime with and without τC included during training. Panels (c) and (d) (bottom row) show the CNOPs for the supercritical regime, also at a 9-month lead time, with τC either included or excluded. In the supercritical regime, positive, negative, and neutral initial conditions are defined as NINO3≥0.2, , and , respectively. In the subcritical regime, positive, negative, and neutral SSTA initial conditions are defined as NINO3≥0.1, , and , respectively. In every case, the months of March, April, and May (spring season) from the last 50 years of the synthetic data used for testing (see Sect. 3.1) are taken as initial conditions, yielding a total of 150 initial conditions considered.

All data and code used in this study are available at this link https://doi.org/10.5281/zenodo.15006826 (Guardamagna et al., 2025).

All authors contributed to the design of this study. FG carried out all the computations and produced a first draft the paper. All authors contributed to the interpretation of the results and the final version of the paper.

The contact author has declared that none of the authors has any competing interests.

Publisher's note: Copernicus Publications remains neutral with regard to jurisdictional claims made in the text, published maps, institutional affiliations, or any other geographical representation in this paper. While Copernicus Publications makes every effort to include appropriate place names, the final responsibility lies with the authors.

The work of Francesco Guardamagna, Claudia Wieners and Henk Dijkstra was supported by the Netherlands Organization for Scientific Research (NWO) under grant no. OCENW.M20.277.

This research has been supported by the Nederlandse Organisatie voor Wetenschappelijk Onderzoek (grant no. OCENW.M20.277).

This paper was edited by Wansuo Duan and reviewed by three anonymous referees.

Barnston, A. G., Tippett, M. K., L'Heureux, M. L., Li, S., and DeWitt, D. G.: Skill of Real-Time Seasonal ENSO Model Predictions during 2002–11: Is Our Capability Increasing?, B. Am. Meteorol. Soc., 93, 631–651, 2012. a

Battisti, D. S. and Hirst, A. C.: Interannual Variability in a Tropical Atmosphere-Ocean Model: Influence of the Basic State, Ocean Geometry and Nonlinearity, J. Atmos. Sci., 46, 1687–1712, https://doi.org/10.1175/1520-0469(1989)046<1687:IVIATA>2.0.CO;2, 1989. a

Bracco, A., Brajard, J., Dijkstra, H. A., Hassanzadeh, P., Lessig, C., and Monteleoni, C.: Machine Learning for the Physics of Climate, Nature Reviews Physics, 7, 6–20, https://doi.org/10.1038/s42254-024-00776-3, 2024. a

Copernicus Climate Change Service: ORAS5 global ocean reanalysis monthly data from 1958 to present, 2021. a, b

Duan, W. and Wei, C.: The “spring predictability barrier” for ENSO predictions and its possible mechanism: Results from a fully coupled model, Int. J. Climatol., 33, 1280–1292, https://doi.org/10.1002/joc.3513, 2013. a

Duan, W., Yu, Y., Xu, H., and Zhao, P.: Behaviors of nonlinearities modulating the El Niño events induced by optimal precursory disturbances, Clim. Dynam., 40, 1399–1413, 2013. a, b, c, d, e, f

Feng, Q. Y. and Dijkstra, H. A.: Climate network stability measures of El Niño variability, Chaos, 27, 035801–15, https://doi.org/10.1063/1.4971784, 2017. a, b

Guardamagna, F., Wieners, C., Fang, X., and Dijkstra, H. A.: Detection of limit cycle signatures of El Niño in models and observations using reservoir computing, Journal of Physics: Complexity, 5, 015016, https://doi.org/10.1088/2632-072X/ad2699, 2024. a, b

Guardamagna, F., Zebiak, S. E., and Cane, M. A.: Supporting Code and Data for the Study: “Explaining the High Skill of Reservoir Computing in El Niño Forecasting”, Zenodo [code, data set], https://doi.org/10.5281/zenodo.15006826, 2025. a

Ham, Y.-G., Kim, J.-H., and Luo, J.-J.: Deep learning for multi-year ENSO forecasts, Nature, 573, 568–572, https://doi.org/10.1038/s41586-019-1559-7, 2019. a, b

Hassanibesheli, F., Kurths, J., and Boers, N.: Long-term ENSO prediction with echo-state networks, Environmental Research: Climate, 1, 011002, https://doi.org/10.1088/2752-5295/ac7f4c, 2022. a, b

Hu, J., Weng, B., Huang, T., Gao, J., Ye, F., and You, L.: Deep Residual Convolutional Neural Network Combining Dropout and Transfer Learning for ENSO Forecasting, Geophys. Res. Lett., 48, e2021GL093531, https://doi.org/10.1029/2021GL093531, 2021. a

Ito, M., Karamperidou, C., Sadowski, P., Camargo, S., Lee, C.-Y., and Patricola, C.: Explainable Artificial Intelligence for Insights into the Relationship between ENSO and Tropical Cyclone Genesis, in: AGU Fall Meeting Abstracts, vol. 2021, GC42A–07, 13–17 December 2021, New Orleans, 2021AGUFMGC42A..07I, 2021. a

Jin, F.-F.: An Equatorial Ocean Recharge Paradigm for ENSO. Part I: Conceptual Model, J. Atmos. Sci., 54, 811–829, https://doi.org/10.1175/1520-0469(1997)054<0811:AEORPF>2.0.CO;2, 1997. a

Jin, Y. and Liu, Z.: A Theory of the Spring Persistence Barrier on ENSO. Part I: The Role of ENSO Period, J. Climate, 34, 2145–2155, https://doi.org/10.1175/JCLI-D-20-0540.1, 2021a. a, b

Jin, Y. and Liu, Z.: A theory of Spring Persistence Barrier on ENSO. Part II: Persistence Barriers in SST and ocean heat content, J. Climate, 34, 5555–5564, https://doi.org/10.1175/JCLI-D-20-0820.1, 2021b. a

Jin, Y., Liu, Z., and McPhaden, M. J.: A Theory of the Spring Persistence Barrier on ENSO. Part III: The Role of Tropical Pacific Ocean Heat Content, J. Climate, 34, 8567–8577, https://doi.org/10.1175/JCLI-D-21-0070.1, 2021. a

Jonnalagadda, J. and Hashemi, M.: Long Lead ENSO Forecast Using an Adaptive Graph Convolutional Recurrent Neural Network, Engineering Proceedings, 39, 5, https://doi.org/10.3390/engproc2023039005, 2023. a, b

Kessler, W. S.: Is ENSO a cycle or a series of events?, Geophys. Res. Lett., 29, 40-1–40-4, https://doi.org/10.1029/2002GL015924, 2002. a

Landt-Hayen, M., Kröger, P., Claus, M., and Rath, W.: Layer-wise Relevance Propagation for Echo State Networks applied to Earth System Variability, arXiv [preprint], https://doi.org/10.48550/arXiv.2210.09958, 2022. a

Lau, K.-M. and Yang, S.: The Asian monsoon and predictability of the tropical ocean–atmosphere system, Q. J. Roy. Meteor. Soc., 122, 945–957, https://doi.org/10.1002/qj.49712253208, 1996. a

Liu, Y., Cai, W., Lin, X., Li, Z., and Zhang, Y.: Nonlinear El Niño impacts on the global economy under climate change, Nat. Commun., 14, 5887, 2023a. a

Liu, Y., Duffy, K., Dy, J. G., and Ganguly, A. R.: Explainable deep learning for insights in El Niño and river flows, Nat. Commun., 14, 339, https://doi.org/10.1038/s41467-023-35968-5, 2023b. a

Lukoševičius, M.: A Practical Guide to Applying Echo State Networks, Springer Berlin Heidelberg, Berlin, Heidelberg, 659–686, https://doi.org/10.1007/978-3-642-35289-8_36, 2012. a

Mahesh, A., cody Evans, M., Jain, G., Castillo, M., Lima, A. R., Lunghino, B. D., Gupta, H., Gaitan, C. F., Hunt, J., Tavasoli, O., and Brown, P. T.: Forecasting El Niño with Convolutional and Recurrent Neural Networks, https://api.semanticscholar.org/CorpusID:209371718 (last access: 27 June 2025), 2019. a

McPhaden, M. J., Zebiak, S. E., and Glantz, M. H.: ENSO as an Integrating Concept in Earth Science, Science, 314, 1740–1745, https://doi.org/10.1126/science.1132588, 2006. a

Mu, M., Xu, H., and Duan, W.: A kind of initial errors related to “spring predictability barrier” for El Niño events in Zebiak-Cane model, Geophys. Res. Lett., 34, L03709, https://doi.org/10.1029/2006GL027412, 2007. a, b

Neelin, J., Battisti, D. S., Hirst, A. C., Jin, F.-F., Wakata, Y., Yamagata, T., and Zebiak, S. E.: ENSO Theory, J. Geophys. Res., 103, 14261–14290, 1998. a

Pathak, J., Wikner, A., Fussell, R., Chandra, S., Hunt, B. R., Girvan, M., and Ott, E.: Hybrid forecasting of chaotic processes: Using machine learning in conjunction with a knowledge-based model, Chaos, 28, 041101, https://doi.org/10.1063/1.5028373, 2018. a, b

Planton, Y. Y., Guilyardi, E., Wittenberg, A. T., Lee, J., Gleckler, P. J., Bayr, T., McGregor, S., McPhaden, M. J., Power, S., Roehrig, R., Vialard, J., and Voldoire, A.: Evaluating Climate Models with the CLIVAR 2020 ENSO Metrics Package, B. Am. Meteorol. Soc., 102, E193–E217, https://doi.org/10.1175/BAMS-D-19-0337.1, 2021. a

Powell, M. J. D.: A Direct Search Optimization Method That Models the Objective and Constraint Functions by Linear Interpolation, Springer Netherlands, Dordrecht, 51–67, https://doi.org/10.1007/978-94-015-8330-5_4, 1994. a, b

Qin, B., Yang, Z., Mu, M., Wei, Y., Cui, Y., Fang, X., Dai, G., and Yuan, S.: The first kind of predictability problem of El Niño predictions in a multivariate coupled data-driven model, Q. J. Roy. Meteor. Soc., 150, 5452–5471, https://doi.org/10.1002/qj.4882, 2024. a

Rivera Tello, G. A., Takahashi, K., and Karamperidou, C.: Explained predictions of strong eastern Pacific El Niño events using deep learning, Sci. Rep.-UK, 13, 21150, https://doi.org/10.1038/s41598-023-45739-3, 2023. a

Roulston, M. S. and Neelin, J. D.: The response of an ENSO Model to climate noise, weather noise and intraseasonal forcing, Geophys. Res. Lett., 27, 3723–3726, https://doi.org/10.1029/2000GL011941, 2000. a

Selz, T. and Craig, G. C.: Can Artificial Intelligence-Based Weather Prediction Models Simulate the Butterfly Effect?, Geophys. Res. Lett., 50, e2023GL105747, https://doi.org/10.1029/2023GL105747, 2023. a, b

Suarez, M. J. and Schopf, P. S.: A Delayed Action Oscillator for ENSO., J. Atmos. Sci., 45, 3283–3287, https://doi.org/10.1175/1520-0469(1988)045<3283:ADAOFE>2.0.CO;2, 1988. a

Takahashi, K., Karamperidou, C., and Dewitte, B.: A theoretical model of strong and moderate El Niño regimes, Clim. Dynam., 52, 7477–7493, 2019. a

Timmermann, A., Jin, F.-F., and Abshagen, J.: A Nonlinear Theory for El Niño Bursting, J. Atmos. Sci., 60, 152–165, https://doi.org/10.1175/1520-0469(2003)060<0152:ANTFEN>2.0.CO;2, 2003. a

Tziperman, E., Stone, L., Cane, M. A., and Jarosh, H.: El Niño chaos: overlapping of resonances between the seasonal cycle and the Pacific ocean-atmosphere oscillator, Science, 264, 72–74, 1994. a

Verstraeten, D., Schrauwen, B., D'Haene, M., and Stroobandt, D.: An experimental unification of reservoir computing methods, Neural Networks, 20, 391–403, https://doi.org/10.1016/j.neunet.2007.04.003, 2007. a