the Creative Commons Attribution 4.0 License.

the Creative Commons Attribution 4.0 License.

Identification of linear response functions from arbitrary perturbation experiments in the presence of noise – Part 1: Method development and toy model demonstration

Guilherme L. Torres Mendonça

Julia Pongratz

Christian H. Reick

Existent methods to identify linear response functions from data require tailored perturbation experiments, e.g., impulse or step experiments, and if the system is noisy, these experiments need to be repeated several times to obtain good statistics. In contrast, for the method developed here, data from only a single perturbation experiment at arbitrary perturbation are sufficient if in addition data from an unperturbed (control) experiment are available. To identify the linear response function for this ill-posed problem, we invoke regularization theory. The main novelty of our method lies in the determination of the level of background noise needed for a proper estimation of the regularization parameter: this is achieved by comparing the frequency spectrum of the perturbation experiment with that of the additional control experiment. The resulting noise-level estimate can be further improved for linear response functions known to be monotonic. The robustness of our method and its advantages are investigated by means of a toy model. We discuss in detail the dependence of the identified response function on the quality of the data (signal-to-noise ratio) and on possible nonlinear contributions to the response. The method development presented here prepares in particular for the identification of carbon cycle response functions in Part 2 of this study (Torres Mendonça et al., 2021a). However, the core of our method, namely our new approach to obtaining the noise level for a proper estimation of the regularization parameter, may find applications in also solving other types of linear ill-posed problems.

- Article

(5349 KB) - Full-text XML

- Companion paper

- BibTeX

- EndNote

To gain understanding of a physical system, it is very helpful to know how it responds to perturbations. Considering a small time-dependent perturbation , the resulting time-dependent response can from a very general point of view be written as

where the linear response function is a characteristic of the considered system. In fact, under a number of assumptions – among which smoothness and causality are the most important – Eq. (1) is the first term of a functional expansion of the response R into the perturbation f around the unperturbed state , known as Volterra series (Volterra, 1959; Schetzen, 2010). In this framing, the key to gaining insight into the system is the linear response function χ: by knowing this function one has at hand not only a powerful tool to predict the response for sufficiently small but otherwise arbitrary perturbations, but also a means to study the internal dynamic modes of the unperturbed system by analyzing the temporal structure of the response function.

Linear response functions have been successfully applied within different contexts in many fields of science and technology. In physics, for example, material constants like the magnetic susceptibility or the dielectric function must be understood as linear response functions that can be obtained by Kubo's theory of linear response (Kubo, 1957) via the fluctuation–dissipation theorem from an auto-correlation of the unperturbed system. However, applications of these functions range far beyond physics into fields like neurophysiology and climate (Gottwald, 2020). In climate science, in particular, applications of linear response functions in the context of Ruelle's developments in response theory (see below) are a recent topic (e.g., Lucarini, 2009; Lucarini and Sarno, 2011; Lucarini et al., 2014; Ragone et al., 2016; Lucarini et al., 2017; Aengenheyster et al., 2018; Ghil and Lucarini, 2020; Lembo et al., 2020; Bódai et al., 2020). On the other hand, these functions have already been successfully employed as a heuristic tool to study climate and the carbon cycle for decades (e.g., Siegenthaler and Oeschger, 1978; Emanuel et al., 1981; Maier-Reimer and Hasselmann, 1987; Enting, 1990; Joos et al., 1996; Joos and Bruno, 1996; Thompson and Randerson, 1999; Pongratz et al., 2011; Caldeira and Myhrvold, 2013; Joos et al., 2013; Ricke and Caldeira, 2014; Gasser et al., 2017; Enting and Clisby, 2019). Yet another perspective is that from engineering sciences, in which the impulse response – that to a large extent corresponds to the linear response function – and the closely related transfer function (or system function) characterize linear time-invariant (LTI) systems, widely applied in fields such as signal processing and control theory (Kuo, 1966; Rugh, 1981; Beerends et al., 2003; Boulet and Chartrand, 2006). Regardless of which viewpoint a particular community takes to investigate the linear response of a system, a fundamental step in this investigation is the identification of the appropriate linear response function, the topic of the present study.

From a theoretical point of view, the existence of a linear response is by no means obvious: structurally stable dynamical systems are the exception (Abraham and Marsden, 1982), so that already small parameter changes typically lead to topological changes in their sets of stable and unstable solutions. Not every such bifurcation must prevent a linear response in macroscopic observables, but the question remains how in view of microscopic structural instability macroscopic linearity can prevail. A key result in this field is Ruelle's rigorous demonstration of the existence of a linear response for the structurally stable class of uniform hyperbolic systems (Ruelle, 1997, 1998). It is believed that this result transfers to large classes of nonequilibrium systems (Ruelle, 1999; Lucarini, 2008; Lucarini and Sarno, 2011; Gallavotti, 2014; Ragone et al., 2016; Lucarini et al., 2017). An example may be the Lorenz system at standard parameters, for which numerical analysis revealed evidence for a linear response despite non-hyperbolicity (Reick, 2002; Lucarini, 2009). Recent investigations by Wormell and Gottwald (2018, 2019) indicate that the thermodynamic limit must be invoked to reconcile microscopic structural instability with macroscopic differentiability. Results on the existence/absence of a linear response have been particularly obtained for iterative maps (Großmann, 1984; Baladi, 2018; Śliwiak et al., 2021), which are known for their notoriously rich bifurcation structure. Well studied is also the linear response of stochastic systems (Hänggi and Thomas, 1982; Risken, 1996) for whose quasistatic response rigorous mathematical results also exist (Hairer and Majda, 2010).

In practical applications where the response function must be recovered from data, its identification may be a challenging task. The reason is that the identification problem is generally ill-posed, so that by classical numerical methods one obtains a recovery severely deteriorated by noise (see below). In addition, existent methods to identify these functions from data require one to perform special perturbation experiments. In the present study, we develop a method to identify linear response functions, taking data from any type of perturbation experiment while fully accounting for the ill-posedness of the problem.

The generality of our method allows for derivation of response functions in cases hardly possible before. Examples are problems where performing perturbation experiments is computationally expensive, so that one must use data that were not designed for the purpose of deriving these functions. In the geosciences, this may be the case when one is interested in characterizing by response functions the dynamics of Earth system models – extremely complex systems employed to simulate climate and its coupling to the carbon cycle. In principle, with our method one can derive these functions, taking simulation data from Earth system model intercomparison exercises such as C4MIP – the Coupled Climate-Carbon Cycle Model Intercomparison Project (Taylor et al., 2012; Eyring et al., 2016) – that are already available in international databases. In Part 2 of this study we explore this possibility by investigating in an Earth system model the response of the land carbon cycle to atmospheric CO2 perturbations. Because of the relationship between the linear response function and the impulse response and the transfer function in LTI systems, our work can also be seen from the viewpoint of the engineering sciences as a contribution to the corpus of methods to solve system identification problems (Åström and Eykhoff, 1971; Söderström and Stoica, 1989; Isermann and Münchhof, 2010; Pillonetto et al., 2014).

In the field of climate science, the typical method to identify linear response functions is by means of the impulse response function, which is the response to a Dirac delta-type perturbation (e.g., Siegenthaler and Oeschger, 1978; Maier-Reimer and Hasselmann, 1987; Joos et al., 1996; Thompson and Randerson, 1999; Joos et al., 2013). This method has become so widely known that often the terms linear response function and impulse response function are used interchangeably. Indeed, in the particular case where perturbations are weak, the two concepts coincide. However, this is not true in general: if the impulse strength is large so that nonlinearities become important, the impulse response function differs from the linear response function.

Other studies have proposed to identify linear response functions by making use of other types of perturbations. Reick (2002) and Lucarini (2009) used a weak periodic forcing to derive response functions in the Fourier space (also called susceptibilities). Hasselmann et al. (1993), Ragone et al. (2016), MacMartin and Kravitz (2016), Lucarini et al. (2017), Van Zalinge et al. (2017), Aengenheyster et al. (2018), and Bódai et al. (2020) identify the linear response function using step experiments, where the perturbation is a Heaviside-type function. Additional studies have proposed to compute the linear response of the system using the invariant measure of the unperturbed system (Gottwald et al., 2016) and by means of shadowing methods (Reick, 1996; Ni and Wang, 2017; Ni, 2020).

As noted by Lucarini et al. (2014), in principle the linear response function of a system can be derived by taking data from an arbitrary type of perturbation experiment. One method would be to apply a Laplace transform to Eq. (1), so that χ(t) can in principle be computed by the inverse Laplace transform

where ℒ{⋅} is the Laplace transform operator. In fact, a first step towards the derivation of χ(t) from the general Eq. (1) was taken by Pongratz et al. (2011), although the problem was not systematically discussed.

Deriving χ(t) from perturbation experiment data is not a trivial problem. For the general case where the perturbation is different from a Dirac delta-type function, the problem is ill-posed (e.g., Bertero, 1989; Landl et al., 1991; Lamm, 1996; Engl et al., 1996). This basically means that attempts to recover the exact χ(t) yield a solution with large errors due to an amplification of the noise in the data. On the other hand, when f(t) is a Dirac delta-type function with sufficiently small perturbation strength, so that the response can be considered linear, the impulse response gives directly the linear response function, i.e., χ(t)=R(t). However, even in this case noise may hinder the recovery: because the perturbation is only one “pulse” with small perturbation strength, the response may have a too low signal-to-noise ratio because of internal variability (Joos et al., 2013), giving once more a recovery with large errors.

To remedy these noise problems, a method intended to “damp” the noise in the response is usually employed. In MacMartin and Kravitz (2016), a step experiment with large perturbation strength is used to obtain a better signal-to-noise ratio in the response but at the cost of enhancing the effect of nonlinearities. An alternative approach is employed by Ragone et al. (2016) and Lucarini et al. (2017), who employ an ensemble of simulation experiments and take the ensemble-averaged response so that the level of noise is reduced. However, especially for complex models such as Earth system models, ensembles of simulations can be computationally extremely expensive, so that such a procedure may not be feasible.

Instead of trying to improve the signal-to-noise ratio of the data by improved experiment design, here we are interested in deriving χ(t) from a single realization of a given experiment by accounting for the ill-posedness of the problem. For this purpose, we employ regularization theory. Although this theory offers a variety of methods to solve ill-posed problems (see, e.g., Groetsch, 1984; Bertero, 1989; Bertero et al., 1995; Engl et al., 1996; Hansen, 2010), currently no general all-purpose method exists. Typically, methods rely on some type of prior information about the problem (Istratov and Vyvenko, 1999). Hence, they must be tailored according to the particularities of each application. Here, we develop a method that under certain assumptions solves the ill-posed problem when, in addition to the data from a single arbitrary perturbation experiment, data from an associated unperturbed – or control – experiment are also given to obtain independent information about the noise level (Sect. 3). First, we assume that the response function is well approximated by a sum of decaying exponentials, meaning that potential oscillatory contributions to the response function are so small that they can be considered to be part of the noise. The response function is obtained by applying Tikhonov–Phillips regularization. The regularization parameter is chosen via the discrepancy method. An essential ingredient of the discrepancy method is the noise level, which is usually not known a priori. For this reason, we propose a method to estimate the noise level by taking advantage of the information given by a spectral analysis of the perturbation experiment and the control experiment. If the desired response function is known to be monotonic, the noise estimate can be further adjusted. In Sect. 4, the method is demonstrated to give reliable results under appropriate conditions of noise and nonlinearity. In Sect. 5, we compare the derived method with two existent methods in the literature to identify the response function in the time domain. Results and technical details are discussed in Sect. 6. Additional calculations are shifted to the Appendices.

As a preparation for introducing our method in Sect. 3, in the present section we derive its basic ansatz, which takes into account, in addition to the response formula (Eq. 1), also the noise in the data. Depending on the application context, the noise may arise for different reasons, such as errors in the measurements or stochastic components in the system. As will be seen, our basic ansatz is in principle applicable to all those cases. However, to make the connection to modern applications of linear response functions that arise in the context of Ruelle's developments (e.g., climate), here we derive this ansatz starting from considerations of linear response theory (Ruelle, 2009). Ruelle considered systems of type

where x(t) is the possibly infinite dimensional state vector and the perturbation f(t) couples to the unperturbed system via the field A1(x). In the present context Eq. (3) could, e.g., represent the dynamics of the Earth system perturbed by anthropogenic emissions f(t). Considering an observable Y(x), Ruelle proved that the ensemble average of its deviation from the unperturbed system 〈ΔY〉 can be expanded in the perturbation f(t):

where the order symbol 𝒪(f2) represents terms that vanish in the limit more quickly than the leading linear term. This expansion describes the response of a system that is noisy as a result of its chaotic evolution: starting from different initial states, one obtains different values for ΔY(x(t)). Compared to Eq. (1), in Eq. (4) the linear response function does not describe the response in observables directly, but only in their ensemble average, i.e., in an average over the initial states of the unperturbed system. For the recovery of linear response functions from numerical experiments, this would mean that one had to perform many experiments starting from different initial states to obtain the appropriate ensemble averages. Using tailored perturbation experiments, it was demonstrated in several studies (e.g., Ragone et al., 2016; Lucarini et al., 2017; Bódai et al., 2020) that linear response functions can indeed be obtained in this way but at the expense of a large numerical burden from the need to perform many experiments. Instead, the aim here is to obtain the linear response functions from a given experiment and only from a single realization. Since we are dealing with a single realization, Eq. (4) becomes

where η(t) is a noise term that must show up as a consequence of dropping the ensemble average. Accordingly, the noise η(t) is introduced here as the difference between the noisy response in a single realization ΔY(t) and the response in the ensemble average 〈ΔY(x(t))〉 (compare Eq. 4). In addition, we assume linearity in the perturbation. As a consequence, the present study is based on the ansatz

where now the response ΔY(t) is divided into a deterministic term and a noise term η(t).

The linearity assumption is on purpose: in the present approach to derive the linear response function (see next section), hereafter called the RFI method (response function identification method), we first use Eq. (6) to obtain χ(t) and justify the linearity assumption a posteriori by analyzing how robustly the response can be recovered for different perturbation strengths. Dropping the nonlinear terms has the advantage that one can use the corpus of linear methods to derive χ(t) from Eq. (6). Note that, in practice, however small the perturbation may be, the nonlinear terms do not vanish. Therefore, the contribution of nonlinearities is in this way distributed between χ(t) and η(t), which will be different from the previous χ(t) and η(t) in Eq. (5). How strongly nonlinearities affect the numerical identification of χ(t) depends on the estimation of η(t), which is a crucial part of our RFI method and the main novelty introduced here to deal with the ill-posedness of the problem to identify χ(t).

In addition, although we derived Eq. (6) starting from considerations of linear response theory, it is clear that this ansatz can also be employed in any other context where it may be assumed that the response formula (Eq. 1) applies and that the data are contaminated by additive noise.

In this section we derive the RFI method. As mentioned above, the aim of this method is to obtain the linear response function using data from a single realization of a given perturbation experiment. For this purpose, an essential step is our novel estimation of the noise term η(t), which requires additional data from an unperturbed (control) experiment.

Starting from the ansatz Eq. (6), the method is based on the idea that the noise term η(t) can be estimated using information on the internal variability from the control experiment in combination with a spectral analysis of the perturbation experiment. The identification of the linear response function proceeds as follows: first, we choose a functional form for χ(t). Second, Eq. (6) is discretized for application to the discrete set of time series data, which results in a matrix equation. Then, assuming that the solution obeys the Picard condition (see below), we estimate the high-frequency components of the noise term η(t) in Eq. (6) via a spectral analysis of the matrix equation applied to the data from the perturbation experiment. Next, assuming that the spectral distribution of noise is similar in the control and perturbation experiments, we also estimate the low-frequency components of η(t). The final estimate of η(t) is then used in a regularization procedure to determine the regularization parameter and thereby find an approximate solution for χ(t). In case χ(t) is known to be monotonic, the approximated solution is further adjusted by checking for monotonicity.

This section is organized as follows. In the first subsection, we introduce the assumption for the functional form of the linear response function. In Sect. 3.2, we present the discretized problem. In Sect. 3.3 we briefly review some elements of regularization theory employed in our method, in particular Tikhonov–Phillips regularization (Sect. 3.3.1) and the discrepancy method (Sect. 3.3.2). In Sect. 3.4 we present our noise estimation procedure by which the regularization parameter is determined. Finally, in Sect. 3.5 we show how this procedure can be further improved in the presence of a monotonicity constraint.

3.1 Functional form of the linear response function

In general, the identification of linear response functions from data may be performed either pointwise (e.g., Ragone et al., 2016) or assuming a functional form (e.g., Maier-Reimer and Hasselmann, 1987). Both approaches usually lead to an ill-posed problem and therefore to similar difficulties in finding the solution (see more details in Sect. 3.3.1). Although the RFI method may be applied in either case, here we assume that the response function consists of an overlay of exponential modes. By this ansatz we guarantee from the outset that the response relaxes to zero for t → ∞, which is consistent with the expectation that real systems have finite memory. Besides constraining the function space for the derivation of the response function, another added value of this approach is that in principle it also gives the spectrum of internal timescales of the response.

Assuming this ansatz, the question on the functional form of χ(t) arises. In climate science, it is typically assumed that the response function can be described by only a few exponents (Maier-Reimer and Hasselmann, 1987; Enting and Mansbridge, 1987; Hasselmann et al., 1993, 1997; Grieser and Schönwiese, 2001; Li and Jarvis, 2009; Joos et al., 2013; Colbourn et al., 2015; Lord et al., 2016), i.e.,

where the τi values are interpreted as characteristic timescales and the gi values are their respective weights. τi and gi are then obtained by applying some fitting technique taking a fixed number of terms M. Thus, an important step in this type of approach is to determine a suitable value for M. A common practice is to initially take only a small number of terms M, solve the problem, and then add terms progressively until the addition of a new term does not improve the fit anymore according to some quality-of-fit criterion (e.g., Kumaresan et al., 1984; Maier-Reimer and Hasselmann, 1987; Hasselmann et al., 1993; Pongratz et al., 2011; Colbourn et al., 2015; Lord et al., 2016). Thereby it is assumed that once results stabilize, the information in the data has already been fully exploited, so that fitting of additional terms would be artificial. Nevertheless, finding the parameters τi and gi either from a given χ(t) by Eq. (7) or from ΔY(t) by inserting Eq. (7) into Eq. (1) means solving a special case of a Fredholm equation of the first kind (see Appendix A), which is an ill-posed problem (Groetsch, 1984). This implies that even though the obtained solution may give a very good fit to the data, it may significantly differ from the exact solution (see, e.g., the famous example from Lanczos, 1956, p. 272).

Therefore, to avoid the complication of determining M, we assume instead that the response function is characterized by a continuous spectrum g(τ) (Forney and Rothman, 2012):

Accordingly, we assume that the response is dominated by relaxing exponentials, meaning that potential contributions from oscillatory modes are not distinguishable from noise. By this approach the timescale τ is not an unknown anymore but is given after discretization by a prescribed distribution with M terms covering a wide range of τi values. Thus, only a discrete approximation to the spectrum g(τ) needs to be found. In this way the functional representation is made independently of the question of information content as long as the spectrum of discrete timescales is chosen to be sufficiently large and dense to widely cover the spectrum of internal timescales of the considered system.

This approach has an additional advantage. By prescribing the distribution of timescales, one must not solve a nonlinear ill-posed problem (by solving Eq. 7 for τi and gi) but only a linear ill-posed problem (by solving Eq. 8 only for g(τ)), for which the mathematical theory is fairly well developed (Groetsch, 1984; Engl et al., 1996). Because the problem is linear, the solution is even given analytically (see Sect. 3.3.1), which makes the method very transparent.

3.2 Discretized problem

In view of applications to geophysical systems like the climate or the carbon cycle (Part 2 of this study) that are known to cover a wide range of timescales (Ghil and Lucarini, 2020; Ciais et al., 2013, Box 6.1), it is useful to switch to a logarithmic scale (Forney and Rothman, 2012) by rewriting Eq. (8) in terms of log 10τ:

Hereafter, q(τ) and its discrete version q (see below) will be called the spectrum.

In order to apply the basic Eq. (6) together with Eq. (9) to experiment data, the whole problem needs to be discretized in time and also with respect to the spectrum of timescales. Here we assume the data to be given at equally spaced time steps , , where N is the number of data, while the timescales are assumed to be equally spaced at a logarithmic scale between maximum and minimum values τmax and τmin, i.e.,

where M is the number of timescales. As shown in Appendix B, the resulting discretized equations corresponding to Eqs. (6) and (9) are

and

where ηk stands for the noise. Combining the response data ΔYk, the spectral values qj, and the noise values ηj into vectors ΔY∈ℝN, q∈ℝM, and η∈ℝN, these equations can be written in vector form as

with the components of matrix A given by

Matrix A is known from the prescribed spectrum of timescales τi and the forcings fi. Considering η as a fitting error, in principle one can apply standard linear methods to solve Eq. (13) for the desired spectrum by minimizing

where denotes the Euclidean norm, i.e., . Here we denoted the spectrum as qη instead of q to emphasize that the spectrum found in this way can only be an approximation to the original q depending on the noise present in the data.

Unfortunately, it turns out that solving Eq. (15) is not a trivial task. The first difficulty is that the finite information provided by the data makes the problem underdetermined: ideally one wants to obtain a spectrum q(τ) defined for , but the data ΔY are discrete and cover only a limited time span. However, the most serious issue in identifying χ(t) arises because Eq. (1) is a special case of a Fredholm equation of the first kind (Groetsch, 1984, 2007, see also Appendix A), where the quest for the integral kernel is well known to be an ill-posed problem (see, e.g., Bertero, 1989; Hansen, 1992). This basically means that any solution qη of Eq. (15) obtained via classical numerical methods such as lower-upper (LU) or Cholesky decomposition will be extremely sensitive to even small errors in the data (Hansen, 1992). Therefore, to solve Eq. (15) for the spectrum qη, we invoke regularization.

3.3 Regularization

To treat the ill-posedness of Eq. (15), our RFI method combines techniques from regularization theory with a novel approach to estimate the noise level in the data. To facilitate the understanding of the method, in this section we briefly review these techniques along with some other aspects of the theory that are relevant for our method development.

3.3.1 Regularized solution

To deal with the ill-posedness, it is useful to perform a singular value decomposition (SVD) of the matrix A:

with , , , and . Σ is a diagonal matrix with diagonal entries known as singular values, and

are orthonormal matrices with being the left singular vectors and the right singular vectors of A. In practice, assuming that there are more data than prescribed timescales, i.e., N≥M, the singular values σi computed numerically are nonzero (see Golub and Van Loan, 1996, Sect. 5.5.8). In this case, Eq. (15) has the unique solution (see Golub and Van Loan, 1996, Theorem 5.5.1)

where • denotes the usual scalar product.

In practice, when a SVD is applied to a discrete version of a Fredholm equation of the first kind, the components of the singular vectors vi and ui tend to have more sign changes with increasing index i, as observed by Hansen (1989, 1990). This observation justifies that in the following we dub low-index terms in Eq. (19) low-frequency contributions and high-index terms high-frequency contributions.

It is well known that when applying the solution (Eq. 19), one encounters certain numerical problems. Regularization is a means to handle these problems. These problems arise – even in the absence of noise – as follows. From the Riemann–Lebesgue lemma (see, e.g., Groetsch, 1984) it is known that the high-frequency components of the data ΔY(t) must approach zero. In the discrete case, by Hansen's observation this means that the projections ui•ΔY should approach zero for increasing index values i. However, due to machine precision or the noise η contained in ΔY, numerically the absolute values do not approach zero but settle at a certain non-zero level for large i or, in the presence of noise, may even increase. Due to the ill-posedness, the singular values σi in the denominator of Eq. (19) also tend to zero, so that these high-frequency contributions to qη are strongly amplified. Hence applying Eq. (19) naively would not give a stable solution for qη because its value would depend critically on numerical errors and the noise present in the data.

Regularization remedies this problem by suppressing the problematic high-frequency components. This approach assumes that the main information on the solution is contained in the low-frequency components, so that the high-frequency contributions to the sum (Eq. 19) can be ignored. This assumption is consistent with the very nature of ill-posed problems because in such problems information on high frequencies is anyway suppressed, so that only low-frequency components of the solution are recoverable (Groetsch, 1984, Sect. 1.1).

To perform such filtering, we employ the Tikhonov–Phillips regularization method (Phillips, 1962; Tikhonov, 1963). Besides being mathematically well developed (see, e.g., Groetsch, 1984; Engl et al., 1996), the Tikhonov–Phillips regularization method gives an explicit solution in terms of the SVD expansion, which allows for a clear interpretation of the filtering. In addition, it provides a smooth filtering of the solution, in contrast to the also well-known truncated singular value decomposition method (Hansen, 1987). For additional regularization methods, see, e.g., Bertero (1989), Bertero et al. (1995), and Palm (2010).

The standard Tikhonov–Phillips regularization yields the regularized solution in the simple form (Hansen, 2010; Bertero, 1989)

where the fi(λ) are the filter functions

Therefore, now the problem boils down to determining λ (see next section). Once λ is determined, the solution qλ is obtained by Eq. (20) and the desired linear response function χ(t) finally follows from Eq. (12).

3.3.2 Determining the regularization parameter λ

By construction it is clear that qλ as computed from Eq. (20) strongly depends on the regularization parameter λ. Accordingly, much effort has been put in developing methods to determine suitable values for λ (e.g., Engl et al., 1996; Hansen, 2010). Of special interest are methods that give solutions converging with decreasing noise level to the “true” solution. One such method known to conform to this condition while uniquely determining the regularization parameter has been proposed by Morozov (1966). His discrepancy method is based on the idea that the solution to the problem allows the data to be recovered with an error of the magnitude of the noise (Groetsch, 1984): let δ denote an upper bound of the noise level , i.e., . Then, λ should be chosen such that the discrepancy matches δ, i.e.,

Groetsch (1983) motivates the choice of this method by demonstrating that determining λ from Eq. (22) minimizes a natural choice for an upper bound of the error in the solution given by regularization. Unfortunately, in practical applications the noise level δ is usually not known. To try to solve this problem for the application of interest, in the next section we propose an approach to estimate δ.

3.4 Estimating the noise level δ

To introduce our approach, in the following we assume that data from an unforced experiment (control experiment) are available – as is typically the case in applications to Earth system models (see Part 2) – that allow for an independent estimate of the noise level δ.

A naive way to invoke these data to determine λ would be to take δ essentially as the standard deviation of the control experiment – more precisely: . Technically, to find λ, one way is to start with a large value for λ and decrease it until the left-hand side of Eq. (22) matches δ (as suggested by Hämarik et al., 2011). That this procedure works is explained by the fact that the function is continuous, is increasing, and contains δ in its range (Groetsch, 1984, Theorem 3.3.1). Having found λ in this way, the desired solution qλ is then obtained from Eq. (20). However, this approach is not as straightforward as one may think: because of the forcing, the noise in the perturbed experiment may have different characteristics from that in the control experiment. Therefore in the following we devise a method for how to account for this problem.

Formally in Eq. (13) ΔY is split into a “clean” part and noise η. Entering this into Eq. (19) gives

Accordingly, the first term in the sum gives the “true” solution q, while the second term gives the noise contribution to qη. As already pointed out when discussing regularization, the “true” solution of ill-posed problems can only be recovered if it is dominated by the projection onto the first singular vectors. This requirement is formally stated by the discrete Picard condition (Hansen, 1990), which demands that the size of the projection coefficients drops sufficiently quickly to zero, so that they become smaller than σi before σi levels off to a finite value because of numerical errors. To find a good estimate for the noise level δ, we use this in the following way. Let imax be the value of the index i, where the singular values σi start to level off. Assuming that the Picard condition holds, one can infer that

Therefore,

This equation determines the high-frequency components of the noise η. It remains to determine also the low-frequency components to obtain an estimate for δ.

For this purpose, we take advantage of the data from the control experiment. The control experiment is an experiment performed for the same conditions as the perturbed experiment, with the only difference that the forcing f is zero, so that the resulting ΔYctrl can be understood as pure noise; therefore we write ηctrl:=ΔYctrl. While in the forced experiment the low-frequency noise is obscured by the low-frequency response induced by the forcing, the low-frequency part of the control experiment data can to first order be expected to give an estimate of the low-frequency noise present in the forced experiment. Nevertheless, it is clear that due to the forcing the spectral characteristics of noise may be different in the forced and unforced experiments. More precisely, the spectrum of noise may differ in its overall level and spectral distribution (i.e., the “shape” of the spectrum). In the following, we account for a possible difference in the overall level. However, we will assume that the spectral distribution is approximately the same for ηctrl and η; we call this the spectral similarity assumption.

After these considerations, λ can be determined as follows: take imax as the last index i before the plateau σi≈0. This imax distinguishes high-frequency () from low-frequency () components. Then

are the levels of high-frequency noise in the perturbed (see Eq. 25) and control experiments, respectively. We now scale the spectral components of ηctrl so that its high-frequency level matches the high-frequency level of ΔY:

In this way, the magnitude of the high-frequency components of η′ matches that of ΔY and because of Eq. (25) also that of η. On the other hand, the spectral distribution of η′ is the same as for ηctrl and by the spectral similarity assumption approximately the same as for η. Because η′ and η have similar spectral distributions, the fact that the magnitude of the high-frequency components of η′ matches that of η implies that the magnitude of their low-frequency components also matches. Therefore, η′ can be seen as an estimate of the noise η in the perturbed system not only at high, but also at low frequencies. Hence this corrected noise vector η′ can be used to obtain an estimate of the noise level of the perturbed system by setting

Compared to taking for δ simply the noise level from the unperturbed experiment (as was insinuated above), taking it in this scaled way ensures that the high-frequency components are consistent with the Picard condition that must hold for q to be recoverable from the ill-posed problem tackled here. Having determined δ, λ can now be computed from Eq. (22) as described above, from which the q follows (Eq. 20) and hence χ(t) (Eq. 12).

3.5 Additional noise-level adjustment in the presence of a monotonicity constraint

In the application to the land carbon cycle in Part 2 of this study, we show that certain response functions χ(t) decrease monotonically to zero. In attempts to recover such response functions by employing the noise-level adjustment described in the previous section, it may turn out that the numerically found response function fails to be monotonic. There may be several reasons for this failure (strong nonlinearities, signal too obscured by noise, etc.). However, one additional reason may be that the low-frequency level of the noise was not properly estimated by assuming that the spectral distribution in the unperturbed experiment reflects the distribution in the perturbed experiment. For such cases one may try to improve the result by further adjustment of the low-frequency noise level to obtain a more reasonable result.

The idea is to adjust the low-frequency components of noise independently of the high-frequency components iteratively until the solution obeys the monotonicity constraint. To understand how to do so, several things must be explained.

-

A sufficient condition for χ(t) being monotonic is that all components qi have the same sign (see Appendix C). Therefore, starting out from a numerical solution for χ(t), it would develop towards monotonicity if one could come up with a sequence of vectors qλ with fewer and fewer sign changes.

-

From Eq. (20) it is seen that because of Hansen's observation explained in Sect. 3.3.1, that singular vectors vi are less noisy for lower i, qλ has fewer sign changes the fewer vi contribute to the sum.

-

As seen from Eqs. (20) and (21), this is the case the more components the filter function is suppressing, i.e., the larger the value of λ.

-

To obtain larger values of λ, one sees from the discrepancy method (Eq. 22) that one has to increase δ. The proof for this can be found in Groetsch (1984) (Theorem 3.3.1), but it can also be made plausible as follows: starting from λ=0, qλ=qη, which is the solution of the minimization problem (Eq. 15). Hence, for λ=0 the discrepancy on the left-hand side of Eq. (22) is minimal. By increasing λ, one decreases all components of qλ (Eq. 20), thereby increasing the discrepancy.

-

Following the reasoning of the previous section, in order to obtain a larger value for δ, one must increase the noise level (compare Eq. 29). In doing so, one must keep the high-frequency components of unchanged because they must keep matching the level of the high-frequency components of the noise in the perturbed experiment η (given by Eq. 25). Hence, to increase δ, one sees from Eq. (29) that this is achieved by scaling up the low-frequency components of .

Summarizing these considerations, we have to increase the level of low-frequency contributions to η′ to develop a given solution for χ(t) towards monotonicity.

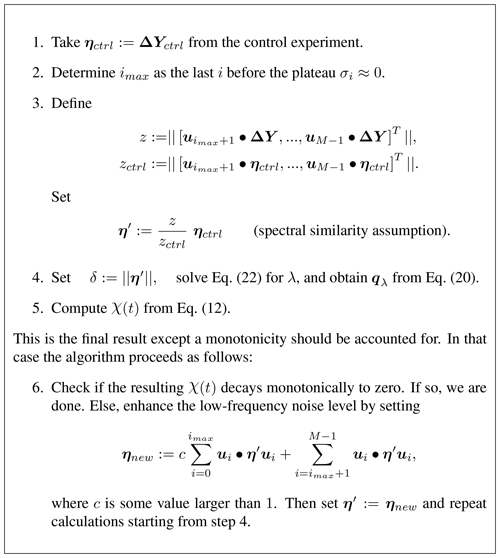

This leads to the overall algorithm listed in Fig. 1. The first five steps have already been explained at the end of Sect. 3.3.2. To account for monotonicity, the additional step 6 combined with the loop back to step 4 has to be iteratively executed. To enhance the low-frequency noise level as explained above, we calculate in step 6 a new noise vector ηnew by keeping the high-frequency part from η and enhancing its low-frequency components by a factor c>1. Then we recompute χ(t) from steps 4 and 5 and once more check for monotonicity.

For the RFI algorithm to be applicable, two conditions must be met: (1) a linear response exists for sufficiently weak perturbation and, (2) in addition to the response experiment, a control experiment is also available. The assumptions needed for the successful application of the algorithm are summarized in Table 1.

In application to real data, the presence of noise and nonlinearities may complicate the recovery of linear response functions. Therefore, by using artificial data generated from a toy model, in the present section we analyze the robustness of the RFI method in the presence of such complications. Robustness for real data is studied in Part 2.

4.1 Toy model and artificial experiments

As a toy model we take

Here the matrix describes the relaxation of the unperturbed model. The second right-hand-side term represents the deterministic forcing constructed from the time-dependent forcing strength and the coupling vector a∈ℝD. Additionally, the system is perturbed by the stochastic forcing , which for simplicity is assumed to be white noise. To make the relation to the carbon cycle considered in Part 2, the components of x may be understood as the carbon stored in plant tissues and soils at the different locations worldwide, so that the observable is the analog of globally stored land carbon. The solution of the system is

We assume from the outset M to be diagonal with eigenvalues , the being the relaxation timescales. Then

with the linear response function χ*(t) and the noise term η*(t) given by

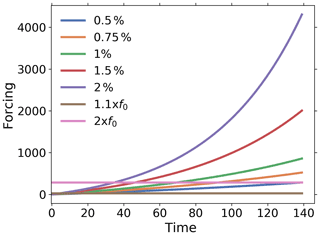

To complete the description of the toy model, one has to specify its parameters. For the dimension D we take 70, and the timescales are assumed to be distributed logarithmically between and , i.e., with . With carbon cycle applications in mind, the distribution of the components of the coupling vector is adapted from the log-normal rate distribution found by Forney and Rothman (2012) for the decomposition of soils:

with μ and σ chosen so that the peak timescale is around τ=5 and the limits of the log-normal distribution are approximately within τ=0.1 and τ=200 (see the “true” spectrum in Fig. 3). The components of n are taken as uncorrelated, i.e., 〈ni(0)nj(t)〉=ξδijδ(t), with standard deviation ξ being chosen differently in different experiments.

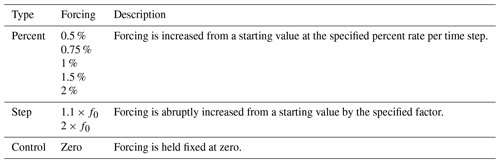

Table 2Experiments considered in this study. Forcings are shown in Fig. 2. To standardize the types of experiments considered here and in Part 2, we select forcing functions that mimic those employed in climate change simulation experiments to whose data the RFI method is applied in Part 2. Note that in principle any type of forcing could be employed.

Figure 2Forcings for the experiments considered in this study. To standardize the type of experiments considered here and in Part 2, we select forcing functions that mimic those employed in climate change simulation experiments to whose data the RFI method is applied in Part 2. Note that in principle any type of forcing could be employed.

In our experiments we explore how Y(t) behaves as a function of the forcing f(t). To this end, we choose a forcing function f(t) (see Table 2 and Fig. 2). The most obvious way to perform the toy model experiments would be to integrate Eq. (30). However, to have better control over the noise, it is for our purpose more appropriate to use the analytical solution (Eqs. 32–34). Hence, we numerically integrate Eq. (32), using the representation Eqs. (33) and (34). The data from these experiments are then used to investigate the performance of the RFI algorithm to recover χ*(t). Since all ai values are non-negative, the response function (Eq. 33) is monotonic, so that we apply the extended version of the algorithm (see Fig. 1, including step 6). In all experiments we generate N=140 data points to have a time series of similar length to the climate change simulations analyzed in Part 2 (140 years, one value for each year). To apply the RFI method, the noise from an associated control experiment is also needed. This is obtained from Eq. (34) by using another realization ni(t) of white noise for each system dimension i.

4.2 Choice of parameters for the RFI method

To apply the RFI method, we choose M=30 timescales for the recovery of χ*. Using and , we distribute the spectrum of timescales according to Eq. (10). These parameters are also used for the application on the carbon cycle in Part 2 and for the comparison with previous methods in Sect. 5.

4.3 Ideal conditions

To gain trust in the numerics of our implementation of the RFI method, we present in this section a technical test considering conditions under which it is known that the linear response function should be quite perfectly recoverable. Such ideal conditions are characterized by perfect linearity and absence of noise. Hence we use the presented toy model (which is anyway linear) in the absence of noise (n=0) for this test. Actually, this will not be a full test of the algorithm but only of the implementation of its basic apparatus (Sects. 3.2 and 3.3.1), culminating in Eq. (20) since in the absence of noise, the method to determine the regularization parameter λ (Sects. 3.3.2 and 3.5) is not applicable. One might think that in the absence of noise one could use Eq. (19) to determine the linear response function, but even under such ideal conditions the ill-posedness of the problem calls for regularization to suppress the numerical noise that prevents one from obtaining a sensible solution from Eq. (19) (see the discussion in the paragraph after Eq. 19). However, choosing the small value of for the regularization parameter when evaluating Eq. (20) is sufficient for this technical test.

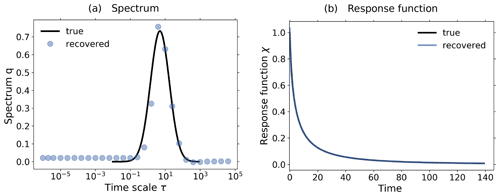

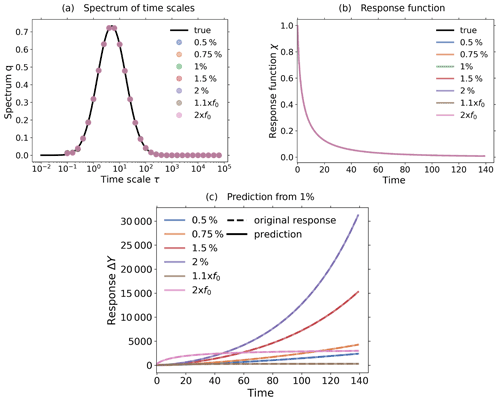

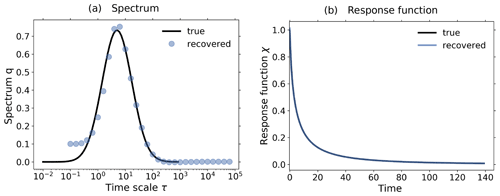

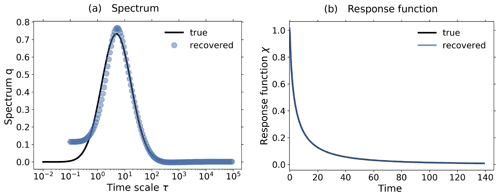

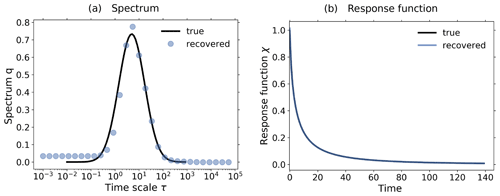

Figure 3c shows the response of the noiseless toy model to the forcings shown in Fig. 2; i.e., we performed the experiments listed in Table 2, although for the present test the control experiment is not needed.

Applying Eq. (20) to the experiment data gives the spectrum qλ shown in Fig. 3a. Here, we derived the spectrum qλ for each experiment separately, although in the figure only single dots are seen, because all results coincide so closely and are almost indistinguishable from the “true” solution q*, as was expected for this ideal case. The next Fig. 3b shows the response function obtained from the spectra qλ using Eq. (12). Obviously from Fig. 3a the “true” response function is reconstructed perfectly from whatever experiment is used. As a final test we predict using in Eq. (1) the response function obtained from the 1 % experiment the responses of other experiments, and indeed, these predicted responses are indistinguishable from the responses obtained directly from the experiments (see Fig. 3c). This latter result demonstrates perfect robustness of the numerical approach to recover the responses in this ideal case.

Figure 3Demonstration of robust recovery for noise-free data from the toy model: (a) recovered qλ; (b) recovered χ(t); and (c) original responses and predictions using χ(t) derived from the 1 % experiment. Reconstructed values are almost indistinguishable from original data. To plot the “true” spectrum of the toy model in subfigure (a), we used the relation , which can be obtained by comparing Eq. (33) with Eq. (12). Since from the discrete spectrum the response function and the response may be obtained for any time t, the spectrum is plotted as dots, while the response function and response are plotted as continuous lines. The regularization parameter is chosen as .

4.4 First complication: noise

The presence of noise may severely hinder the detailed recovery of χ*(t) due to the ill-posed nature of the problem. In order to demonstrate the effect of the addition of noise on the quality of the derived χ(t), we define a relative error for the prediction of the responses from different experiments. Consider a particular experiment – which is in our case the 1 % experiment – from which we have obtained by the RFI method the response function, which we call here χ0(t). The relative error for the prediction of the response from an experiment “k” by the recovered χ0(t) via the convolution (Eq. 1) is

where ⋆ stands for the discrete form of the convolution operation (Eq. 1) used to predict the responses, i.e., . In the following we denote as the prediction error for the experiment “k”. To measure the quality of the prediction across multiple experiments, we also define the mean prediction error

where K is the number of predicted responses. The reader may wonder why we quantify the quality of the recovery only indirectly from the responses found in different experiments and not directly from the recovery of χ(t). The reason is that in real applications the “true” χ(t) is not known but the responses are. The reliability of this indirect measure for the quality of the recovery is discussed in Sect. 5.

To study how the quality of the recovery depends on the noise level, we introduce the signal-to-noise ratio (SNR) of the response data from a perturbation experiment as

where δ is the final noise-level estimate obtained by the RFI method, as described in Sect. 3.3.2 (see Eq. 29).

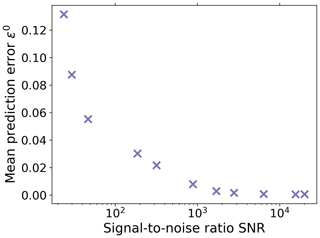

To demonstrate the dependence of the mean prediction error (Eq. 37) on the SNR, we performed 1 % experiments using different noise levels. The resulting dependence is shown in Fig. 4. As expected, for a small error a sufficiently large SNR is needed; i.e., a good recovery may be hindered by a too high noise level.

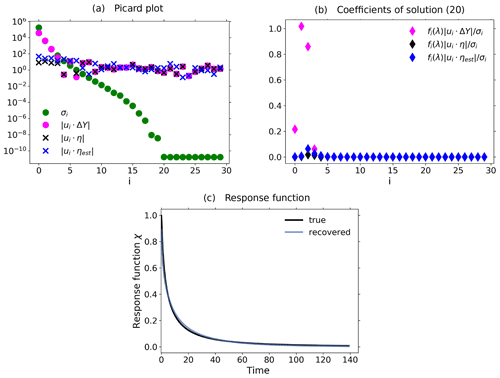

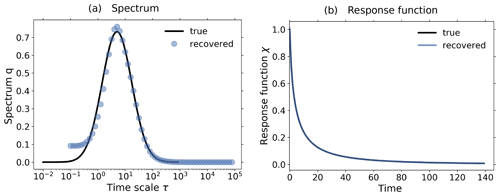

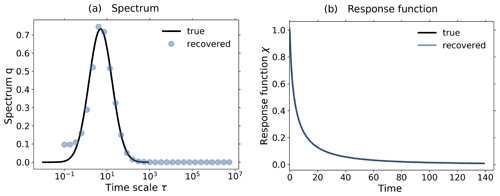

Figure 5Demonstration of the operation of the RFI algorithm in the presence of noise using toy model data from a 1 % and control experiment. To demonstrate the relevance of the noise-level adjustment (step 3 from Fig. 1), the standard deviation of the noise in the control experiment was taken to be 10 times smaller than that for the noise in the perturbed experiment. (a) Picard plot showing the singular values σi and the projection coefficients of the data , the “true” noise , and the final noise estimate ; (b) coefficients of the regularized solution (Eq. 20); (c) “true” and recovered linear response functions. Since the RFI algorithm correctly adjusted the noise level to the “true” noise in the data, the resulting regularized solution has contributions only from the first few projection coefficients which are not completely obscured by noise. Overall, the recovery is almost perfect, because the SNR (chosen as about 520) is still sufficiently good and because the noise was chosen to conform with the spectral similarity assumption. The regularization parameter determined by the algorithm is λ≈ 30 364. Because the noise-level adjustment (step 3 from Fig. 1) already gave a good estimate of the “true” noise in the data, no monotonicity check was needed (step 6 from Fig. 1).

In Fig. 5 we demonstrate how the overall noise-level adjustment in step 3 of the RFI algorithm (see Fig. 1) affects regularization to recover the correct response function. To guarantee that the overall level of the noise spectrum is indeed substantially different in the control and perturbed experiments (so that the adjustment is really needed), we take for the noise in the control experiment a standard deviation 10 times smaller than that for the noise in the perturbed experiment. To demonstrate how the adjustment works, it is helpful to consider the so-called “Picard plot”. This type of plot was originally introduced to analyze the spectral characteristics of an ill-posed problem (see, e.g., Hansen, 1992). In Fig. 5a we show the Picard plot for data obtained from a 1 % experiment with the toy model using a SNR of ≈ 520 to ensure a good recovery. The singular values σi decrease to extremely small values as the index i increases. This demonstrates that the problem of solving for the response function is indeed ill-posed and therefore regularization is needed for its solution (compare Eq. 19 with Eq. 20). The data labeled by are the “true” noise coefficients, obtained by subtracting the “clean” response Aq, known analytically from the toy model description, from the noisy toy model response ΔY. Comparing them to the projection coefficients of the response , one sees that with the exception of the first few coefficients the response is dominated by its noise content. Accordingly, only the information contained in these first few coefficients is recoverable from this ill-posed problem whatever method is used. The data labeled by have been added to the Picard plot to demonstrate how the RFI algorithm operates: these data are the projection coefficients of the estimated noise content in the data, where ηest is the final value of η′ obtained by the RFI method. Obviously, the RFI algorithm correctly estimates the “true” noise level not only at high frequencies – where it is correct by the noise-level adjustment in step 3 of the RFI algorithm (see Fig. 1) – but also at low frequencies, where it is predicted from the adjusted low-frequency components of the control experiment (also step 3). Accordingly, in this case the spectral similarity assumption holds, and there is no need to further adjust the noise level (step 6).

How the estimation of the noise in the data and the resulting regularization affects the projection coefficients of the spectrum q can be seen in Fig. 5b: only those few coefficients not dominated by noise contribute to the regularized solution. In this case these few coefficients selected by determining the regularization parameter λ from the noise level are sufficient for an almost perfect recovery of the response function, as seen in Fig. 5c.

It is important to note that in the situation of Fig. 5, where the overall noise level differs considerably in the control and perturbed experiments, a naive noise estimate taken from the control experiment without the adjustment in step 3 (as first suggested in Sect. 3.3.2) would severely underestimate the noise actually in the data. This would in turn lead to an underestimation of the regularization parameter (see Groetsch, 1984, Theorem 3.3.1). As a result, the wrong filtering by regularization would leave projection coefficients dominated by noise in the solution, likely leading to large errors in the recovered response function. This example therefore demonstrates the relevance of the noise adjustment in step 3.

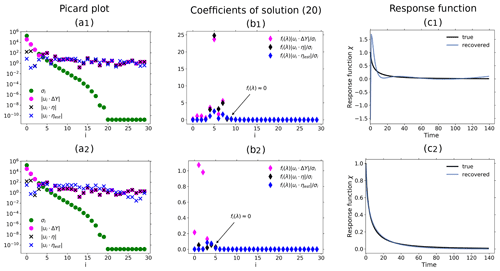

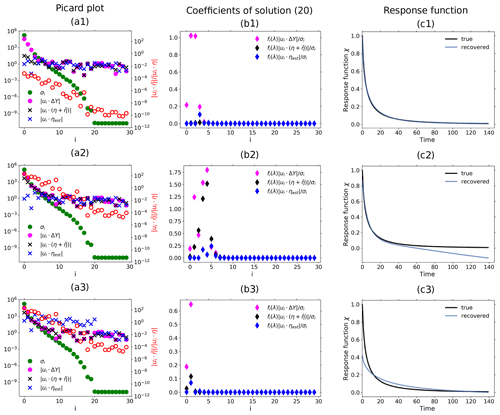

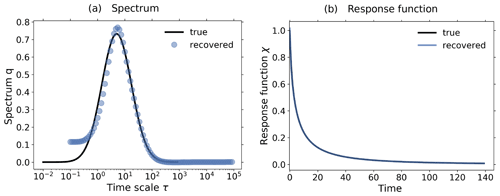

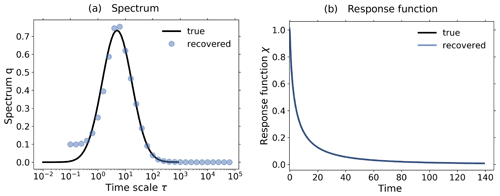

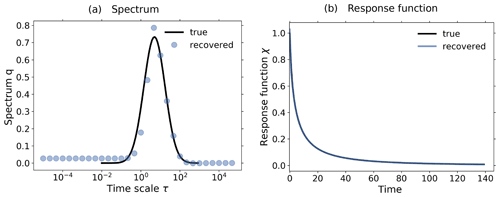

Figure 6Demonstration of the additional noise-level adjustment in the presence of a monotonicity constraint using toy model data from a 1 % and control experiment: (a) Picard plot; (b) coefficients of the regularized solution (Eq. 20) and (c) recovered linear response function. All the figures are based on the same toy model experiments using a SNR = 1189. To demonstrate the effect of the noise-level adjustment, the spectral similarity assumption is broken by artificially increasing the low-frequency components of the noise in the experiments. The plots in the first row show the results from the RFI algorithm in the absence of additional noise-level adjustment (step 6 in Fig. 1). Although the “true” response function of the toy model is monotonic, the response function recovered by the RFI algorithm is non-monotonic (last figure in the first row). However, if the noise adjustment is switched on (second row), the response function is correctly recovered as monotonic (last figure in the second row). Arrows in subfigures (b) indicate the index icritical that separates components of the solution that are only weakly suppressed (i<icritical) from those that are almost completely suppressed (i≥icritical). The regularization parameter determined by the algorithm is λ≈1 for the first row and λ≈ 11 450 for the second. For more details, see the text.

Finally in this section, we demonstrate that by accounting for monotonicity of the linear response function, one may obtain a better estimate of the low-frequency components of the noise whereby the recovery of the response function is improved. In Fig. 6 we plot results from toy model experiments where the spectral similarity assumption does not hold. This was achieved by artificially enhancing the low-frequency components of the noise η*(t) in Eq. (32). The top row plots show the results from the recovery when the additional noise-level adjustment was not used. Because the spectral similarity assumption does not hold, the estimated low-frequency components of the noise do not match those of the “true” noise (Fig. 6a1). Ideally, only those four projection coefficients of the data which are larger than the projection coefficients of the “true” noise should contribute to the recovered response function. Instead, as seen in Fig. 6b1, the coefficients with indexes between i=4 and i=7 give the dominant contributions because they are larger than the estimated noise coefficients (compare Fig. 6a1). Therefore, the recovery of the response function is poor (Fig. 6c1). However, since in this case the low-frequency components of noise are such that the recovered response function is non-monotonic although the “true” response function is known to be monotonic, one may further adjust the noise level to improve the results.

This further adjustment is the purpose of step 6 of the RFI algorithm (see Fig. 1). Its effect is demonstrated by the second-row plots of Fig. 6: the estimated noise components now match the “true” noise components better that had been underestimated in the first row (compare Fig. 6a2 and a1), so that only those four components that carry information (compare in Fig. 6a2 the projections for low index i with ) survive the regularization (Fig. 6b2). As a result, the quality of the recovery of the response function has considerably improved (Fig. 6c2).

4.5 Second complication: nonlinearity

The second difficulty in recovering the linear response function χ(t) from a perturbation experiment may arise from nonlinearities present in the considered system. Generally it must be suspected that nonlinearities are present, so that they should not hurt as long as they are small, and indeed, from the viewpoint of regularization, contributions from nonlinearities can be considered an additional noise, so that in principle they can also be filtered out. However, as with noise, when getting stronger they cause a deterioration of the recovery of the response function. In the following, we show this more formally and discuss in detail how the RFI algorithm behaves in the presence of nonlinearities.

To understand how contributions from nonlinearities affect the recovery of the response function, we write the nonlinear terms in Eq. (5) collectively as . This formally gives

instead of Eq. (13). Plugging this into Eq. (20), the spectrum is obtained as

Accordingly, the nonlinear contributions can be understood as an additional noise in the spectrum qλ, so that the theory of regularization fully applies when replacing η by the combined noise . Hence, as in their absence, nonlinearities do not prevent the application of regularization as long as the signal is not buried under this combined noise.

However, for the RFI algorithm to give good results, a second condition is that the contributions from must not be large compared to those from η. To understand this, one must realize that the response and with it the nonlinear contributions are dominated by low-frequency components because of the low-frequency nature of the forcing for the problems of interest (for instance in % experiments). The RFI algorithm uses an estimate for the noise level in the perturbation experiment obtained from the control experiment assuming that the spectral distribution is approximately the same in the noise from the control experiment and the noise in the data from the perturbation experiment (spectral similarity assumption; step 3 of Fig. 1). However, the control experiment does not contain any contributions from nonlinearities because the forcing is zero. Therefore, if in the data from the perturbation experiment the contributions from nonlinearities are not small compared to those from η, the spectral similarity assumption does not hold. Since this assumption is at the heart of the RFI algorithm, its breakdown leads to a poor recovery of the linear response function.

All this is demonstrated in the following by toy model experiments. For this purpose, we artificially consider the response of the toy model not in Y but in its nonlinear transform

where the parameter a determines the strength of the nonlinearity. The particular functional form chosen for Ynonlin(t) mimics the nonlinear effect of saturation encountered for instance in the land carbon sink when atmospheric CO2 rises to high values. In the following, to demonstrate the effect of nonlinearities, we set the noise level in the toy model experiments to a rather small value in order to have a good SNR in the experiments considered.

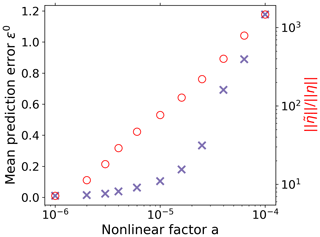

Figure 7Mean prediction error (Eq. 37) of the recovery when deriving χ(t) for different values of the nonlinearity factor a of the toy model. As a increases, the recovery of χ(t) deteriorates because the level of the contributions from nonlinearities becomes large compared to the noise level ; how these terms are computed for the toy model is explained in Appendix D. To demonstrate here the pure effect from the breakdown of the spectral similarity assumption, the RFI algorithm is used here without the additional noise-level adjustment enforcing monotonicity.

In Fig. 7 we show by plotting the mean prediction error (see Eq. 37) how the recovery of the response function deteriorates as the nonlinearity parameter a increases. To demonstrate that this is indeed caused by a breakdown of the spectral similarity assumption, we plot in addition the ratio . It is seen that, indeed, as claimed above, the recovery works well only when this ratio is not large, i.e., when the contributions from nonlinearities are not large compared to those from the noise η.

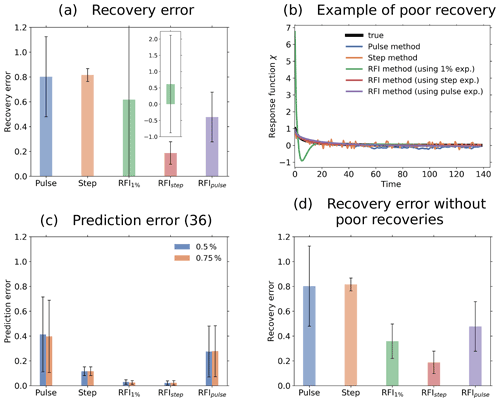

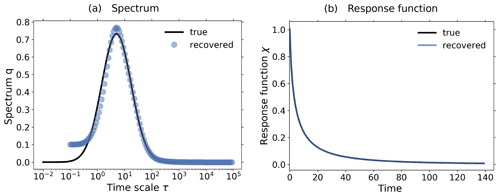

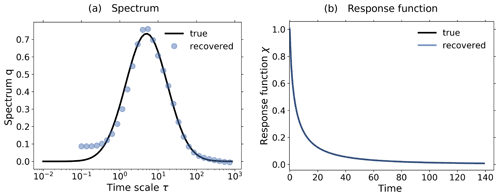

Figure 8Demonstration of how nonlinearities affect the recovery of the response function: (a) Picard plot; (b) coefficients of regularized solution (Eq. 20) and (c) recovered linear response function. First row: nonlinearity factor (no monotonicity check); second row: nonlinearity factor (no monotonicity check); third row: nonlinearity factor (with monotonicity check). The noise is overestimated in the low-frequency spectrum in the third row because nonlinearities yield a derived χ(t) that does not obey the monotonicity constraint. As a consequence, the method increases the level of low-frequency components until the monotonicity constraint is obeyed. The failure to obey the monotonicity constraint and consequent large overestimation of noise in this case can be taken as an indication of the presence of nonlinearities in the response. Note that the “true” linear response function in this nonlinear case a≠0 is obtained analytically from the linear case a=0 via Eq. (41) (see Appendix D). The regularization parameter determined by the algorithm is λ≈3120 for the first row, λ≈74 for the second, and λ≈14 611 873 for the third. For more details, see the text.

More insight into how nonlinearities affect the recovery is obtained from the more detailed SVD analysis shown in Fig. 8. The first row of subfigures was obtained from the toy model assuming a rather small nonlinearity (). In the Picard plot (Fig. 8a1) it is seen that in this case both conditions necessary for a good recovery are met: first, the signal is clearly visible above the combined noise (see the first four components). Second, in this case is small over the whole spectrum; i.e., the contributions from are small compared to those from η. As explained above, because this second condition is also met, the noise estimate from the RFI algorithm ηest is a good approximation to the combined noise across all frequencies (compare in the Picard plot to ). As a result, the four components selected by the regularization for the recovered solution (Fig. 8b1) are precisely those dominated by the signal (compare with ). This example demonstrates that as long as these two conditions are met, small contributions from nonlinearities do not prevent a good recovery of the response function (see Fig. 8c1).

In the second row of Fig. 8, we demonstrate how the violation of the second condition obstructs the recovery. In this case the nonlinearity parameter has been given a larger value (). As a consequence, one sees in the Picard plot that the low-frequency components of the combined noise are enhanced. The first condition is still met: the signal is visible above the combined noise (see the first two components). However, now the ratio becomes large at low frequencies, violating the second condition. As explained, the violation of the second condition leads to the breakdown of the spectral similarity assumption. As a result, the RFI algorithm underestimates the combined noise at low frequencies (compare in the Picard plot to ). Using this wrong noise estimate, regularization selects components for the recovered solution that are to a large extent dominated by the combined noise (see components i=2 to i=6 in Fig. 8b2). The result is that the strong low-frequency contributions from nonlinearities deteriorate the recovery of the response function at long timescales (Fig. 8c2).

In the third row, we demonstrate for this type of nonlinearity that by accounting for monotonicity one can remove from the recovered solution all components dominated by noise. For this purpose, we set the nonlinearity parameter to the same value as for the second row () but employ the additional noise-level adjustment (step 6 of Fig. 1); i.e., the low-frequency range of the noise estimate is now automatically adjusted in order to recover a response function that decays monotonically to zero. As seen in the Picard plot, the additional noise-level adjustment results in an artificial enhancement of the low-frequency components of the noise estimate, with a large jump separating the low- from high-frequency ranges. In this case, such enhancement is able to better estimate the largest components of the combined noise (first few components in the Picard plot). As a consequence, regularization correctly selects for the recovered solution only the two first components which are not dominated by noise (Fig. 8b3). Unfortunately, as seen in Fig. 8c3, these two first components do not contain enough information for a perfect recovery, since the quality improves at long timescales but deteriorates at short timescales (compare Fig. 8c3 and c2). This is a consequence of how regularization works: it filters out components dominated by noise (or in this case nonlinearity) at the expense of also removing useful information contained in those components.

As a last test of the quality of the results given by the RFI method in application to the toy model, in this section we compare our method against two existent methods in the literature to identify response functions in the time domain. The comparison is performed for the particular case where the response function is known to be monotonic and also for the more general case where it is not. As a side issue, this section also reveals some insight into the relation between the quality of the recovery of χ(t) as measured by the prediction of responses and the quality of the recovery of χ(t) itself.

In climate science, the most commonly used method is to obtain χ(t) from an impulse response, i.e., the response to a perturbation of Dirac delta type (e.g., Siegenthaler and Oeschger, 1978; Maier-Reimer and Hasselmann, 1987; Joos and Bruno, 1996; Joos et al., 1996; Thompson and Randerson, 1999; Joos et al., 2013). Here we call it the pulse method. Although this method is conceptually straightforward, in some cases it might not yield satisfactory results. Since the perturbation is only one “pulse”, depending on the observable of interest it may give a response with a small SNR. As a consequence, the recovered response function may be severely affected by noise. On the other hand, if the strength of the pulse is made large to obtain a good SNR, the linear regime may be exceeded. In this case, the impulse response does not correspond anymore to the linear response function.

The second method consists of deriving the linear response function from a step response, i.e., the response to a Heaviside-type perturbation (e.g., Hasselmann et al., 1993; Ragone et al., 2016; MacMartin and Kravitz, 2016; Lucarini et al., 2017; Van Zalinge et al., 2017; Aengenheyster et al., 2018). Here we call it the step method. Due to the special form of this “step” perturbation, the linear response function can in principle be derived from

where fstep is the step perturbation and ΔYstep is the corresponding response. Unfortunately, such derivation involves numerical differentiation, which is known to be an ill-posed problem (Anderssen and Bloomfield, 1974; Engl et al., 1996). Because the problem is ill-posed, noise is amplified, potentially resulting in large errors in the derived linear response function.

These two methods therefore share two limitations: first, they require a special perturbation experiment; second, because of noise in the data they might yield a response function with large errors. In principle, the second limitation may be overcome by using instead of a single response the ensemble average over multiple responses. However, this comes at the expense of the numerical burden of performing multiple experiments, which is especially large when dealing with complex models such as state-of-the-art Earth system models.

The main advantages of the RFI method lie precisely in overcoming these two limitations: it recovers the response function from any type of perturbation experiment and automatically filters out the noise by regularization.

For the results of this section, we performed ensembles of 200 simulation experiments with the toy model (see Sect. 4.1). Each ensemble member is defined by a realization of the noise η*(t) with a fixed standard deviation (see Eq. 34). Each realization was added via Eq. (32) to three experiments: 1 %, step (2×f0), and pulse (4×f0). Note that because of the issue with the SNR mentioned above, we had to employ for the pulse experiment twice the forcing strength employed for the step experiment. Further, for each ensemble member an additional realization of the noise was generated to serve as a control experiment to compute the noise estimate for the RFI method (step 1 of Fig. 1).

We computed the response function by the pulse and step method as follows. For a pulse experiment the forcing is f(t)=aδ(t) with forcing strength a, so that the response is given by

Therefore, for the pulse method we took the response from the pulse experiment and obtained the response function by

The recovery by the step method was calculated by taking the response from the step experiment and applying Eq. (42). The derivative was computed by forward difference.

To obtain comparable results with these two methods, we recovered the response function by the RFI method from the same pulse and step experiments. To compare the quality of the results using also an experiment not decidedly tailored for the identification, we include additionally the recovery from the 1 % experiment.

To obtain a quantitative comparison for the quality of the recovery for each method, we define the recovery error:

where χ is the recovered response function and χ* is the “true” response function, which is known because we use the toy model. In contrast to the prediction error that measures the quality of the recovery of χ(t) by means of the response (see Eq. 45), the recovery error εr measures the quality of the recovery of χ(t) itself. Another reason for introducing the recovery error is to compare its results with results from the prediction error. By doing that, we can gain insight into how much the prediction error can be trusted as an indirect measure of the quality of recovery in real applications, where the “true” response function is not known.

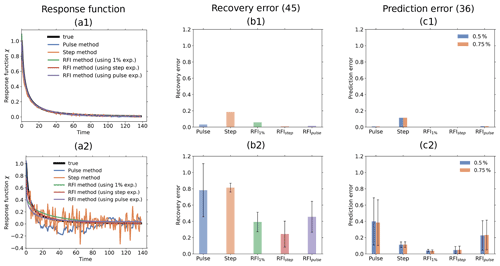

Figure 9Quality of response function recovery by the full RFI method (including step 6 in Fig. 1) in comparison to the pulse and step method. Subscripts at “RFI” indicate the experiment from which the response function was recovered with the RFI method. First row: taking the average over the whole ensemble of toy model experiments for recovery; second row: performing the recovery for each ensemble member separately. (a1) Recovered response function; (b1) recovery error; (c1) prediction error (Eq. 36); (a2) example of recovered response function from one ensemble member; (b2) statistics of recovery error; (c2) statistics of prediction error (Eq. 36). The prediction error is separately computed for the 0.5 % and 0.75 % experiments. Taking the ensemble average, all methods perform well (see first row). However, taking only one ensemble member, the RFI algorithm gives better recovery and prediction errors than the pulse and step methods when comparing the same responses (see second row).

First, we compare the pulse and step methods against the full RFI algorithm, i.e., the RFI algorithm taking monotonicity into account (step 6 in Fig. 1). Results are shown in Fig. 9. In the first row of subfigures, we took for the recovery the ensemble average over the 200 responses for each experiment. For the RFI method, we took the ensemble average over the control experiments as well to estimate the noise (step 1 of Fig. 1). As shown in Fig. 9a1, with this approach all the methods recover the response function almost perfectly. The quality of the recovery is quantified by the recovery error in Fig. 9b1. The RFI method shows the smallest values for the step and pulse experiments when compared to the step and pulse methods. Overall, the step method clearly shows the largest value. To quantify the quality of the prediction, we plot in Fig. 9c1 the prediction error (Eq. 36). As seen, values are even smaller than for the recovery error. Overall, we see a similar pattern: the step method again stands out, with other methods showing much smaller error values.

In the second row, we compare results by taking only a single response for the recovery. Since the quality of the recovery by the different methods may vary depending on the particular noise realization, we again performed 200 simulations to obtain better statistics but this time deriving the linear response function for each ensemble member separately. Figure 9a2 shows an example of recovery for one of the ensemble members. As expected, the recoveries by the pulse and step methods largely deviate from the true response function. For the pulse method, the large errors result from the low SNR of the pulse response: even taking twice the forcing strength of the step experiment, the SNR of the pulse response is of order 100 against order 101 for the step and 1 % responses. For the step method, on the other hand, the large errors are not a result of a low SNR but of the noise amplification associated with the ill-posedness of numerical differentiation. In contrast to the recovery by these two methods, because of regularization the recoveries by the RFI method are smoother and visually seem to better fit the true response function. To quantitatively check these results, we plot in Fig. 9b2 for each method the average and standard deviation over the 200 values of the recovery error (one for each ensemble member). The figure shows that the pulse and step methods indeed display the largest average recovery error, with the pulse method having a much larger spread. Such spread is probably related to the low SNR in the response from the pulse experiment. The results from the 1 % and pulse experiments by the RFI method are better, showing comparable error magnitudes. The smallest average recovery error is obtained from the RFI method using the step experiment. In Fig. 9c2 we show the average and standard deviation over the 200 values of the prediction error (Eq. 36). The smallest average prediction errors are obtained from the RFI method using the 1 % and step experiments. The largest errors are obtained for the pulse method and the RFI method using the pulse experiment. In contrast to the situation for the recovery error, for the prediction error no substantial difference between the two is found. Note also that when comparing recoveries from the same response (i.e., comparing “Pulse” with “RFIpulse” and “Step” with “RFIstep”), the RFI method gives better results than both the pulse and step methods. Another interesting point is that prediction errors for the step method remain approximately unchanged by taking the ensemble mean and a single response (compare “Step” in Fig. 9c1 and c2). Overall, as in the first row, the prediction error shows for each individual method values smaller than the recovery error. However, now there is a difference between the plots for the recovery and prediction error: although the pulse and step methods show the largest averages, with values of comparable size for the recovery error, for the prediction error the pulse method has the largest average, with a value much larger than the step method.